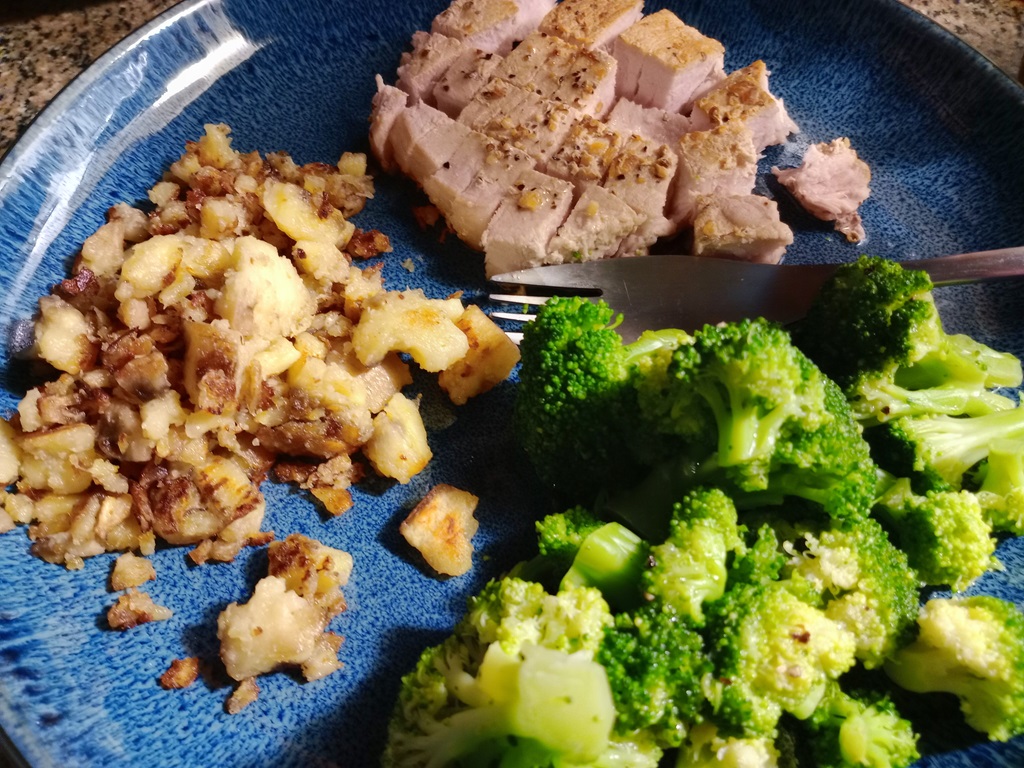

I made green banana hash tonight to go with our pork chops and broccoli. Microwaved four green bananas for five minutes (cut off ends, cut along back to cut through the peel, then microwave). I let them sit about ten minutes to cool down, then removed the peel. Diced into small pieces and then smashed some to make really small pieces. Added a little salt, then sauteed in coconut oil until crispy. They’re really good – and with bright green bananas, they do a very good impression of potatoes.

Venafi Cert Issuance Fails after Windows 2022 Upgrade

Certificate Issuance Fails

After requesting a certificate, the request immediately fails with the error:

Failed to post CSR with error: Unknown certificate profile type.

I think it is just a coincidence, but wanted to document the scenario in case it comes up again. The application makes web calls to a vendor API to issue certs. The API calls, after the upgrade, were failing.

In this scenario, a call was being made to {base_url}/api/ssl/v1/types, and the connection failed. Since the list of valid certificate profiles could not be retrieved, the request failed with an error saying the certificate profile type was unknown.

GET https://hard.cert-manager.com/api/ssl/v1/types?organizationId=####
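One way to picture how a transport failure can surface as a profile error is sketched below. This is a hypothetical illustration, not the vendor's code: the names `load_profile_types`, `validate_profile`, and `broken_fetch` are made up, and the profile name is a placeholder. If the types lookup fails and the caller falls back to an empty list, every requested profile looks unknown.

```python
from typing import Callable, Iterable, List


def load_profile_types(fetch: Callable[[], Iterable[str]]) -> List[str]:
    """Return the profile types from the API, or an empty list if the call fails."""
    try:
        return list(fetch())
    except OSError:
        # Connection reset/closed: the real cause is swallowed here (assumed behavior).
        return []


def validate_profile(requested: str, known: List[str]) -> str:
    """Mimic a validator that only knows about the list it was handed."""
    if requested not in known:
        return "Unknown certificate profile type."
    return "OK"


def broken_fetch() -> Iterable[str]:
    """Stand-in for the failing GET to /api/ssl/v1/types."""
    raise ConnectionResetError("The underlying connection was closed")


if __name__ == "__main__":
    known = load_profile_types(broken_fetch)
    print(validate_profile("placeholder-profile", known))
```

The point of the sketch is only that the error message describes the symptom (an empty list of types), not the cause (the dropped connection).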

Looking at a debug trace, the following flow was observed:

- Authentication headers sent: login=<REDACTED>, password=<REDACTED>, customerUri=<REDACTED>

- Transport-level failure (no HTTP status returned on the failing attempt)

- Symptoms: “Decrypt failed with error 0X90317” followed by “The underlying connection was closed: The connection was closed unexpectedly.”

- Context: Revocation checks reported “revocation server was offline,” then the client proceeded; long idle/keep-alive reuse likely contributed to the close.

Connection reuse vs server keep-alive: Apache is advertising Keep-Alive: timeout=3. The .NET client is reusing long-idle TLS connections via the proxy; by the time it sends application data, the server/proxy has already closed the session, leading to “underlying connection was closed” errors.

Revocation checks through the proxy: The .NET trace shows “revocation server was offline” before proceeding. That extra handshake work plus proxy blocking CRL/OCSP can increase latency and contribute to idle reuse issues.

.NET SChannel quirks: Older HttpWebRequest/ServicePoint behaviors (Expect100-Continue, connection pooling) can interact poorly with short keep-alive servers/proxies.
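The keep-alive mismatch described above can be reasoned about with a small sketch. This is not what the .NET client does internally; it only illustrates the decision a client would need to make, assuming the server advertises a header such as `Keep-Alive: timeout=3`. The function names and the one-second safety margin are assumptions for illustration.

```python
import re
from typing import Optional


def keep_alive_timeout(header_value: str) -> Optional[int]:
    """Extract the timeout (seconds) from a Keep-Alive value like 'timeout=3, max=100'."""
    match = re.search(r"timeout\s*=\s*(\d+)", header_value, re.IGNORECASE)
    return int(match.group(1)) if match else None


def safe_to_reuse(idle_seconds: float, header_value: str, margin: float = 1.0) -> bool:
    """True only if the connection has been idle for less than the advertised timeout minus a margin."""
    timeout = keep_alive_timeout(header_value)
    if timeout is None:
        # No advertised timeout: be conservative and open a new connection.
        return False
    return idle_seconds < (timeout - margin)


if __name__ == "__main__":
    print(safe_to_reuse(0.5, "timeout=3"))   # recently used connection
    print(safe_to_reuse(30.0, "timeout=3"))  # long-idle connection
```

With a three-second server timeout, anything that sat idle in the pool for more than a couple of seconds is likely already closed on the far side.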

Luckily, this is a .NET application, and you can create custom configuration files for .NET apps. In the folder with the binary, look for a text file named BinaryName.exe.config.

If none exists, create one. The following disables the proxy:

<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.net>
    <!-- Turn off use of the system proxy for this app -->
    <defaultProxy enabled="true">
      <proxy usesystemdefault="false" />
    </defaultProxy>
  </system.net>
</configuration>
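When copying a config like this from a web page, it may be worth confirming the file still parses, since curly quotes are not valid XML attribute delimiters. The following is a minimal sketch using Python's standard library; `CONFIG_TEXT` and `system_proxy_disabled` are names made up for this example, and in practice you would read the text from the real BinaryName.exe.config file.

```python
import xml.etree.ElementTree as ET

# Same content as the config above, with straight quotes.
CONFIG_TEXT = """<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.net>
    <!-- Turn off use of the system proxy for this app -->
    <defaultProxy enabled="true">
      <proxy usesystemdefault="false" />
    </defaultProxy>
  </system.net>
</configuration>
"""


def system_proxy_disabled(config_text: str) -> bool:
    """Parse the config and report whether <proxy usesystemdefault="false"> is present.

    Raises xml.etree.ElementTree.ParseError if the text is not well-formed XML.
    """
    root = ET.fromstring(config_text.encode("utf-8"))
    proxy = next(root.iter("proxy"), None)
    return proxy is not None and proxy.get("usesystemdefault", "").lower() == "false"


if __name__ == "__main__":
    print(system_proxy_disabled(CONFIG_TEXT))
```

A `ParseError` here is a quick hint that the file picked up typographic quotes or a mangled comment marker along the way.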

Client Connections to HTTPS IIS Site Fail After Upgrade to Windows Server 2022

Client connections to the HTTPS IIS site failed with the following error:

Secure Connection Failed

An error occurred during a connection to certmgr-dev.uniti.com.

PR_CONNECT_RESET_ERROR

Error code: PR_CONNECT_RESET_ERROR

The page you are trying to view cannot be shown because the authenticity of the received data could not be verified. Please contact the website owners to inform them of this problem.

The IIS site was set to “accept” client certificates.

- Client Certificates = Accept means IIS/HTTP.sys will try to retrieve a client certificate only if the app touches Request.ClientCertificate (or a module that maps/validates client certs). That retrieval is done via TLS renegotiation in TLS 1.2.

- On Server 2022, browsers prefer TLS 1.3. TLS 1.3 does not support the old renegotiation used to fetch a client cert mid‑request. When your app/module at “/” accesses the client cert, IIS attempts renegotiation, fails, and the connection is reset.

Setting Client Certificates to “Ignore” prevents IIS from attempting to renegotiate, so the site loads. This obviously isn’t a solution if you want to use client certificates to authenticate … but we’re authenticating through Ping, so don’t actually need the client certs.
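To see which protocol version a client actually negotiates with the site, a small probe along these lines may help. It is a sketch using only Python's standard `ssl` module; the helper names are made up for illustration, and it only reports the negotiated version. It does not exercise the client-certificate retrieval itself.

```python
import socket
import ssl
from typing import Optional


def make_context(max_version: ssl.TLSVersion = ssl.TLSVersion.MAXIMUM_SUPPORTED) -> ssl.SSLContext:
    """Build a default client context, optionally capped at a TLS version."""
    ctx = ssl.create_default_context()
    ctx.maximum_version = max_version
    return ctx


def negotiated_version(hostname: str, port: int = 443,
                       ctx: Optional[ssl.SSLContext] = None,
                       timeout: float = 6.0) -> Optional[str]:
    """Connect and return the negotiated protocol name, e.g. 'TLSv1.3'."""
    ctx = ctx or make_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with ctx.wrap_socket(sock, server_hostname=hostname) as ssock:
            return ssock.version()


if __name__ == "__main__":
    host = input("Hostname: ").strip()
    print("Default:", negotiated_version(host))
    capped = make_context(ssl.TLSVersion.TLSv1_2)
    print("Capped at TLS 1.2:", negotiated_version(host, ctx=capped))
```

If the default connection reports TLS 1.3 and the site only misbehaves for clients that negotiate 1.3, that is consistent with the renegotiation explanation above.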

Green Banana Pancakes

Another attempt to make a less inflammatory version of pancakes – this recipe was superb. Fluffy pancakes (not light and fluffy, almond flour makes a denser, heavier pancake), very tasty, and incredible paired with cherry juice and cherries.

Ingredients:

- 2 green bananas, steamed and mashed

- 1 cup almond flour

- 2 tbsp cassava flour

- 1 tbsp maple syrup

- 1 tsp baking powder

- 1/2 tsp salt

- 3/4 cup almond milk

- 1 egg, beaten

- 2 tbsp coconut oil or olive oil

Method:

Steam the bananas in the microwave (about 5 minutes) or boil them (20 minutes). Allow to cool, peel, and then mash.

Mix the dry ingredients together. Mix the wet ingredients together and combine with mashed bananas. Slowly combine the wet and dry ingredients.

Allow batter to sit for 10 minutes (almond flour will absorb moisture, so it will thicken as it rests)

Over medium low heat, cook pancakes. Pour some batter into the pan. When bubbles start to form and not pop, flip and cook for a few more minutes.

For a sauce, I heated juicy frozen cherries. The cherry juice was drizzled over the pancakes, and the cherries were served on top.

Plant Bonanza

We are making a list of unique, nutritious plants to grow. I’ve gotten seeds for most of the ones that will grow in our area.

| Plant | Latin name | Notes | Annual or Perennial | Zone | Benefit |
| --- | --- | --- | --- | --- | --- |
| Alfalfa | Medicago sativa | True Leaf Market | Perennial herb | Zone 3-9 | Nutritious sprouts; soil improvement via N-fixation |
| Amaranth | Amaranthus spp. | Already have seeds | Annual herb | Zone 2-11 | Protein-rich grain; mineral-dense greens |
| American Chestnut | Castanea dentata | Already have trees | Perennial tree | Zone 4-8 | Starchy, gluten-free nuts; wildlife support |
| Ashitaba | Angelica keiskei | Strictly Medicinal – sold out | Perennial | Zone 7+ | |
| Asparagus | Asparagus officinalis | Planted at ridgeline | Perennial vegetable | Zone 3-8 | High in folate, vitamin K, and fiber |
| Blue hubbard squash | Cucurbita maxima ‘Blue Hubbard’ | True Leaf Market | Annual vine | Zone 3-10 | Beta-carotene-rich flesh; long storage |
| Breadfruit | Artocarpus altilis | | Perennial tree | Zone 10-12 | Starchy fruit staple; carbs, fiber, potassium; eaten roasted/boiled/baked |
| Buckwheat | Fagopyrum esculentum | Already have seeds | Annual herb | Zone 3-10 | Gluten-free grain; high in rutin (flavonoid) |
| Cattail | Typha spp. | | Perennial aquatic | Zone 3-10 | Multiple edible parts: spring shoots (“cossacks”), pollen (protein), rhizomes (starch) |
| Chickweed | Stellaria media | Dave’s garden seeds | Annual (often self-seeding) | Zone 2-9 | Edible greens; vitamin C and minerals; mild flavor for salads |
| Chicory | Cichorium intybus | | Perennial herb | Zone 3-9 | Inulin-rich roots; bitter greens for salads |
| Chokeberry / Aronia | Aronia melanocarpa | | Perennial shrub | Zone 3-8 | Very high antioxidants (anthocyanins), vitamin C; tart berries for juice/jam |
| Chufa / Tiger Nut | Cyperus esculentus var. sativus | | Perennial sedge (often grown as annual) | Zone 8-11 | Nut-like tubers with healthy fats, resistant starch, fiber; used for horchata |
| Comfrey | Symphytum officinale | Ridgeline | Perennial herb | Zone 3-9 | Not nitrogen-fixing; excellent chop-and-drop mulch/biomass, accumulates K/Ca; use leaves sparingly if eaten due to PA alkaloids |
| Dandelion | Taraxacum officinale | All over the place | Perennial herb | Zone 3-10 | Leaves/roots rich in iron, vitamin A, K, C; edible greens, flowers, root coffee |
| Duckweed | Lemna spp. | Aquatic | Aquatic perennial | Zone 4-11 | High-protein feed; water remediation potential |
| Egyptian Walking Onion | Allium × proliferum | | Perennial | Zone 3-9 | Reliable perennial onion greens and bulbs |
| Fava Bean | Vicia faba | Dave’s garden seeds | Annual legume | Zone 3-10 | Protein, fiber, folate, iron; contains L-DOPA (used in Parkinson’s research) |
| Flax seeds | Linum usitatissimum | True Leaf Market | Annual herb | Zone 3-10 | High in ALA omega-3, fiber, and lignans |
| Good King Henry | Blitum bonus-henricus | Dave’s garden seeds | Perennial | Zone 3-8 | Edible spinach-like leaves and shoots; calcium, vitamin C, some B vitamins |
| Groundnut | Apios americana | https://peacefulheritage.com/products/lsu-groundnut-plants-apios-americana-naturally-grown | Perennial vine (legume) | Zone 4-9 | Protein-rich edible tubers; nitrogen-fixing; also edible beans/flowers |
| Ironwort | Sideritis spp. (e.g., Sideritis scardica) | | Perennial subshrub | Zone 7-10 | Traditional tea with antioxidant/soothing properties |
| Jerusalem Artichoke / Sunchoke | Helianthus tuberosus | | Perennial tuber | Zone 3-9 | Inulin-rich tubers (prebiotic), potassium; prolific, can spread |
| Lamb’s Quarters | Chenopodium album | Dave’s garden seeds | Annual | Zone 2-11 | Tender greens high in protein (for a leaf), calcium, iron, vitamins A/C |
| Lovage | Levisticum officinale | Already have seeds | Perennial | Zone 4-9 | Strong celery-like flavor for stocks/salads; minerals; productive cut-and-come-again herb |
| Lupini Bean | Lupinus albus | True Leaf Market | Annual legume | Zone 4-10 | High-protein beans; nitrogen fixation |
| Medlar | Mespilus germanica (syn. Crataegus germanica) | | Perennial tree | Zone 5-9 | Unique fruit; vitamin C; winter delicacy |
| Mesquite | Prosopis spp. (e.g., P. glandulosa) | | Perennial tree | Zone 8-11 | Low-glycemic flour from pods; nitrogen fixer |
| Moringa | Moringa oleifera | | Perennial tree | Zone 9-11 | Leaves rich in vitamins A, C, calcium, and protein |
| Nine Star Broccoli | Brassica oleracea | https://plantingjustice.org/products/rare-perennial-9-star-broccoli – sold out | Perennial | Zone 6-9 | Multiple harvests of white heads and side shoots |
| Oca | Oxalis tuberosa | | Perennial (often grown as annual) | Zone 8-10 | Carbohydrate-rich tubers; vitamin C, potassium; cool-climate Andean staple |
| Oyster Mushroom | Pleurotus ostreatus | | Fungus (cultivated) | Zone 3-10 | Protein; ergothioneine and other antioxidants |
| Palmer Amaranth | Amaranthus palmeri | | Annual herb | Zone 7-11 | Nutrient-dense greens; drought tolerance |
| Prairie Turnip / Indian Breadroot | Pediomelum esculentum | https://www.nortonnaturals.com/products/prairie-turnip-pediomelum-esculentum | Perennial legume | Zone 4-7 | Edible tuber with notable protein for a root; nitrogen-fixing |
| Prickly Pear / Nopal | Opuntia ficus-indica | | Perennial cactus | Zone 8-11 | Pads and fruits edible; fiber, vitamin C, magnesium; glycemic-friendly |
| Psin wild rice | Zizania palustris | Aquatic | Annual aquatic grass | Zone 3-8 | Nutritious whole grain; high in protein and minerals |
| Purple Collard Tree (cutting) | Brassica oleracea var. acephala (tree collards) | | Perennial | Zone 8-10 | Nutrient-dense leafy greens year-round in mild climates |
| Purslane | Portulaca oleracea | Already have seeds | Annual | Zone 2-11 | Supplies omega-3 (ALA), vitamins A/C, minerals; succulent, crunchy leaves |
| Rutabaga | Brassica napus var. napobrassica | https://store.experimentalfarmnetwork.org/products/rutabangin-rutabaga-grex?_pos=1&_sid=365a8b7a1&_ss=r | Biennial (grown as annual) | Zone 3-9 | Vitamin C–rich storage root |
| Salsify | Tragopogon porrifolius | | Biennial (grown as annual) | Zone 5-9 | Fiber-rich root with unique flavor |
| Scarlet Runner Beans | Phaseolus coccineus | | Perennial vine (annual in cold climates) | Zone 7-10 | Edible pods/beans; attracts pollinators |
| Sea Buckthorn | Hippophae rhamnoides | Already in orchard | Perennial shrub | Zone 3-7 | Very high vitamin C; also vitamin E and carotenoids; edible berries and juice |
| Skirret | Sium sisarum | https://store.experimentalfarmnetwork.org/products/skirret?srsltid=AfmBOoonPys7OfNy4DBFlsvT_QnLsmzgZ9nGsZ-KCrbNVE9gyPy7jyBa | Perennial | Zone 4-9 | Sweet, parsnip-like roots; productive perennial |
| Sorghum | Sorghum bicolor | Already have seeds | Annual grass | Zone 6-10 | Staple grain; antioxidant-rich varieties |
| Spirulina | Arthrospira platensis | Aquatic | Tender perennial (cultured) | Zone N/A | Very high protein; iron; B vitamins (note: B12 present mostly as inactive analog) |
| Stinging Nettle | Urtica dioica | | Perennial herb | Zone 3-10 | Iron- and mineral-rich greens; tea; fiber crop |
| Sweet Potato | Ipomoea batatas | Make slips from sweet potato | Tender perennial (grown as annual) | Zone 8-11 | High in beta-carotene (vitamin A) and fiber |
| Tamarillo | Solanum betaceum | | Tender perennial shrub/tree | Zone 10-11 | Vitamin C–rich tangy fruit |
| Tepary Bean | Phaseolus acutifolius | FarmDirectSeeds | Annual legume | Zone 5-11 | High vegetable protein and fiber; drought-tolerant staple bean |
| Wild Lettuce | Lactuca virosa | | Biennial herb | Zone 6-9 | Leaves and latex traditionally used for calming |
| Winged bean | Psophocarpus tetragonolobus | True Leaf Market | Perennial vine (grown as annual in cooler zones) | Zone 9-12 | Very protein-rich; multipurpose plant; nitrogen fixer |
| Wolffia | Wolffia spp. | Aquatic | Aquatic perennial | Zone 5-11 | Very high protein biomass; potential food/feed |
| Wormwood | Artemisia absinthium | Dave’s garden seeds | Perennial herb | Zone 4-9 | Bitter aromatic used sparingly as culinary/aperitif flavor; stimulates digestion |
| Yarrow | Achillea millefolium | Already have seeds | Perennial herb | Zone 3-9 | Aromatic leaves/flowers used as a culinary herb/tea; digestive and bitter-tonic qualities |
| Udo | Aralia cordata | rhizomes are best | Perennial herb | Zone 4-8 | |

Found on:

- https://www.youtube.com/@LostNatureVault

- https://www.youtube.com/@LostPlantRemedies

- https://www.youtube.com/@ReclaimedNature

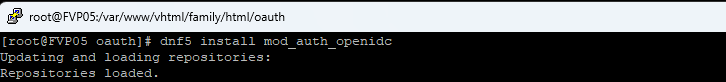

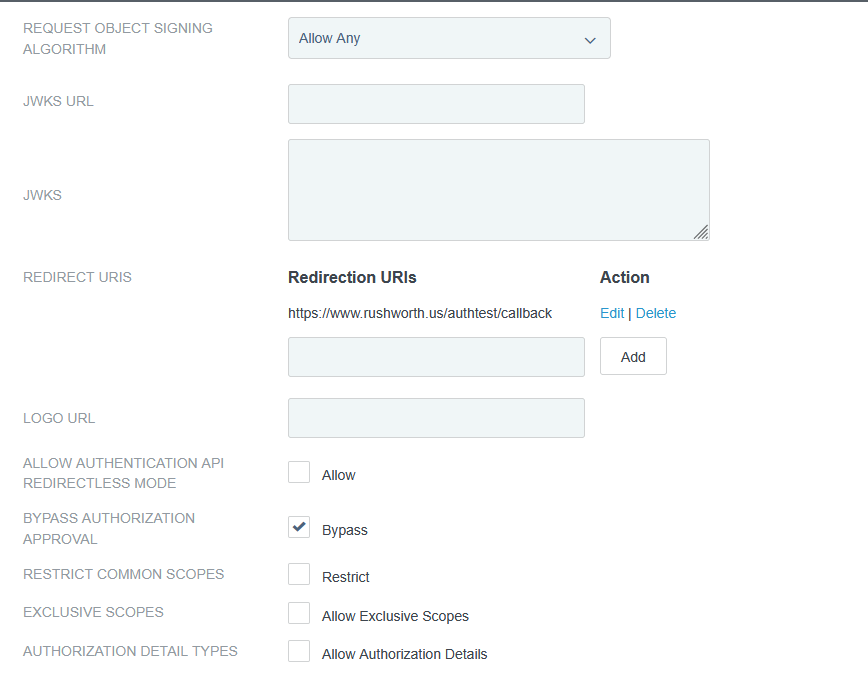

Apache OIDC Authentication to PingFederate (or PingID) Using OIDC

This is kind of a silly update to my attempt to document using mod_auth_openidc in Apache. At the time, I didn’t know who set up the PingFederate side of the connection, so I just used Google as the authentication provider. Five years later, I am one of the people setting up the connections and can finally finish the other side. So here is an update — now using PingFederate as the OIDC/OAUTH provider.

OAUTH Client Setup – Apache

First, make sure mod_auth_openidc is installed

In your Apache config, you can add authentication to the entire site or just specific paths under the site. In this example, we are creating an authenticated sub-directory at /authtest

In the virtual host, I am adding an alias for the protected path as /authtest, configuring the directory, and configuring the location to require valid-user using openid-connect. I am then configuring the OIDC connection.

The OIDCClientID and OIDCClientSecret will be provided to you after the connection is set up in PingID. Just put placeholders in until the real values are known.

The OIDCRedirectURI needed to be a path under the protected directory for me – the Apache module handles the callback. Provide this path on the OIDC connection request.

The OIDCCryptoPassphrase just needs to be a long pseudo-random string. It can include special characters.

# Serve /authtest from local filesystem
Alias /authtest "/var/www/vhtml/sandbox/authtest/"
<Directory "/var/www/vhtml/sandbox/authtest">
    Options -Indexes +FollowSymLinks
    AllowOverride None
    Require all granted
</Directory>

# mod_auth_openidc configuration for Ping (PingFederate/PingID)
# The firewall will need to be configured to allow web server to communicate with this host
OIDCProviderMetadataURL https://login-dev.windstream.com/.well-known/openid-configuration

# The ID and secret will be provided to you
OIDCClientID d5d53555-7525-4555-a565-b525c59545d5
OIDCClientSecret p78…Q2kxB

# Redirect/callback URI – provide this in the request form for the callback URL
OIDCRedirectURI https://www.rushworth.us/authtest/callback

# Session/cookie settings – you make up the OIDCCryptoPassphrase
OIDCCryptoPassphrase "…T9y"
OIDCCookiePath /authtest
OIDCSessionInactivityTimeout 3600
OIDCSessionMaxDuration 28800

# Scopes and client auth
OIDCScope "openid profile email"
OIDCRemoteUserClaim preferred_username
OIDCProviderTokenEndpointAuth client_secret_basic

# If Ping's TLS cert at https://localhost:9031 isn't trusted by the OS CA store,
# install the proper CA chain, or temporarily disable validation (not recommended long-term):
# OIDCSSLValidateServer Off

# Protect the URL path with OIDC
<Location /authtest>
    AuthType openid-connect
    Require valid-user
    OIDCUnAuthAction auth
</Location>

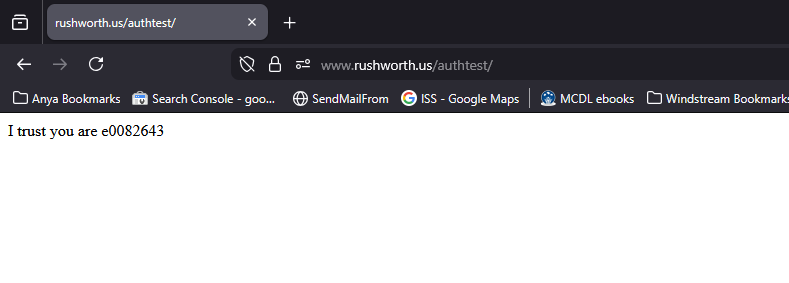
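For the OIDCCryptoPassphrase value, one option is to let Python's `secrets` module generate the string. This is just a sketch; the function name and the 48-byte default are arbitrary choices, not something the module requires.

```python
import secrets


def make_crypto_passphrase(num_bytes: int = 48) -> str:
    """Return a URL-safe pseudo-random string built from num_bytes of randomness."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Paste the printed value into the OIDCCryptoPassphrase directive.
    print(make_crypto_passphrase())
```

The output only contains letters, digits, `-`, and `_`, which avoids any quoting questions in the Apache config.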

Sample web code for the “protected” page if you want to use the user’s ID. The user’s email is found at $_SERVER['OIDC_CLAIM_email']

[lisa@fedora conf.d]# cat /var/www/vhtml/sandbox/authtest/index.php

<?php
if( isset($_SERVER['OIDC_CLAIM_iss']) && $_SERVER['OIDC_CLAIM_iss'] == "https://login-dev.windstream.com"){
    echo "I trust you are " . $_SERVER['OIDC_CLAIM_username'] . "\n";
}
else{
    print "Not authenticated ... \n";
    print "<UL>\n";
    foreach($_SERVER as $key_name => $key_value) {
        print "<LI>" . $key_name . " = " . $key_value . "\n";
    }
    print "</UL>\n";
}
?>

Results on the web page – user will be directed to PingID to authenticate, and you will verify that login-dev.windstream.com (or login.windstream.com in production) has authenticated them as the OIDC_CLAIM_username value:

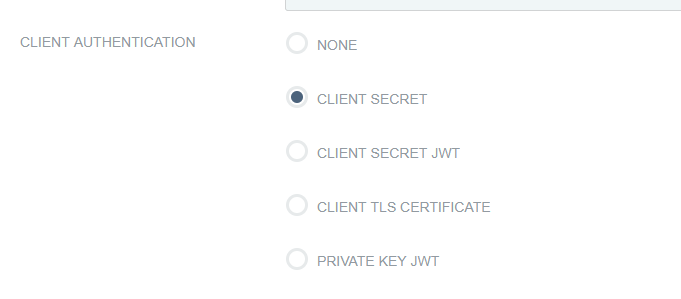

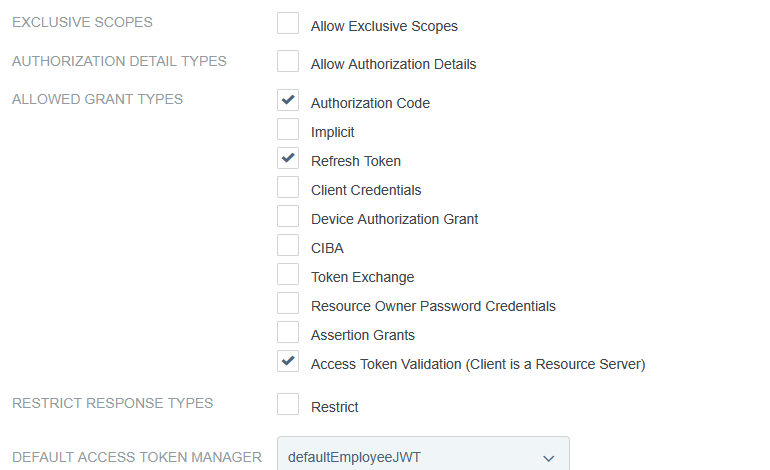
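The same issuer check can be sketched in Python for an app running behind the same Apache config. This assumes the claims arrive as `OIDC_CLAIM_*` variables in the request environment (a WSGI `environ` dict, or `os.environ` under CGI), matching what the PHP sample reads from `$_SERVER`; the function name is made up for this example.

```python
from typing import Mapping, Optional


def authenticated_user(environ: Mapping[str, str], expected_issuer: str) -> Optional[str]:
    """Return the username claim if the issuer claim matches, otherwise None."""
    if environ.get("OIDC_CLAIM_iss") != expected_issuer:
        return None
    return environ.get("OIDC_CLAIM_username")


if __name__ == "__main__":
    import os

    user = authenticated_user(os.environ, "https://login-dev.windstream.com")
    print(f"I trust you are {user}" if user else "Not authenticated ...")
```

Swap the expected issuer for the production hostname when the connection moves out of dev.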

OAUTH Client Setup – PingID

Client auth, add redirect URLs

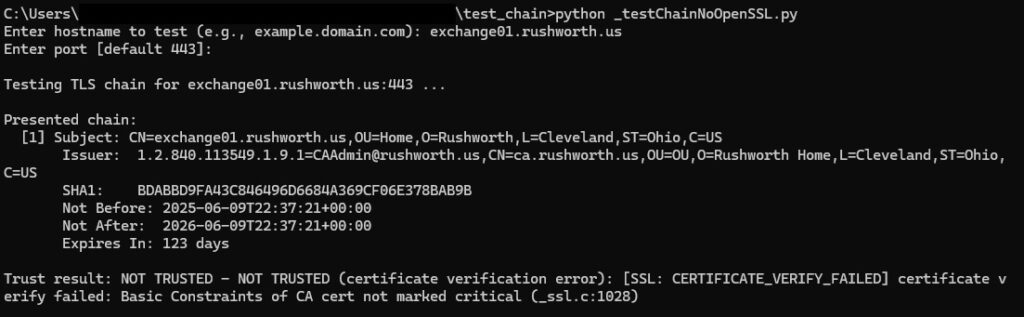

Viewing *Real* Certificate Chain

Browsers implement AIA (Authority Information Access) fetching, which “helps” by repairing the certificate chain and forming a trust path even without proper server configuration. That is great for user experience, but it causes a lot of challenges for people troubleshooting SSL connection failures from devices, old equipment, etc. It’s fine when I try it from my laptop!

This python script reports on the real certificate chain being served from an endpoint. Self-signed certificates will show as untrusted

And public certs will show the chain and show as trusted

Code:

import ssl
import socket
import datetime
import select

# Third-party modules (install: pip install pyopenssl cryptography)
try:
    from OpenSSL import SSL, crypto
    from cryptography import x509
    from cryptography.hazmat.primitives import hashes
except ImportError as e:
    raise SystemExit(
        "Missing required modules. Please install:\n"
        " pip install pyopenssl cryptography\n"
        f"Import error: {e}"
    )


def prompt(text, default=None):
    s = input(text).strip()
    return s if s else default

def check_trust(hostname: str, port: int, timeout=6.0):
    """
    Attempt a TLS connection using system trust store and hostname verification.
    Returns (trusted: bool, message: str).
    """
    try:
        ctx = ssl.create_default_context()
        with socket.create_connection((hostname, port), timeout=timeout) as sock:
            with ctx.wrap_socket(sock, server_hostname=hostname) as ssock:
                # Minimal HTTP GET to ensure we fully complete the handshake
                req = f"GET / HTTP/1.1\r\nHost: {hostname}\r\nConnection: close\r\n\r\n"
                ssock.sendall(req.encode("utf-8"))
                _ = ssock.recv(1)
        return True, "TRUSTED (system trust store)"
    except ssl.SSLCertVerificationError as e:
        return False, f"NOT TRUSTED (certificate verification error): {e}"
    except ssl.SSLError as e:
        return False, f"NOT TRUSTED (SSL error): {e}"
    except Exception as e:
        return False, f"Error connecting: {e}"

def _aware_utc(dt: datetime.datetime) -> datetime.datetime:
    """
    Ensure a datetime is timezone-aware in UTC. cryptography returns naive UTC datetimes.
    """
    if dt.tzinfo is None:
        return dt.replace(tzinfo=datetime.timezone.utc)
    return dt.astimezone(datetime.timezone.utc)


def _cert_to_info(cert: x509.Certificate):
    subj = cert.subject.rfc4514_string()
    issr = cert.issuer.rfc4514_string()
    fp_sha1 = cert.fingerprint(hashes.SHA1()).hex().upper()
    nb = _aware_utc(cert.not_valid_before_utc)
    na = _aware_utc(cert.not_valid_after_utc)
    now = datetime.datetime.now(datetime.timezone.utc)
    delta_days = max(0, (na - now).days)
    return {
        "subject": subj,
        "issuer": issr,
        "sha1": fp_sha1,
        "not_before": nb,
        "not_after": na,
        "days_to_expiry": delta_days
    }

def _do_handshake_blocking(conn: SSL.Connection, sock: socket.socket, timeout: float):
    """
    Drive the TLS handshake, handling WantRead/WantWrite by waiting with select.
    """
    deadline = datetime.datetime.now() + datetime.timedelta(seconds=timeout)
    while True:
        try:
            conn.do_handshake()
            return
        except SSL.WantReadError:
            remaining = (deadline - datetime.datetime.now()).total_seconds()
            if remaining <= 0:
                raise TimeoutError("TLS handshake timed out (WantRead)")
            r, _, _ = select.select([sock], [], [], remaining)
            if not r:
                raise TimeoutError("TLS handshake timed out (WantRead)")
            continue
        except SSL.WantWriteError:
            remaining = (deadline - datetime.datetime.now()).total_seconds()
            if remaining <= 0:
                raise TimeoutError("TLS handshake timed out (WantWrite)")
            _, w, _ = select.select([], [sock], [], remaining)
            if not w:
                raise TimeoutError("TLS handshake timed out (WantWrite)")
            continue

def fetch_presented_chain(hostname: str, port: int, timeout: float = 12.0):
    """
    Capture the presented certificate chain using pyOpenSSL.
    Returns (chain: list of {subject, issuer, sha1, not_before, not_after, days_to_expiry}, error: str or None).
    """
    # TCP connect
    try:
        sock = socket.create_connection((hostname, port), timeout=timeout)
        sock.settimeout(timeout)
    except Exception as e:
        return [], f"Error connecting: {e}"

    try:
        # TLS client context
        ctx = SSL.Context(SSL.TLS_CLIENT_METHOD)

        # Compatibility tweaks:
        # - Lower OpenSSL security level
        try:
            ctx.set_cipher_list(b"DEFAULT:@SECLEVEL=1")
        except Exception:
            pass

        # - Disable TLS 1.3 and set minimum TLS 1.2
        try:
            ctx.set_options(SSL.OP_NO_TLSv1_3)
        except Exception:
            pass
        try:
            # Ensure TLSv1.2+ (pyOpenSSL exposes set_min_proto_version on some builds)
            if hasattr(ctx, "set_min_proto_version"):
                ctx.set_min_proto_version(SSL.TLS1_2_VERSION)
        except Exception:
            pass

        # - Set ALPN to http/1.1 (some paths work better when ALPN is present)
        try:
            ctx.set_alpn_protos([b"http/1.1"])
        except Exception:
            pass

        conn = SSL.Connection(ctx, sock)
        conn.set_tlsext_host_name(hostname.encode("utf-8"))
        conn.set_connect_state()

        # Blocking mode (best effort)
        try:
            conn.setblocking(True)
        except Exception:
            pass

        # Drive handshake
        _do_handshake_blocking(conn, sock, timeout=timeout)

        # Retrieve chain (some servers only expose leaf)
        chain = conn.get_peer_cert_chain()
        infos = []
        if chain:
            for c in chain:
                der = crypto.dump_certificate(crypto.FILETYPE_ASN1, c)
                cert = x509.load_der_x509_certificate(der)
                infos.append(_cert_to_info(cert))
        else:
            peer = conn.get_peer_certificate()
            if peer is not None:
                der = crypto.dump_certificate(crypto.FILETYPE_ASN1, peer)
                cert = x509.load_der_x509_certificate(der)
                infos.append(_cert_to_info(cert))

        # Cleanup
        try:
            conn.shutdown()
        except Exception:
            pass
        finally:
            try:
                conn.close()
            except Exception:
                pass
            try:
                sock.close()
            except Exception:
                pass

        if not infos:
            return [], "No certificates captured (server did not present a chain and peer cert unavailable)"
        return infos, None
    except Exception as e:
        try:
            sock.close()
        except Exception:
            pass
        etype = type(e).__name__
        emsg = str(e) or "no message"
        return [], f"TLS handshake or chain retrieval error: {etype}: {emsg}"

def main():
    hostname = prompt("Enter hostname to test (e.g., example.domain.com): ")
    if not hostname:
        print("Hostname is required.")
        return
    port_str = prompt("Enter port [default 443]: ", "443")
    try:
        port = int(port_str)
    except ValueError:
        print("Invalid port.")
        return

    print(f"\nTesting TLS chain for {hostname}:{port} ...")
    chain, err = fetch_presented_chain(hostname, port)

    print("\nPresented chain:")
    if err:
        print(f" [ERROR] {err}")
    elif not chain:
        print(" [No certificates captured]")
    else:
        for i, ci in enumerate(chain, 1):
            print(f" [{i}] Subject: {ci['subject']}")
            print(f" Issuer: {ci['issuer']}")
            print(f" SHA1: {ci['sha1']}")
            nb_val = ci.get("not_before")
            na_val = ci.get("not_after")
            nb_str = nb_val.isoformat() if isinstance(nb_val, datetime.datetime) else str(nb_val)
            na_str = na_val.isoformat() if isinstance(na_val, datetime.datetime) else str(na_val)
            print(f" Not Before: {nb_str}")
            print(f" Not After: {na_str}")
            dte = ci.get("days_to_expiry")
            if dte is not None:
                print(f" Expires In: {dte} days")

    trusted, msg = check_trust(hostname, port)
    print(f"\nTrust result: {'TRUSTED' if trusted else 'NOT TRUSTED'} - {msg}")


if __name__ == "__main__":
    main()

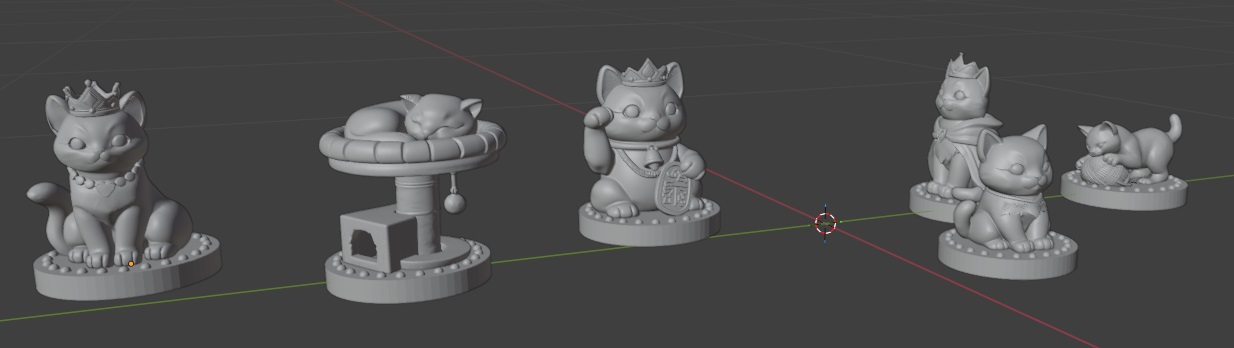
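Once the presented chain is captured, a quick name-based check can point at where the chain is incomplete. The sketch below works on the list of dicts returned by `fetch_presented_chain` (only the `subject` and `issuer` keys are used). The helper names are made up, and comparing names is only a heuristic: real validation checks signatures, so treat the output as a hint rather than a verdict.

```python
from typing import Dict, List, Optional


def chain_breaks(chain: List[Dict[str, str]]) -> List[int]:
    """Return 1-based positions whose issuer is not the subject of the next certificate."""
    return [
        position
        for position, (cert, parent) in enumerate(zip(chain, chain[1:]), start=1)
        if cert["issuer"] != parent["subject"]
    ]


def unanchored_issuer(chain: List[Dict[str, str]]) -> Optional[str]:
    """Return the issuer the client must already trust (or fetch via AIA).

    Returns None for an empty chain or when the last certificate is self-issued.
    """
    if not chain:
        return None
    last = chain[-1]
    return None if last["issuer"] == last["subject"] else last["issuer"]


if __name__ == "__main__":
    # Example data only: a leaf served without its intermediate.
    sample = [{"subject": "CN=www.example.test", "issuer": "CN=Example Intermediate CA"}]
    print("Breaks at:", chain_breaks(sample))
    print("Client must supply:", unanchored_issuer(sample))
```

If the name returned by `unanchored_issuer` is an intermediate rather than a root in the client's trust store, the server is probably relying on browsers to fill in the gap.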

Blender – Repairing Corrupted Mesh

In object mode, select the object you want to repair. In the python console, run:

import bpy

bpy.context.object.data.validate(verbose=True)
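To run the same repair across every mesh in the file instead of just the selected object, something like the following could be pasted into the console. It is a sketch: `validate_meshes` is a made-up helper that accepts any iterable of objects exposing `validate()`, `update()`, and `name` the way Blender meshes do, so `bpy` itself is only needed at the call site.

```python
def validate_meshes(meshes, verbose=True):
    """Run validate() on each mesh and return the names of meshes that were changed."""
    repaired = []
    for mesh in meshes:
        # Mesh.validate() returns True when it had to correct invalid geometry.
        if mesh.validate(verbose=verbose):
            mesh.update()
            repaired.append(mesh.name)
    return repaired


# Inside Blender's Python console:
#   import bpy
#   print(validate_meshes(bpy.data.meshes))
```

An empty list back means no mesh needed correcting.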