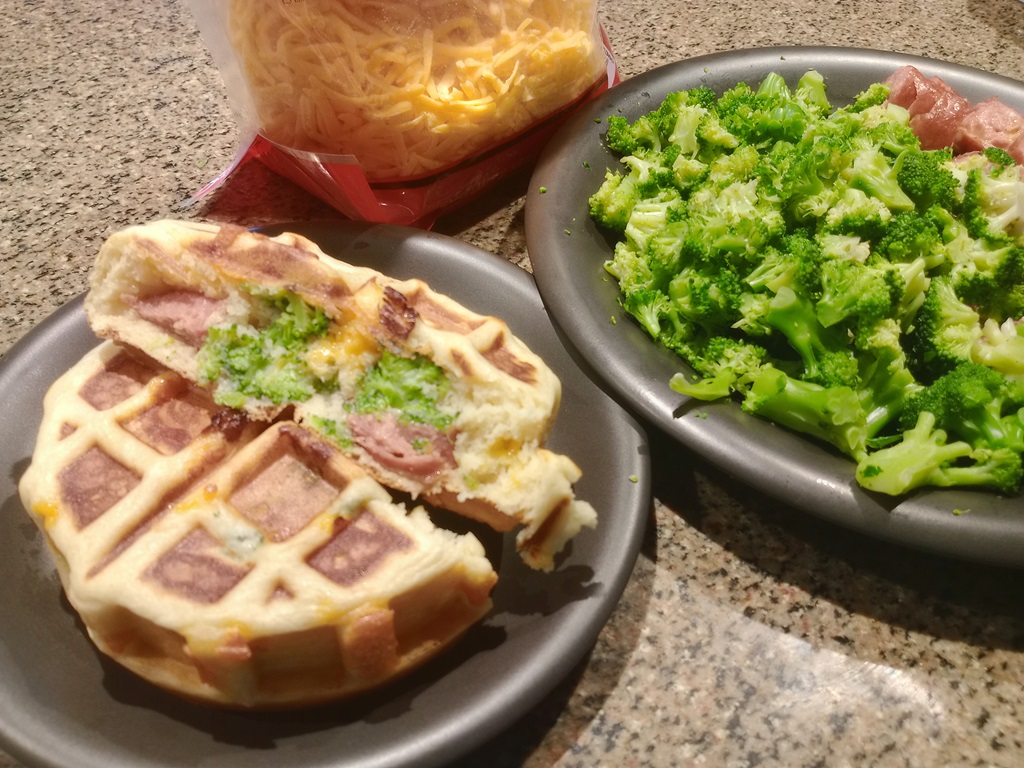

Sausage, broccoli, cheddar stufflers

Growing Space

Apples progressing

Philly Cheesesteak Pizza

Apple Progress

The Void

We’ve been cleaning up the farm … but I haven’t taken any pictures for about a week.

Read Everything

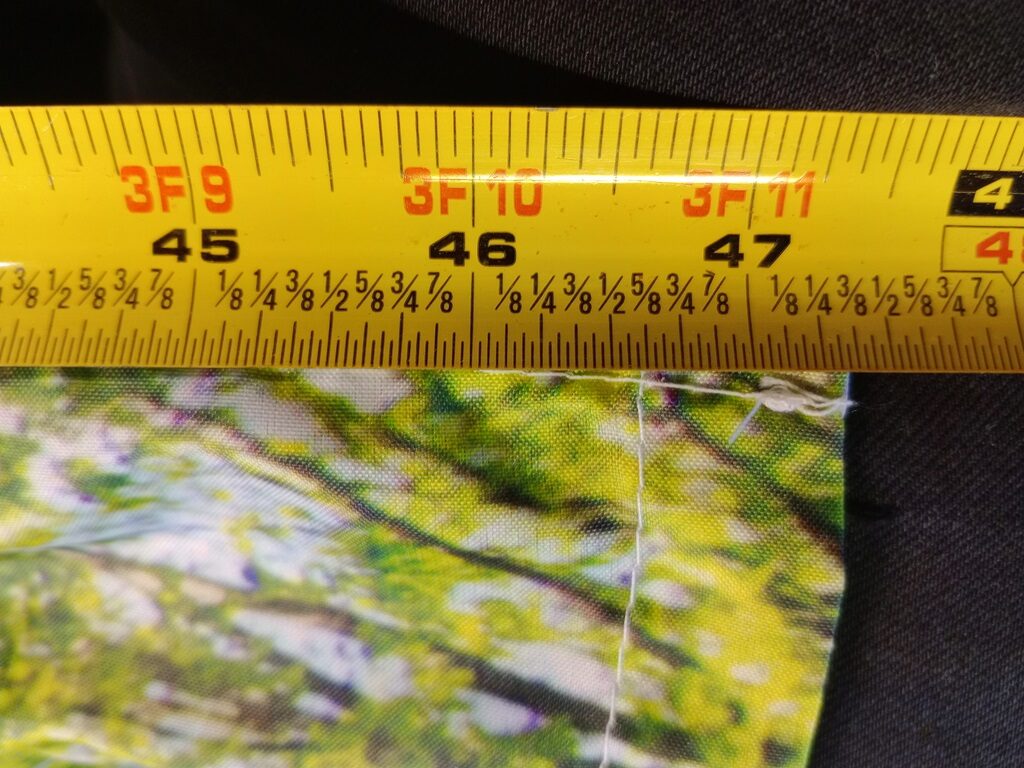

We bought a fabric thing listed as 1′ x 4′, which seems like it would be the size of the item. It did not fit our one-foot-by-four-foot thing … so I measured it and was disappointed to discover it was not quite four feet long (it’s about 1/8″ short of one foot as well!). I read through the entire product listing and discovered a “dimensions” entry about eight bullet points down. It claims the length will be 47 3/8″ … pretty much exactly what I am measuring. So, yeah, read the ten paragraphs of description, because sometimes they hide important details in there!