Because I made two not-permitted posts since Anya started the challenge, she’s extended it by ten days! October 7th is the last day of Anya’s rule about what I can post on my own website … the one I set up for myself, on my server, so I would have somewhere to store my stuff. Yeah.

Month: September 2022

Pumpkin Muffins

Ingredients

- 1 cup brown sugar

- 1 cup pumpkin puree

- 1 cup buttermilk

- 1/4 cup vegetable oil

- 2 large eggs

- 1 tsp vanilla

- 2 1/4 cups all purpose flour

- 2 tsp baking powder

- 2 tsp ground cinnamon

- 1/4 tsp nutmeg

- 1/4 tsp ground cloves

- 1/4 tsp ground ginger

- 1/2 tsp baking soda

- 1/2 tsp salt

Topping — 2 T melted butter, 2 T maple sugar, 1 tsp ground cinnamon

Method

- Preheat the oven to 350F

- Whisk together brown sugar, pumpkin puree, buttermilk, oil, eggs, and vanilla.

- In another bowl, whisk together flour, baking powder, baking soda, salt, and spices.

- Gently fold dry ingredients into wet ingredients.

- Divide batter between 12 muffin cups. Bake about 20 minutes, test with toothpick or skewer.

- Allow to cool for 10 minutes then remove to a wire rack to cool.

- Stir together all of the topping ingredients and brush on top of muffins.

Fairy Eggs

The chickens we hatched earlier this year have started laying their first eggs. Our first chicken to lay an egg (Queenington) laid a large egg, and the rest of our egg layers followed with fairly normal chicken-egg sized eggs. I didn’t know that it was common for chickens to start off laying small eggs (called fairy eggs) until we got the Bresse hens. Fairy eggs are not great for hatching (a really tiny chick develops and often cannot even get out of the egg), but the eggs are perfectly edible. I think we’ll be making pickled eggs with this year’s tiny eggs.

Pickled Eggs


Course: Snacks, Sides

Cuisine: American

Difficulty: Easy

10 servings

15 minutes

10 minutes

Ingredients

20 eggs

1 cup apple cider vinegar

1 cup water

1/4 cup maple sugar

1 tsp salt

2 cloves garlic, crushed

1 bay leaf, crushed

Spices – dill, onion

Method

- Hard boil eggs and peel.

- In a saucepan, mix vinegar, water, sugar, spices, and salt. Boil, reduce to low. Mix in garlic and bay leaf. Remove from heat.

- In a glass jar, place eggs and pour vinegar mixture. Add spices — onion, dill, etc.

- Seal and store in refrigerator.

OpenSearch Evaluation Overview

What is ElasticSearch?

ElasticSearch, based on the Lucene search software, is a distributed search and analytics application which ingests, stores, and indexes data. Kibana is a web-based front-end providing user access to data stored within ElasticSearch.

What is OpenSearch?

In short, it’s the same but different. OpenSearch is also based on the Lucene search software, is designed to be a distributed search and analytics application, and ingests/stores/indexes data. If it’s essentially the same thing, why does OpenSearch exist? ElasticSearch was initially licensed under the open-source Apache 2.0 license – a rather permissive free software license. ElasticCo did not agree with how their software was being used by Amazon; and, in 2021, the license for ElasticSearch was changed to Server Side Public License (SSPL). One of the requirements of SSPL is that anyone who implements the software and sells their implementation as a service needs to publish their source code under the SSPL license – not just changes made to the original program but all other software a user would require to run the software-as-a-service environment for themselves. Amazon used ElasticSearch for their Amazon Elasticsearch Service offering, but was unable/unwilling to continue doing so under the new license terms. In April of 2021, Amazon Web Services created a fork of ElasticSearch as the basis for OpenSearch.

Differences Between OpenSearch and ElasticSearch

After the OpenSearch fork was created, the product roadmap for ElasticSearch was driven by ElasticCo and the roadmap for OpenSearch was community driven (with significant oversight and input from Amazon) – this means the products are not identical although they provide the same core functionality. Elastic publishes a list of features unique to ElasticSearch, and the underlying machine learning algorithms are different. However, the important components of the “unique” feature list have been implemented in OpenSearch over time.

The biggest differences are price and support. OpenSearch is free software – there is no license to purchase to unlock features. It does appear that Amazon has an internal iteration of OpenSearch, as their as-a-service offering provides features not available in the open-source OpenSearch code base, but that is only available to cloud customers. ElasticCo offers ElasticSearch as free software with a limited feature set. One critical limitation is user authentication mechanisms – we are unable to implement PingID as an authentication source with the free feature set. Advanced features that we do not use today – machine learning based anomaly detection, as an example – are also unavailable in the free iteration of ElasticSearch. With an ElasticSearch license, we would also get vendor support. OpenSearch does not offer vendor support, although there are third-party companies that will provide support services.

Both OpenSearch and ElasticSearch have community-based support forums available – I have gotten responses from developers on both forums for questions regarding usage nuances.

Salient Feature Comparison

Most companies have a list differentiating their product from the products offered by competitors – but the important thing is how the products differ as it relates to how an individual customer uses the product. A car that can have a fresh cup of espresso waiting for you as you leave for work might be amazing to some people, but those who don’t drink coffee won’t be nearly as impressed. So how do the two products compare for Windstream?

Data ingestion – Data is ingested using the same mechanisms – ElasticCo’s filebeat and logstash are important components of data ingestion, and these components remain unchanged. This means existing processes that feed data into ElasticSearch today would not need to be changed to begin ingesting data into OpenSearch.

Data storage – Both products distribute searchable data over a cluster of servers. Data storage is “tiered” as hot, warm, and cold which allows less used data to reside on slower, less expensive resources. We have confirmed that ingested data is properly housed on cluster nodes designated for ‘hot’ storage and moved to ‘warm’ and ‘cold’ storage as dictated by defined policies. The item count to size ratio is similar between both products (i.e. storing ten million documents takes about the same amount of disk space). OpenSearch provides the ability to alert on transition failures (moving from hot to warm, for instance) which will reduce the amount of manual “health checking” required for the environment.

Search and aggregation – Both products allow both GUI and API searches of indexed data. Data can be aggregated as it is searched – returning the max/min/average value from a search, a count of records matching search criteria, or creating sub-aggregations. ElasticSearch does have aggregations not available in OpenSearch, although these could be handled through custom scripted aggregations, and many have corresponding GitHub issues requesting that the aggregation be added to OpenSearch (e.g. weighted average, geohash grid, or geotile grid).

| Aggregation | ElasticSearch | OpenSearch |
| --- | --- | --- |
| auto-interval date histogram | x | |
| categorize text | x | |
| children | x | |
| composite | x | |
| frequent items | x | |
| geohex grid | x | |
| geotile grid | x | |
| ip prefix | x | |
| multi terms | x | |
| parent | x | |
| random sampler | x | |
| rare terms | x | |
| terms | x | |
| variable width histogram | x | |
| boxplot | x | |
| geo-centroid | x | |
| geo-line | x | |
| median absolute deviation | x | |
| rate | x | |
| string stats | x | |
| t-test | x | |
| top metrics | x | |
| weighted avg | x | |

Alerting – ElastAlert2 can be used to provide the same index monitoring and alerting functionality that ElastAlert currently provides with ElasticSearch. Additionally, OpenSearch includes a built-in alerting capability that might allow us to streamline the functionality into the base OpenSearch implementation.

API Access – Both ElasticSearch and OpenSearch provide API-based access to data. Queries to the ElasticSearch API endpoint returned expected data when directed to the OpenSearch API endpoint. The ElasticSearch python module can be used to access OpenSearch data, although there is a specific OpenSearch module as well.

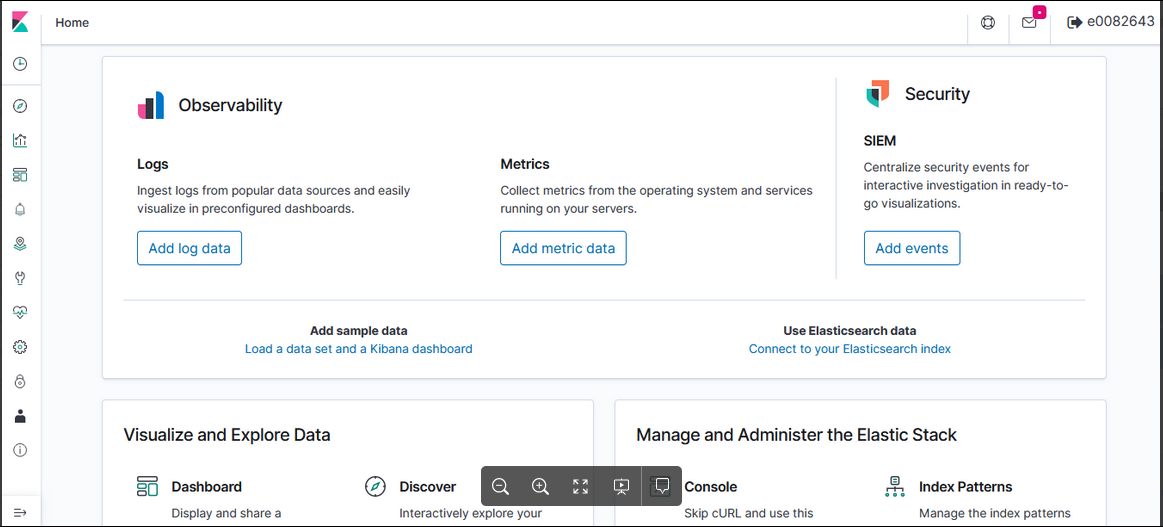

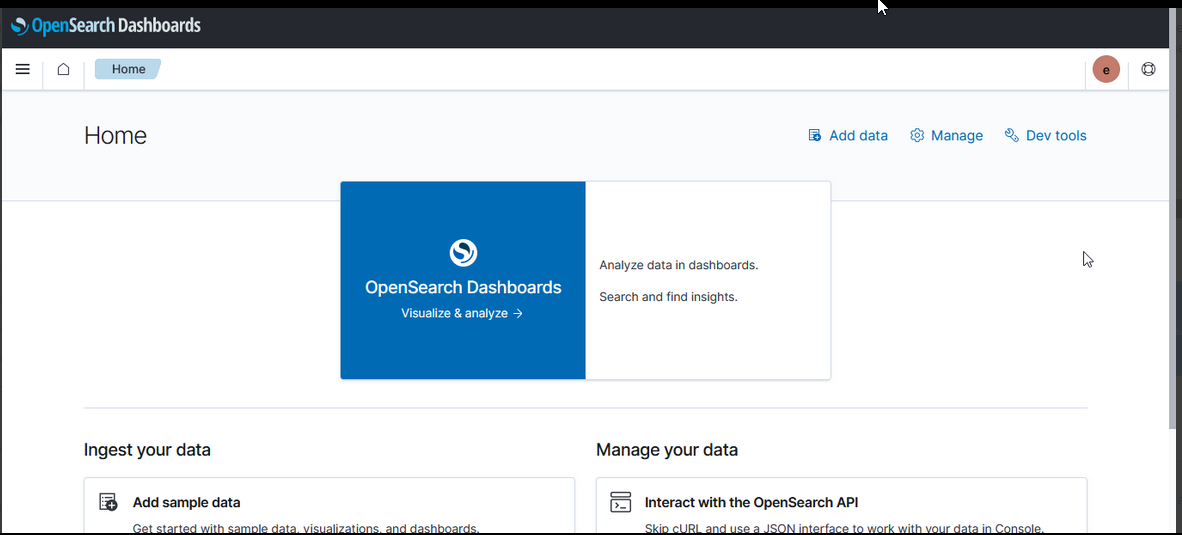
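As a rough illustration of the search, aggregation, and API points above, the sketch below builds a request body using only the Python standard library. The index pattern, the `bytes` field, and the time window are hypothetical placeholders rather than anything from our environment; the resulting dictionary is the sort of body that either Python client accepts in its search call.

```python
import json


def build_stats_query(field, since="now-24h", interval="1h"):
    """Build a search body returning min/max/avg of a numeric field per time bucket.

    The field name and time values are placeholders chosen for this example.
    """
    return {
        "size": 0,  # only aggregation results are wanted, not the matching documents
        "query": {"range": {"@timestamp": {"gte": since}}},
        "aggs": {
            "per_interval": {
                "date_histogram": {"field": "@timestamp", "fixed_interval": interval},
                # sub-aggregations are computed inside each histogram bucket
                "aggs": {
                    "min_value": {"min": {"field": field}},
                    "max_value": {"max": {"field": field}},
                    "avg_value": {"avg": {"field": field}},
                },
            }
        },
    }


body = build_stats_query("bytes")
print(json.dumps(body, indent=2))

# With a client installed, the same body could be sent to either product, e.g.:
#   from opensearchpy import OpenSearch
#   client = OpenSearch(hosts=["https://opensearch.example.com:9200"])
#   client.search(index="logstash-*", body=body)
```

Keeping the body as a plain dictionary means the same request can be replayed against both clusters when comparing results.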

UX – ElasticSearch allows users to search and visualize data through Kibana; OpenSearch provides graphical user access in OpenSearch Dashboards. While the “look and feel” of the GUI differs (Kibana 8 looks different from the Kibana 7 we use today, too), the user functionality remains the same.

| Kibana 7.7 | OpenSearch Dashboards 2.2 |


Kibana uses “KQL” – Kibana Query Language – to compose searches while OpenSearch Dashboards uses “DQL” – Dashboards Query Language – but queries used in Kibana worked in OpenSearch Dashboards without modification.

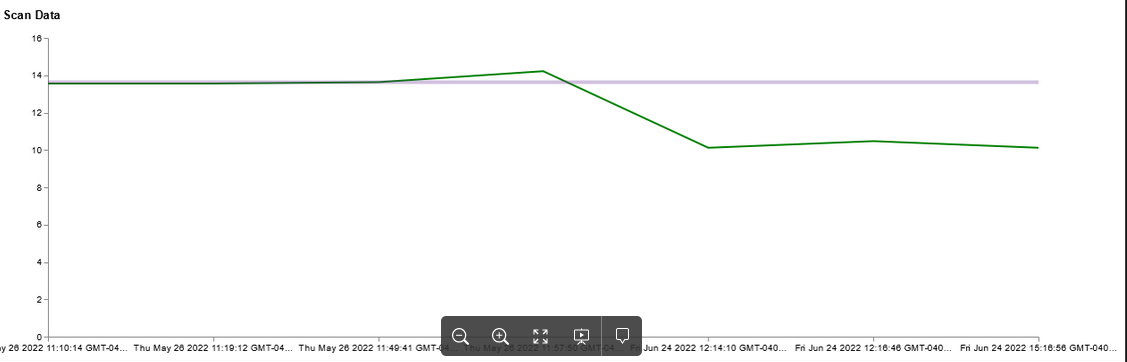

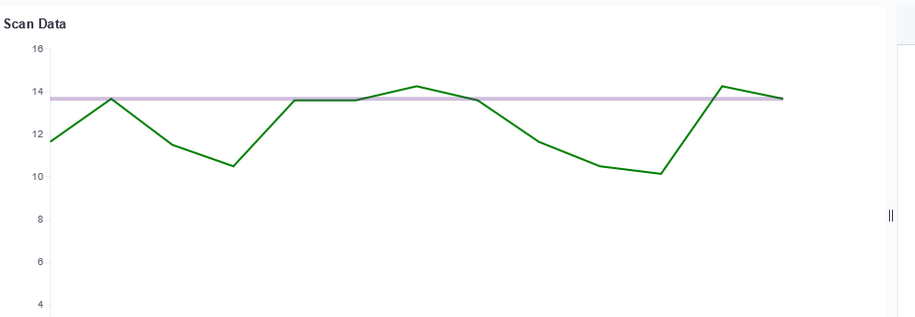

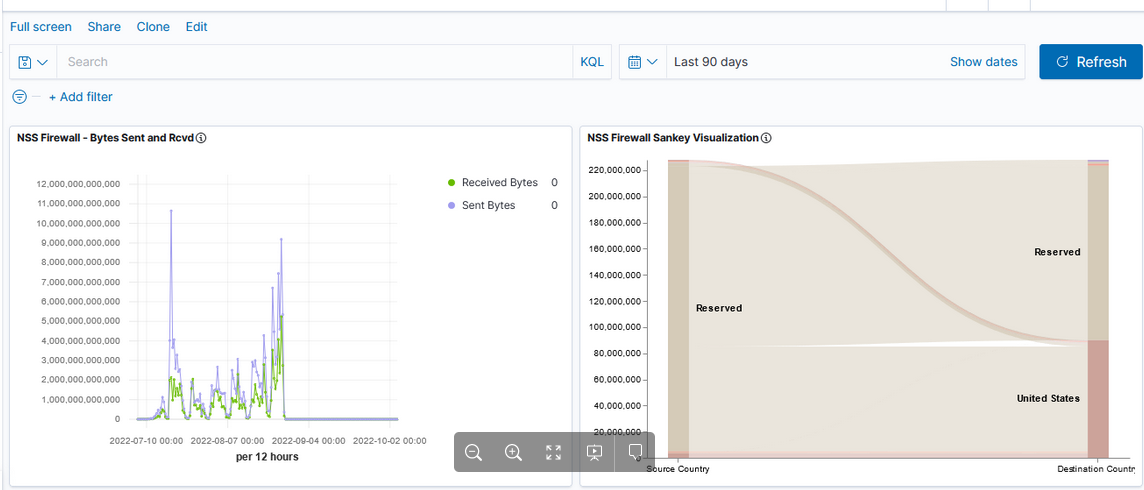

Currently used visualizations are available in both Kibana and OpenSearch Dashboards.

| Kibana Visualization | OpenSearch Dashboards Visualization |


But there are some currently unused visualizations that are unique to each product.

| Visualization | Kibana | OpenSearch Dashboards |
| --- | --- | --- |
| Area | x | x |
| Controls | x | x |
| Data Table | x | x |
| Gauge | x | x |
| Goal | x | x |
| Heat Map | x | x |
| Horizontal Bar | x | x |
| Lens | x | |
| Line | x | x |
| Maps | x | |
| Markdown | x | x |
| Metric | x | x |
| Pie | x | x |
| Tag Cloud | x | x |
| Timeline | x | x |
| TSVB | x | x |
| Vega | x | x |
| Vertical Bar | x | x |
| Coordinate Map | x | |
| Gantt Chart | x | |
| Region Map | x | |

Dashboards can be used to group visualizations.

| Kibana | OpenSearch Dashboards |


New features will be available in either OpenSearch or a licensed installation of ElasticSearch. Currently data is either retained as written or aged out of the system to save disk space. Either path allows us to roll up data – as an example retaining the total number of users per month or total bytes per month instead of retaining each detailed record. Additionally, we will be able to use the “anomaly detection” which is able to monitor large volumes of index data and highlight unusual events. Both newer ElasticSearch versions and OpenSearch offer a Tableau connector which may make data stored in the platform more accessible to users.
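To make the roll-up idea concrete, here is a small conceptual sketch in plain Python. It is not the rollup feature of either product, just an illustration of what is kept and what is discarded; the record layout (`timestamp`, `user`, `bytes`) is invented for the example.

```python
from collections import defaultdict


def roll_up_monthly(records):
    """Collapse detailed records into per-month user counts and byte totals."""
    totals = defaultdict(lambda: {"users": set(), "bytes": 0})
    for record in records:
        month = record["timestamp"][:7]  # "YYYY-MM" prefix of an ISO 8601 timestamp
        totals[month]["users"].add(record["user"])
        totals[month]["bytes"] += record["bytes"]
    return {
        month: {"user_count": len(data["users"]), "total_bytes": data["bytes"]}
        for month, data in sorted(totals.items())
    }


# Hypothetical detailed records; only the summaries survive the roll-up.
detail = [
    {"timestamp": "2022-08-30T10:00:00", "user": "alice", "bytes": 100},
    {"timestamp": "2022-09-01T09:15:00", "user": "alice", "bytes": 250},
    {"timestamp": "2022-09-02T11:30:00", "user": "bob", "bytes": 50},
    {"timestamp": "2022-09-03T08:45:00", "user": "alice", "bytes": 25},
]
summary = roll_up_monthly(detail)
print(summary)
```

Four detailed records become two summary rows, which is where the disk savings come from; the trade-off is that the individual events can no longer be searched.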

Configuring OpenSearch 2.x with OpenID Authentication

Sorry, again, Anya … I really mean it this time. Restart your ‘no posting about computer stuff’ timer!

I was able to cobble together a functional configuration to authenticate users through an OpenID identity provider. This approach combined the vendor documentation, ten different forum posts, and some debugging of my own. Which is to say … not immediately obvious.

Importantly, you can enable debug logging on just the authentication component. Trying to read through the logs when debug logging is set globally is unreasonable. To enable debug logging for JWT, add the following to config/log4j2.properties

logger.securityjwt.name = com.amazon.dlic.auth.http.jwt

logger.securityjwt.level = debug

On the OpenSearch Dashboard server, add the following lines to ./opensearch-dashboards/config/opensearch_dashboards.yml

opensearch_security.auth.type: "openid"

opensearch_security.openid.connect_url: "https://IdentityProvider.example.com/.well-known/openid-configuration"

opensearch_security.openid.client_id: "<PRIVATE>"

opensearch_security.openid.client_secret: "<PRIVATE>"

opensearch_security.openid.scope: "openid "

opensearch_security.openid.header: "Authorization"

opensearch_security.openid.base_redirect_url: "https://opensearch.example.com/auth/openid/login"
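Before restarting anything, it can help to confirm that the connect_url actually serves a usable discovery document. The sketch below is one way to check, using only the standard library. The list of expected keys is my own shortlist of standard OpenID Connect discovery fields, and the sample document and its endpoint paths are made up for the example.

```python
import json
from urllib.request import urlopen

# Standard discovery fields that the login redirect and token validation rely on.
EXPECTED_KEYS = ("issuer", "authorization_endpoint", "token_endpoint", "jwks_uri")


def fetch_discovery_document(connect_url, timeout=10):
    """Download and parse the JSON document behind connect_url."""
    with urlopen(connect_url, timeout=timeout) as response:
        return json.load(response)


def missing_keys(document):
    """Return the expected keys that are absent or empty in the document."""
    return [key for key in EXPECTED_KEYS if not document.get(key)]


# Hypothetical, truncated document standing in for a real provider's response.
sample = {
    "issuer": "https://IdentityProvider.example.com",
    "authorization_endpoint": "https://IdentityProvider.example.com/as/authorization.oauth2",
    "token_endpoint": "https://IdentityProvider.example.com/as/token.oauth2",
}
print(missing_keys(sample))
```

Against a live provider, the check would be `missing_keys(fetch_discovery_document(url))` with the same URL used for opensearch_security.openid.connect_url.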

On the OpenSearch servers, in ./config/opensearch.yml, make sure you have defined plugins.security.ssl.transport.truststore_filepath

While this configuration parameter is listed as optional, something needs to be in there for the OpenID stuff to work. I just linked the cacerts from our JDK installation into the config directory.

If needed, also configure the following additional parameters. Since I was using the cacerts truststore from our JDK, I was able to use the defaults.

| Parameter | Description |
| --- | --- |
| plugins.security.ssl.transport.truststore_type | The type of the truststore file, JKS or PKCS12/PFX. Default is JKS. |
| plugins.security.ssl.transport.truststore_alias | Alias name. Optional. Default is all certificates. |
| plugins.security.ssl.transport.truststore_password | Truststore password. Default is changeit. |

Configure the openid_auth_domain in the authc section of ./opensearch/config/opensearch-security/config.yml

    openid_auth_domain:
      http_enabled: true
      transport_enabled: true
      order: 1
      http_authenticator:
        type: "openid"
        challenge: false
        config:
          openid_connect_idp:
            enable_ssl: true
            verify_hostnames: false
          openid_connect_url: https://idp.example.com/.well-known/openid-configuration
      authentication_backend:
        type: noop

Note that subject_key and roles_key are not defined. When I had subject_key defined, all user logon attempts failed with the following error:

[2022-09-22T12:47:13,333][WARN ][c.a.d.a.h.j.AbstractHTTPJwtAuthenticator] [UOS-OpenSearch] Failed to get subject from JWT claims, check if subject_key 'userId' is correct.

[2022-09-22T12:47:13,333][ERROR][c.a.d.a.h.j.AbstractHTTPJwtAuthenticator] [UOS-OpenSearch] No subject found in JWT token

[2022-09-22T12:47:13,333][WARN ][o.o.s.h.HTTPBasicAuthenticator] [UOS-OpenSearch] No 'Basic Authorization' header, send 401 and 'WWW-Authenticate Basic'
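When the log complains that the subject cannot be found, it helps to look at which claims the identity provider really puts in the token. Here is a minimal sketch that decodes the payload of a JWT without verifying the signature, so it is for inspection only. The sample token is fabricated in the code and its claims are invented; a real token would come from your own login attempt.

```python
import base64
import json


def decode_jwt_claims(token):
    """Return the claims of a JWT without verifying its signature (inspection only)."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore the base64 padding JWTs strip off
    return json.loads(base64.urlsafe_b64decode(payload))


def _encode_segment(data):
    """Encode a dict the way a JWT segment is encoded (helper for the sample token)."""
    raw = json.dumps(data).encode("utf-8")
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


# Fabricated token with invented claims, standing in for one issued by the IdP.
sample_token = ".".join([
    _encode_segment({"alg": "none"}),
    _encode_segment({"sub": "jdoe", "iss": "https://idp.example.com"}),
    "signature-placeholder",
])

claims = decode_jwt_claims(sample_token)
print(sorted(claims))
print("userId" in claims)
```

In this made-up token there is no `userId` claim at all, which is the kind of mismatch that would produce the "Failed to get subject from JWT claims" warning.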

Finally, use securityadmin.sh to load the configuration into the cluster:

/opt/opensearch-2.2.1/plugins/opensearch-security/tools/securityadmin.sh --diagnose -cd /opt/opensearch/config/opensearch-security/ -icl -nhnv -cacert /opt/opensearch-2.2.1/config/certs/root-ca.pem -cert /opt/opensearch-2.2.1/config/certs/admin.pem -key /opt/opensearch-2.2.1/config/certs/admin-key.pem -h UOS-OpenSearch.example.com

Restart OpenSearch and OpenSearch Dashboards. Then, in the role mappings, add the external user IDs as custom options.

When logging into the Dashboard server, users will be redirected to the identity provider for authentication. In our sandbox, we have two Dashboard servers — one for general users which is configured for external authentication and a second for locally authenticated users.

Firewalld — Adding and Removing a Forwarding Rule

(Sorry, Anya … after today, I’ll try to not post anything about computers for three days!) Linux restricts non-root users from opening ports <1024. It’s generally a good idea not to run your services as root. Which means, unfortunately, we end up running a lot of services on nonstandard ports (so frequently that 1389 and 1636 are quasi-standard ports for LDAP and LDAPS, and 8080 and 8443 are quasi-standard ports for HTTP and HTTPS). But having to remember to add the nonstandard port to a web URL is an annoyance for users. I’ve seen a lot of people fix this by adding a load balanced VIP or NGINX proxy in front of the service to handle port translations, but there is a quick and easy way to handle port translation without any additional equipment. Most Linux hosts have firewalld running, and you can tell the firewall to forward the port for you. In this example, I’m letting my Kibana users access my web service using https://kibana.example.com without needing to append the :5601:

firewall-cmd --permanent --zone=public --add-forward-port=port=443:proto=tcp:toport=5601

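Should you decide against the port forwarding, the same command with --remove-forward-port deregisters the rule. Because the rule was added with --permanent, include --permanent when removing it so the rule does not come back after a reload or reboot. Permanent changes reach the running firewall after firewall-cmd --reload.

firewall-cmd --permanent --zone=public --remove-forward-port=port=443:proto=tcp:toport=5601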
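If you manage several hosts, the add and remove commands differ by a single word, so they are easy to generate. The sketch below only builds the argument list and prints it; the function name and defaults are my own, and nothing is executed.

```python
import shlex


def forward_port_command(action, port, to_port, zone="public", proto="tcp", permanent=True):
    """Build the firewall-cmd argument list to add or remove a port forward."""
    if action not in ("add", "remove"):
        raise ValueError("action must be 'add' or 'remove'")
    args = ["firewall-cmd"]
    if permanent:
        args.append("--permanent")  # write to the saved configuration
    args.append(f"--zone={zone}")
    args.append(f"--{action}-forward-port=port={port}:proto={proto}:toport={to_port}")
    return args


add_args = forward_port_command("add", 443, 5601)
remove_args = forward_port_command("remove", 443, 5601)
print(shlex.join(add_args))
print(shlex.join(remove_args))
```

To actually apply a rule, the list could be handed to `subprocess.run(args, check=True)` as root, followed by `firewall-cmd --reload` so the permanent change takes effect.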

ElastAlert2 SSL with OpenSearch 2.x

This turned out to be one of those situations where I went down a very complicated path for a very simple problem. We were setting up ElastAlert2 in our OpenSearch sandbox. I’ve used both the elasticsearch-py and opensearch-py modules with Python 3 to communicate with the cluster, so I didn’t anticipate any problems.

Which, of course, meant we had problems. A very cryptic message:

javax.net.ssl.SSLHandshakeException: Insufficient buffer remaining for AEAD cipher fragment (2). Needs to be more than tag size

A quick perusal of the archive of all IT knowledge (aka Google) led me to a Java bug: https://bugs.openjdk.org/browse/JDK-8221218 which may or may not be resolved in the latest OpenJDK (which we are using). I say may or may not because it’s marked as resolved in some places but people report experiencing the bug after resolution was reported.

Fortunately, the OpenSearch server reported something more useful:

[2022-09-20T12:18:55,869][WARN ][o.o.h.AbstractHttpServerTransport] [UOS-OpenSearch.example.net] caught exception while handling client http traffic, closing connection Netty4HttpChannel{localAddress=/10.1.2.3:9200, remoteAddress=/10.1.2.4:55494}

io.netty.handler.codec.DecoderException: io.netty.handler.ssl.NotSslRecordException: not an SSL/TLS record: 504f5354202f656c617374616c6572745f7374617475735f6572726f722f5f646f6320485454502f312e310d0a486f73743a20

Which I’ve shortened because it was several thousand fairly random seeming characters. Except they aren’t random — that’s the communication hex encoded. Throwing the string into a hex decoder, I see the HTTP POST request.
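No online decoder is needed for this. A couple of lines of Python do the same job, shown here with the shortened hex string from the log entry above.

```python
# The shortened hex string copied from the NotSslRecordException message.
hex_fragment = (
    "504f5354202f656c617374616c6572745f7374617475735f6572726f72"
    "2f5f646f6320485454502f312e310d0a486f73743a20"
)

# Each pair of hex digits is one byte of what the client sent.
decoded = bytes.fromhex(hex_fragment).decode("ascii", errors="replace")
print(repr(decoded))

# A readable HTTP verb at the start means the client spoke plain HTTP to a TLS port.
looks_like_plain_http = decoded.split(" ", 1)[0] in {"GET", "POST", "PUT", "DELETE", "HEAD"}
print(looks_like_plain_http)
```

The decoded text begins with a POST to /elastalert_status_error/_doc, which is ElastAlert2 trying to write to its status index in clear text.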

Which … struck me as rather odd because it should be SSL encrypted rubbish. Turns out use_ssl was set to False! Evidently attempting to send clear text ‘stuff’ to an encrypted endpoint produces the same error as reported in the Java bug.

Setting use_ssl to true brought us to another adventure: an SSLCertVerificationError. We had set verify_certs to false, even going so far as to go into util.py and modify line 354 so the default is False. No luck. But there’s another config in each ElastAlert2 rule, http_post_ignore_ssl_errors, that actually does ignore certificate errors. Once the rules were configured with http_post_ignore_ssl_errors, ElastAlert2 was able to communicate with the OpenSearch cluster and watch for triggering events.

OpenSearch 2.x: Building a New Tenant

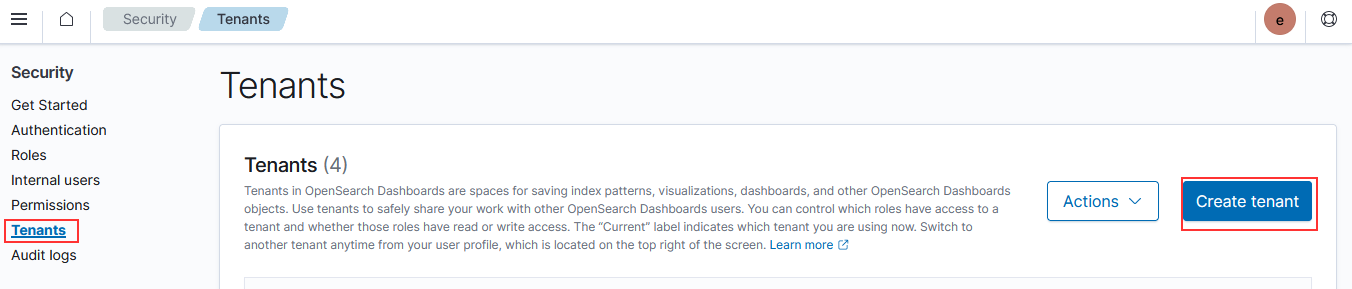

Logged in as an admin user, use the left-hand navigation menu to select “Security”. Select “Tenants” and click on “Create tenant”.

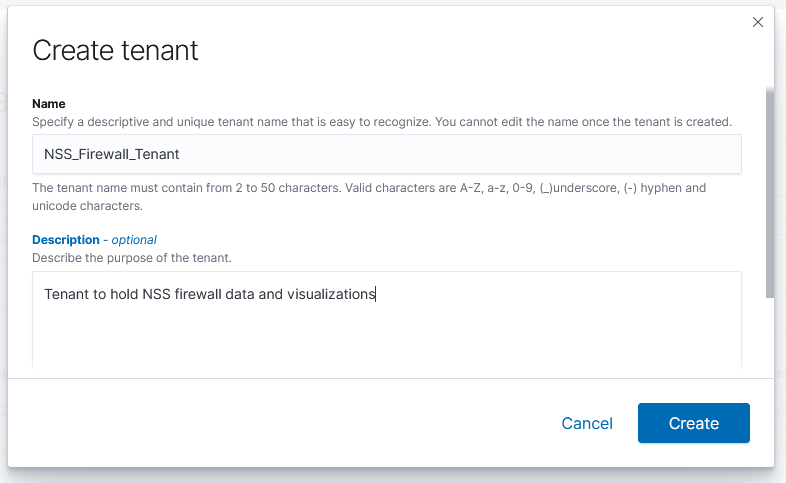

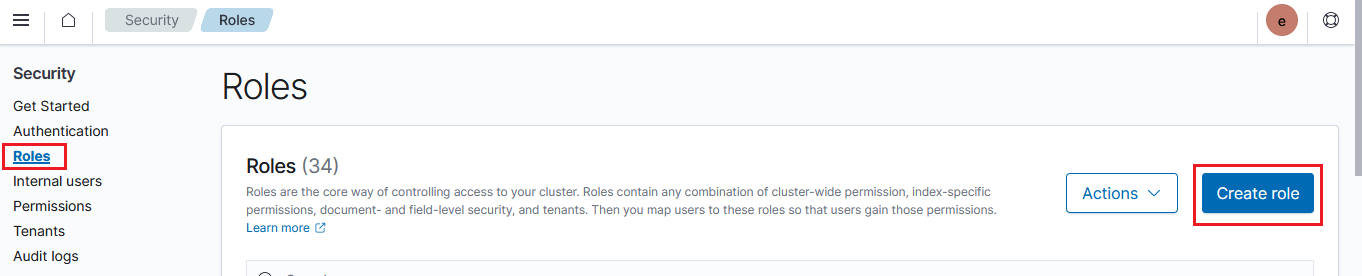

Give the tenant a name and a description, then click “Create”

OK, now a tenant is created. The important bit is to establish a role that only sees data within the tenant. Click on “Roles”, then click “Create role”.

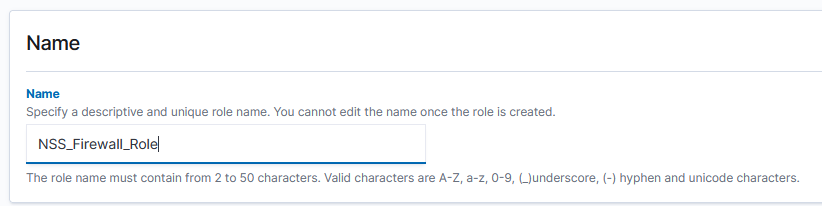

Give the role a name:

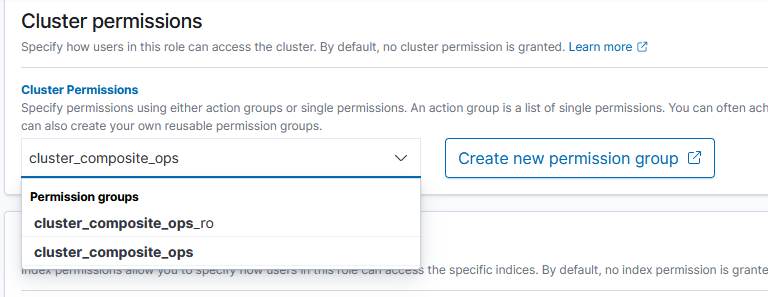

Under “Cluster permissions” add either cluster_composite_ops_ro (for read only access – cannot make new visualizations or dashboards) or cluster_composite_ops – we may make a “help desk” type role where users are not permitted to write to the tenant, but my examples herein are all for business owners who will be able to save queries, create visualizations, modify dashboards, etc.

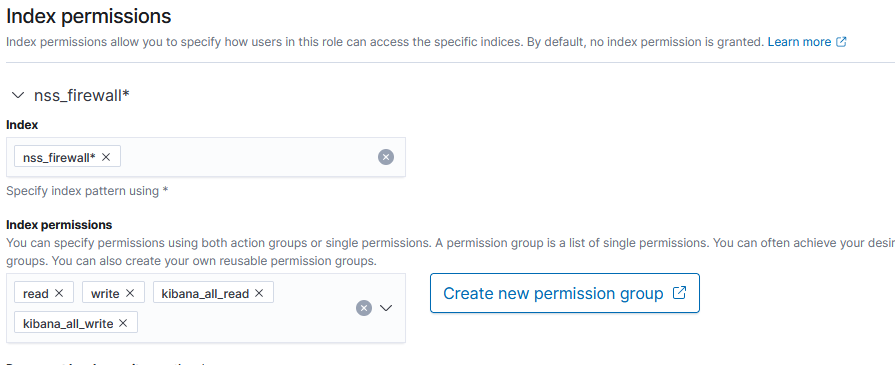

Under “Index permissions”, add the index pattern (or patterns) to which the tenant should have access. Add read or read/write permissions to the index. We are not using any of the fine-grained security components (providing access only to records that come in from a specific host or contain a specific error)

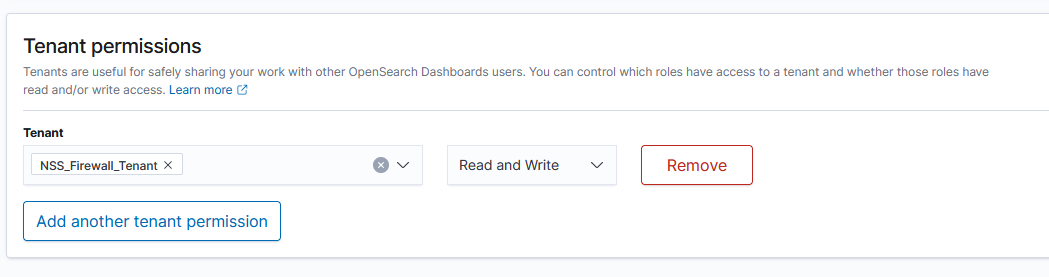

Finally, under “Tenant Permissions”, select the associated tenant and grant either Read or Read and Write permission

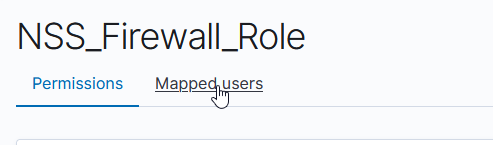

Click “Create” and your new role has been created. Once created, click on “Mapped users”

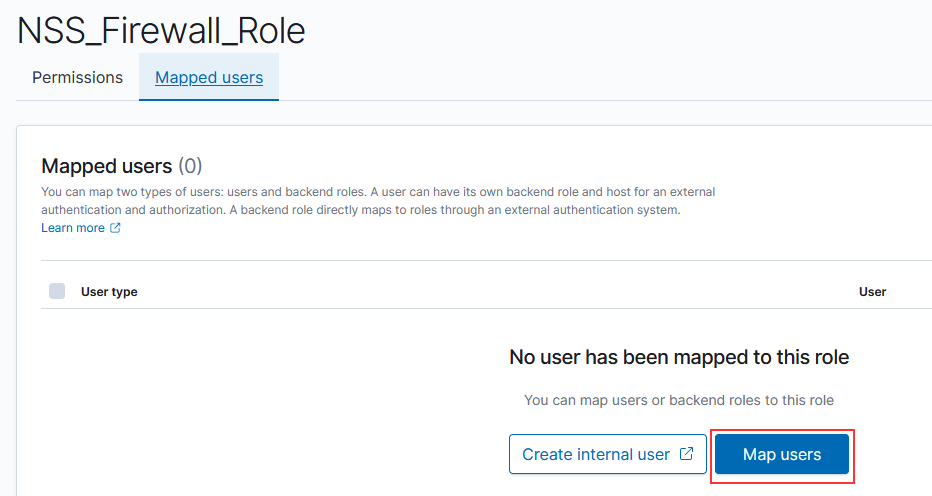

Select “Map users” to edit the users mapped to the role.

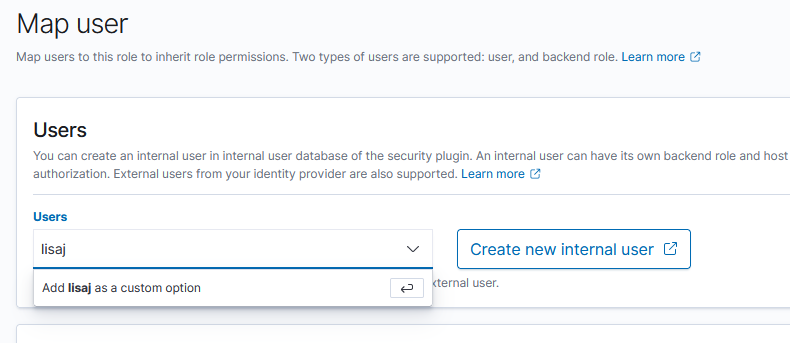

To map an externally authenticated user (accounts authenticate through OpenID, for example), just type the username and hit enter to add “as a custom option”.

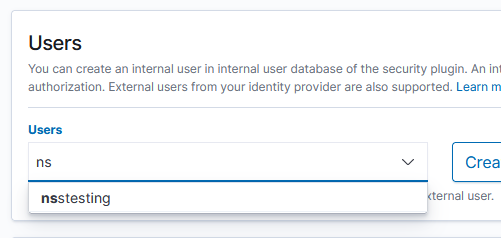

For an internal user, you’ll be able to select them from a user list as you begin typing the user ID
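The same tenant, role, and mapping can in principle be created through the security plugin's REST API instead of clicking through the GUI. I did not do it this way, so treat the sketch below as an assumption-laden outline: the tenant, role, index pattern, and user names are invented, and the mapping of the GUI's "Read" and "Read and Write" choices onto the kibana_all_read and kibana_all_write action groups is my reading of the defaults. The code only prints the requests; it does not send them.

```python
import json


def tenant_setup_requests(tenant, role, index_patterns, users, read_only=False):
    """Return (method, path, body) tuples mirroring the GUI steps above."""
    cluster_permission = "cluster_composite_ops_ro" if read_only else "cluster_composite_ops"
    index_actions = ["read"] if read_only else ["read", "write"]
    tenant_actions = ["kibana_all_read"] if read_only else ["kibana_all_write"]
    base = "/_plugins/_security/api"
    role_body = {
        "cluster_permissions": [cluster_permission],
        "index_permissions": [
            {"index_patterns": list(index_patterns), "allowed_actions": index_actions}
        ],
        "tenant_permissions": [
            {"tenant_patterns": [tenant], "allowed_actions": tenant_actions}
        ],
    }
    return [
        ("PUT", f"{base}/tenants/{tenant}", {"description": f"Tenant {tenant}"}),
        ("PUT", f"{base}/roles/{role}", role_body),
        ("PUT", f"{base}/rolesmapping/{role}", {"users": list(users)}),
    ]


# Hypothetical names used purely for illustration.
requests_to_send = tenant_setup_requests(
    "network-ops", "network_ops_rw", ["firewall-*"], ["jdoe"]
)
for method, path, body in requests_to_send:
    print(method, path)
    print(json.dumps(body, indent=2))
```

Each tuple would be sent with an admin certificate or admin credentials; externally authenticated users go in the `users` list exactly as they are typed into the "Map users" dialog.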

There is one trick to getting a new tenant working — https://forum.opensearch.org/t/multi-tenancy-for-different-indices/5008/8 indicates you’ve got to use an admin account from the global tenant, switch to the new tenant, and create the index pattern there. Once the index pattern (or, I suppose, patterns) has been created, the tenant users are able to discover / visualize their data.

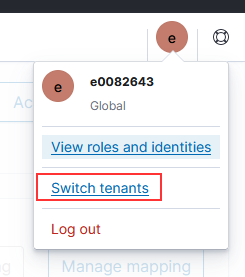

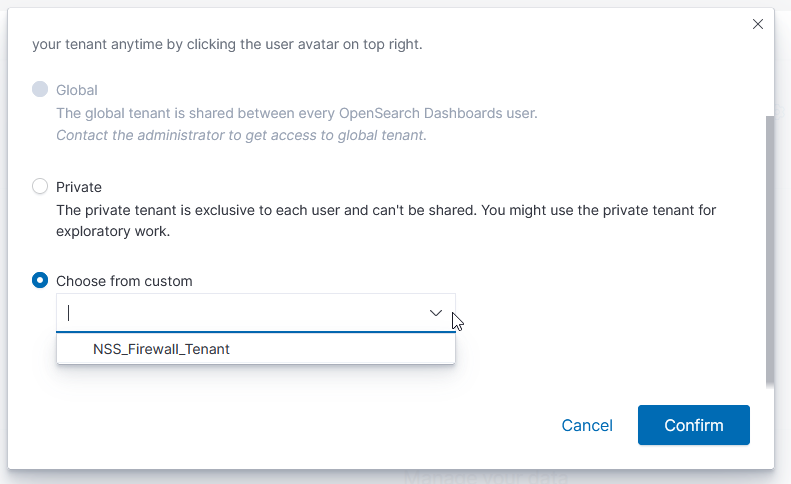

Click on the icon for your user account and select “Switch tenants”

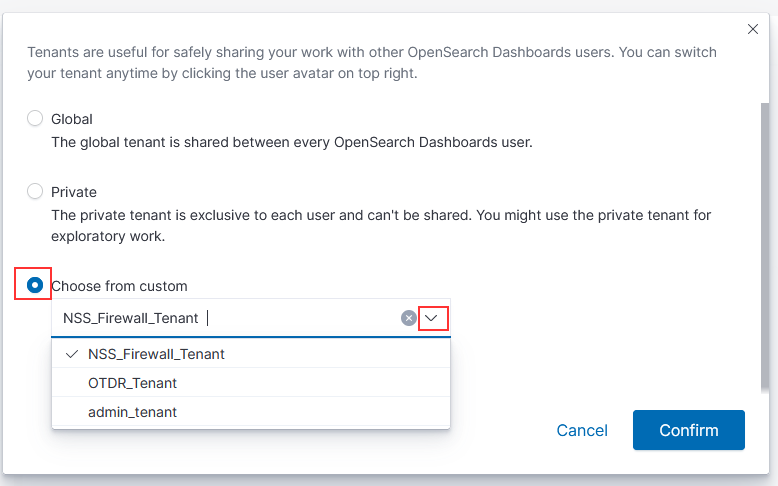

Select the radio button in front of “Choose from custom” and then use the drop-down to select your newly created tenant. Click “Confirm” to switch to that tenant.

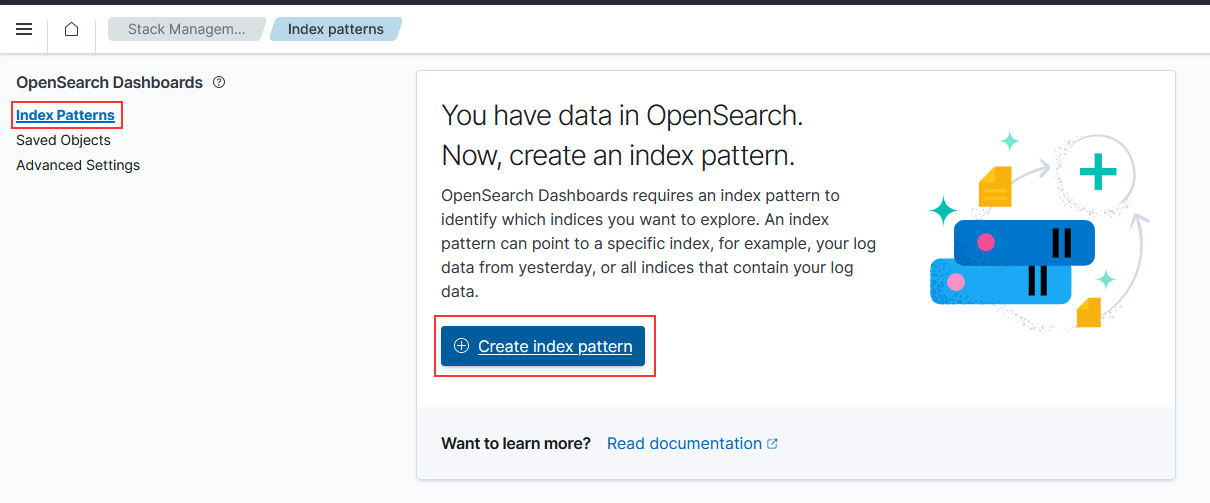

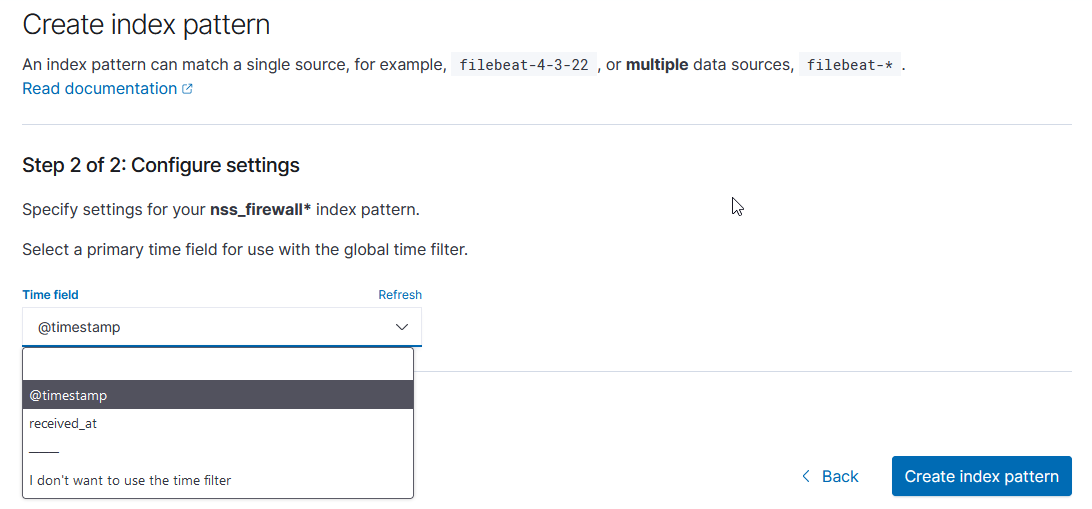

From the left-hand navigation menu, select “Stack Management” and then select “Index Patterns”. Click to “Create index pattern”

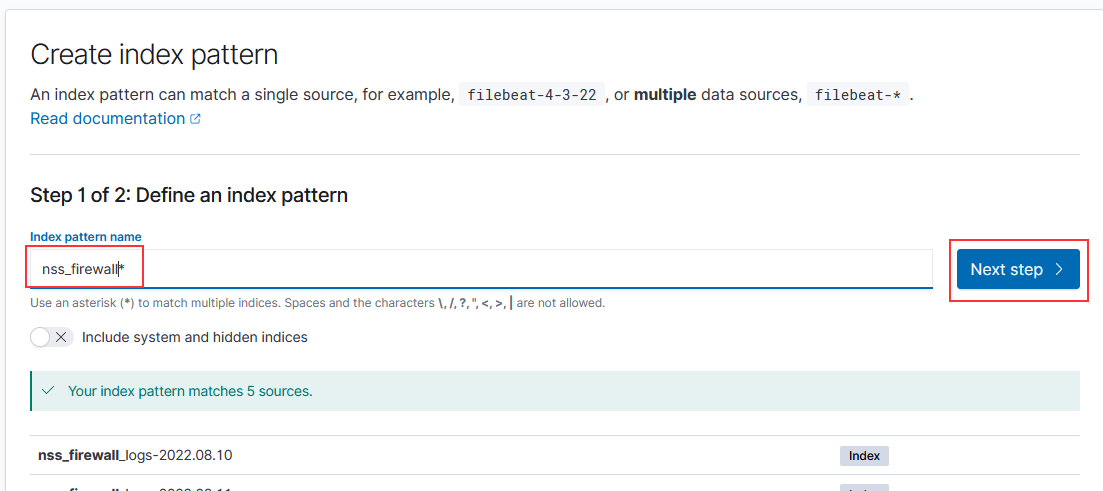

Type the pattern and click “Next step”

Select the time field from the drop-down menu, then click “Create index pattern”

Now you’re ready – have a user log into OpenSearch Dashboards. They’ll need to select the radio button to “Choose from custom” and select their tenant from the dropdown menu.

Logstash – Filtering Null-Terminated Messages

I have a syslog message that contains a null terminated string: "syslog_message":"A10\u0000" — these messages represent is-alive checks from a load balancer to the logstash servers. I would prefer not to have thousands of “the A10 checked & said logstash is still there” filling up Elasticsearch.

Unfortunately, the logstash configuration doesn’t recognize unicode escape sequences … and it’s not like I can literally type a NULL the way I could type a ° or è

I’ve been able to filter out any messages that start with A10. Since our “real” messages start with timestamps, I shouldn’t be dropping any good data, but there’s always the possibility. Without any way to indicate a null character, the closest match is any single character … and I’ve decided not to worry about a possible log message that is simply A101 or A10$ until we encounter a system that would send such messages.

    #if [message] == "A10\u0000"{ -- doesn't work
    #if [message] == "A10\\u0000"{ -- doesn't work
    #if [message] == 'A10\u0000'{ -- doesn't work
    #if [message] =~ /^A10/{ -- this isn't great because of false positives, although *these* messages all start with a timestamp so are unlikely to match
    if [message] =~ "^A10.$" {
      drop { }
    }
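To see what the pattern does and does not catch, here is a quick check in Python. Python's regex engine is not the one Logstash uses, so this is only an approximation of the filter's behavior, and the timestamped sample message is invented.

```python
import re

# Same pattern as the Logstash conditional: "A10" followed by exactly one character.
HEALTH_CHECK = re.compile(r"^A10.$")

samples = [
    "A10\x00",   # the null-terminated is-alive check
    "A101",      # accepted false positive
    "A10$",      # accepted false positive
    "A10",       # too short, no trailing character
    "2022-09-20T12:18:55 host app: A10 pool member up",  # invented "real" message
]

results = {}
for message in samples:
    # search() rather than fullmatch() because =~ is an unanchored match in Logstash
    results[message] = "drop" if HEALTH_CHECK.search(message) else "keep"
    print(f"{message!r}: {results[message]}")
```

Only the four-character messages are dropped; anything that begins with a timestamp is kept, which matches the trade-off described above.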