Certificate Issuance Fails

After requesting a certificate, the request immediately fails with the error:

Failed to post CSR with error: Unknown certificate profile type.

I think it is just a coincidence, but I wanted to document the scenario in case it comes up again. The application makes web calls to a vendor API to issue certificates, and after the upgrade those API calls were failing.

In this scenario, a call was being made to {base_url}/api/ssl/v1/types and the connection failed. Because the list of valid certificate profiles could not be retrieved, the request failed with a message saying the certificate profile type was unknown.

GET https://hard.cert-manager.com/api/ssl/v1/types?organizationId=####

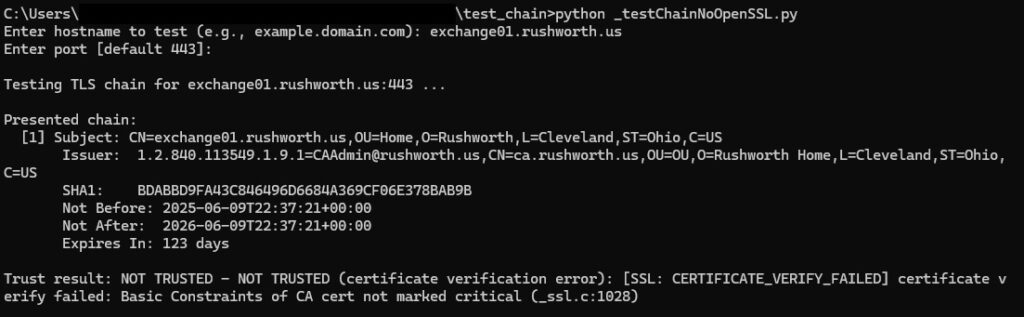

Looking at a debug trace, the following flow was observed:

- Authentication headers sent: login=<REDACTED>, password=<REDACTED>, customerUri=<REDACTED>

- Transport-level failure (no HTTP status returned on the failing attempt)

- Symptoms: “Decrypt failed with error 0X90317” followed by “The underlying connection was closed: The connection was closed unexpectedly.”

- Context: Revocation checks reported “revocation server was offline,” then the client proceeded; long idle/keep-alive reuse likely contributed to the close.
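
A trace like the one summarized above can be captured with the System.Net tracing built into .NET Framework. The following is a minimal sketch of the configuration, assuming the application runs on .NET Framework and reads its own .exe.config file; the log file name network.log is an arbitrary choice for illustration.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.diagnostics>
    <sources>
      <!-- Trace the System.Net stack (HttpWebRequest, ServicePoint, TLS) -->
      <source name="System.Net" tracemode="includehex" maxdatasize="1024">
        <listeners>
          <add name="System.Net" />
        </listeners>
      </source>
    </sources>
    <switches>
      <!-- Verbose is the most detailed level -->
      <add name="System.Net" value="Verbose" />
    </switches>
    <sharedListeners>
      <!-- network.log is a placeholder; the file is written relative to the working directory -->
      <add name="System.Net"
           type="System.Diagnostics.TextWriterTraceListener"
           initializeData="network.log" />
    </sharedListeners>
    <trace autoflush="true" />
  </system.diagnostics>
</configuration>
```

Verbose output includes request headers, so the log may contain credentials. Remove the section once troubleshooting is finished.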

Connection reuse vs server keep-alive: Apache is advertising Keep-Alive: timeout=3. The .NET client is reusing long-idle TLS connections via the proxy; by the time it sends application data, the server/proxy has already closed the session, leading to “underlying connection was closed” errors.

Revocation checks through the proxy: The .NET trace shows “revocation server was offline” before proceeding. That extra handshake work plus proxy blocking CRL/OCSP can increase latency and contribute to idle reuse issues.

.NET SChannel quirks: Older HttpWebRequest/ServicePoint behaviors (Expect100-Continue, connection pooling) can interact poorly with short keep-alive servers/proxies.
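
If the Expect100-Continue behavior is suspected, it can be switched off from configuration rather than code. This is only a sketch, assuming a .NET Framework application that does not already set the value in code; whether it helps in this particular scenario was not tested here.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.net>
    <settings>
      <!-- Stops HttpWebRequest from sending "Expect: 100-continue" on requests with a body -->
      <servicePointManager expect100Continue="false" />
    </settings>
  </system.net>
</configuration>
```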

Luckily, this is a .NET application, and you can create custom configuration files for .NET apps. In the folder containing the binary, look for a text file named BinaryName.exe.config

If none exists, create one. The following stops the application from using the system proxy:

<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.net>
    <!-- Turn off use of the system proxy for this app -->
    <defaultProxy enabled="true">
      <proxy usesystemdefault="false" />
    </defaultProxy>
  </system.net>
</configuration>
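
If other traffic from the application still needs the proxy, a narrower variant is to keep the system proxy and bypass it only for the vendor host. This is a hedged sketch rather than a tested fix; the host pattern is an assumption taken from the request URL shown earlier.

```xml
<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <system.net>
    <defaultProxy enabled="true">
      <!-- Keep using the system proxy for everything else -->
      <proxy usesystemdefault="true" />
      <bypasslist>
        <!-- address is a regular expression; this host is assumed from the URL above -->
        <add address="hard\.cert-manager\.com" />
      </bypasslist>
    </defaultProxy>
  </system.net>
</configuration>
```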