There’s a rather innocuous-sounding bug in Apache Airflow (https://github.com/apache/airflow/pull/36538), which should be corrected in 2.8.2, that means you absolutely cannot set up SSO using OAuth with FabAirflowSecurityManagerOverride. Using the deprecated AirflowSecurityManager would work, as would manually updating your Apache Airflow code with the fix. But there’s no point in trying to set up SSO with FabAirflowSecurityManagerOverride as your custom security manager: whatever lovely code you write won’t be invoked. You’ll get an error saying the username or email address is not present even though you thoughtfully wrote out some custom code to map those exact attributes, and it all looks like it should be working!
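For context, the "custom code to map those attributes" usually boils down to a small translation from the identity provider's claims to the user-info keys the login code looks for. Here is a minimal sketch of that mapping as a plain function. The name map_oauth_userinfo is made up for illustration and is not part of Airflow, the claim names assume a provider that returns standard OIDC claims, and the "groups" claim is a hypothetical source for role keys. In a real webserver_config.py, logic like this would live in the user-info method of your custom security manager.

```python
from typing import Any, Mapping


def map_oauth_userinfo(claims: Mapping[str, Any]) -> dict:
    """Translate identity-provider claims into user-info keys.

    Assumption: the provider returns standard OIDC claim names. The
    'groups' claim is hypothetical; many providers call it something else.
    """
    email = claims.get("email") or claims.get("mail") or ""
    # Prefer a human-readable logon name, fall back to the subject, then email.
    username = claims.get("preferred_username") or claims.get("sub") or email
    return {
        "username": username,
        "email": email,
        "first_name": claims.get("given_name", ""),
        "last_name": claims.get("family_name", ""),
        "role_keys": list(claims.get("groups", [])),
    }


if __name__ == "__main__":
    print(map_oauth_userinfo({"sub": "abc123", "email": "lisa@example.com"}))
```

If the username or email comes back empty from a function like this, that is the situation the "not present" error describes. With the bug above, you get that error even when the mapping itself is fine, because the method is never called.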

Tag: OAuth

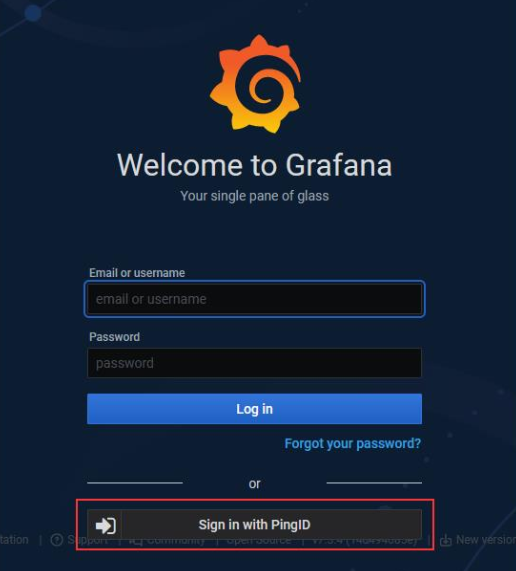

Grafana — SSO With PingID (OAuth)

I enabled SSO in our development Grafana system today. There’s not a great user experience with SSO enabled because there is a local ‘admin’ user that has extra special rights that aren’t given to users put into the admin role. If you just enable SSO, there is a new button added under the logon dialogue that users can use to initiate an SSO authentication. That’s not great, though, since most users really should be using the SSO workflow. And people are absolutely going to be putting their login information into that really obvious set of text input fields.

Grafana has a configuration setting to bypass the logon form and always go down the OAuth authentication path:

# Set to true to attempt login with OAuth automatically, skipping the login screen.

# This setting is ignored if multiple OAuth providers are configured.

oauth_auto_login = true

Except, now, the rare occasion we need to use the local admin account requires us to set this to false, restart the service, do our thing, change the setting back, and restart the service again. Which is what we’ll do … but it’s not a great solution either.

Config to authenticate Grafana to PingID using OAuth

#################################### Generic OAuth ##########################

[auth.generic_oauth]

name = PingID

enabled = true

allow_sign_up = true

client_id = 12345678-1234-4567-abcd-123456789abc

client_secret = abcdeFgHijKLMnopqRstuvWxyZabcdeFgHijKLMnopqRstuvWxyZ

scopes = openid profile email

email_attribute_name = email:primary

email_attribute_path =

login_attribute_path = user

role_attribute_path =

id_token_attribute_name =

auth_url = https://login.example.com/as/authorization.oauth2

token_url = https://login.example.com/as/token.oauth2

api_url = https://login.example.com/idp/userinfo.openid

allowed_domains =

team_ids =

allowed_organizations =

tls_skip_verify_insecure = true

tls_client_cert =

tls_client_key =

tls_client_ca =
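A quick sanity check of that section can save a restart or two. The following is a rough sketch, not anything Grafana ships: it assumes you paste just the [auth.generic_oauth] section into a string (a full grafana.ini may contain things Python's configparser dislikes), and the function name check_generic_oauth and the list of "required" keys are my own choices for illustration.

```python
import configparser

# Trimmed-down copy of the section above; the secret is left blank on purpose.
SAMPLE = """
[auth.generic_oauth]
name = PingID
enabled = true
client_id = 12345678-1234-4567-abcd-123456789abc
client_secret =
auth_url = https://login.example.com/as/authorization.oauth2
token_url = https://login.example.com/as/token.oauth2
api_url = https://login.example.com/idp/userinfo.openid
tls_skip_verify_insecure = true
"""


def check_generic_oauth(ini_text: str) -> list:
    """Return a list of human-readable problems found in the section."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(ini_text)
    section = parser["auth.generic_oauth"]
    problems = []
    # Keys this sketch treats as required (an assumption, not Grafana's rule).
    for key in ("client_id", "client_secret", "auth_url", "token_url", "api_url"):
        if not section.get(key, "").strip():
            problems.append(f"{key} is empty")
    for key in ("auth_url", "token_url", "api_url"):
        value = section.get(key, "").strip()
        if value and not value.startswith("https://"):
            problems.append(f"{key} is not an https URL")
    if section.getboolean("tls_skip_verify_insecure", fallback=False):
        problems.append("tls_skip_verify_insecure is true (certificate checks are off)")
    return problems


if __name__ == "__main__":
    for problem in check_generic_oauth(SAMPLE):
        print(problem)
```

Note that the config above has tls_skip_verify_insecure = true, so this check would flag it. That is fine for a development system but worth revisiting before production.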

Configuring OpenSearch 2.x with OpenID Authentication

Sorry, again, Anya … I really mean it this time. Restart your ‘no posting about computer stuff’ timer!

I was able to cobble together a functional configuration to authenticate users through an OpenID identity provider. This approach combined the vendor documentation, ten different forum posts, and some debugging of my own. Which is to say … not immediately obvious.

Importantly, you can enable debug logging on just the authentication component. Trying to read through the logs when debug logging is set globally is unreasonable. To enable debug logging for JWT, add the following to config/log4j2.properties

logger.securityjwt.name = com.amazon.dlic.auth.http.jwt

logger.securityjwt.level = debug

On the OpenSearch Dashboard server, add the following lines to ./opensearch-dashboards/config/opensearch_dashboards.yml

opensearch_security.auth.type: "openid"

opensearch_security.openid.connect_url: "https://IdentityProvider.example.com/.well-known/openid-configuration"

opensearch_security.openid.client_id: "<PRIVATE>"

opensearch_security.openid.client_secret: "<PRIVATE>"

opensearch_security.openid.scope: "openid"

opensearch_security.openid.header: "Authorization"

opensearch_security.openid.base_redirect_url: "https://opensearch.example.com/auth/openid/login"

On the OpenSearch servers, in ./config/opensearch.yml, make sure you have defined plugins.security.ssl.transport.truststore_filepath

While this configuration parameter is listed as optional, something needs to be in there for the OpenID stuff to work. I just linked the cacerts from our JDK installation into the config directory.

If needed, also configure the following additional parameters. Since I was using the cacerts truststore from our JDK, I was able to use the defaults.

| Parameter | Description |
| --- | --- |
| plugins.security.ssl.transport.truststore_type | The type of the truststore file, JKS or PKCS12/PFX. Default is JKS. |
| plugins.security.ssl.transport.truststore_alias | Alias name. Optional. Default is all certificates. |
| plugins.security.ssl.transport.truststore_password | Truststore password. Default is changeit. |

Configure the openid_auth_domain in the authc section of ./opensearch/config/opensearch-security/config.yml

openid_auth_domain:

  http_enabled: true

  transport_enabled: true

  order: 1

  http_authenticator:

    type: "openid"

    challenge: false

    config:

      openid_connect_idp:

        enable_ssl: true

        verify_hostnames: false

      openid_connect_url: https://idp.example.com/.well-known/openid-configuration

  authentication_backend:

    type: noop

Note that subject_key and role_key are not defined. When I had subject_key defined, all user logon attempts failed with the following error:

[2022-09-22T12:47:13,333][WARN ][c.a.d.a.h.j.AbstractHTTPJwtAuthenticator] [UOS-OpenSearch] Failed to get subject from JWT claims, check if subject_key 'userId' is correct.

[2022-09-22T12:47:13,333][ERROR][c.a.d.a.h.j.AbstractHTTPJwtAuthenticator] [UOS-OpenSearch] No subject found in JWT token

[2022-09-22T12:47:13,333][WARN ][o.o.s.h.HTTPBasicAuthenticator] [UOS-OpenSearch] No 'Basic Authorization' header, send 401 and 'WWW-Authenticate Basic'
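When you hit that "check if subject_key is correct" message, it helps to look at which claims the token actually contains. Below is a small sketch that decodes the payload of a JWT without verifying the signature, so it is for debugging only and should never be used to make an access decision. The sample token is fabricated for the example; in practice you would paste in a token captured from your own identity provider.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Return the payload of a JWT as a dict. The signature is NOT checked."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("expected header.payload.signature")
    payload = parts[1]
    # JWTs strip base64 padding, so put it back before decoding.
    payload += "=" * (-len(payload) % 4)
    return json.loads(base64.urlsafe_b64decode(payload))


def _b64url(data: dict) -> str:
    """Helper used only to fabricate the sample token below."""
    raw = json.dumps(data).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()


# Fabricated token: note there is no 'userId' claim in it.
sample_token = ".".join([
    _b64url({"alg": "RS256", "typ": "JWT"}),
    _b64url({"sub": "lisa", "email": "lisa@example.com"}),
    "signature-not-checked",
])

claims = jwt_claims(sample_token)
print(sorted(claims))  # ['email', 'sub']
```

A token like the sample has no claim named userId, which would be consistent with the errors above when subject_key is set to 'userId'.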

Finally, use securityadmin.sh to load the configuration into the cluster:

/opt/opensearch-2.2.1/plugins/opensearch-security/tools/securityadmin.sh --diagnose -cd /opt/opensearch/config/opensearch-security/ -icl -nhnv -cacert /opt/opensearch-2.2.1/config/certs/root-ca.pem -cert /opt/opensearch-2.2.1/config/certs/admin.pem -key /opt/opensearch-2.2.1/config/certs/admin-key.pem -h UOS-OpenSearch.example.com

Restart OpenSearch and OpenSearch Dashboard. Then, in the role mappings, add custom objects for the external user IDs.

When logging into the Dashboard server, users will be redirected to the identity provider for authentication. In our sandbox, we have two Dashboard servers — one for general users which is configured for external authentication and a second for locally authenticated users.

OpenID Authentication with OpenDistro

The following configuration changes were needed to enable federated authentication through OpenID Connect (OIDC) using OpenDistro 1.8.0 with ElasticSearch 7.7.0. This presupposes that you have an application properly registered with an OIDC identity provider.

./kibana/config/kibana.yml

opendistro_security.auth.type: "openid"

opendistro_security.openid.connect_url: "https://login.example.com/.well-known/openid-configuration"

opendistro_security.openid.client_id: "REDACTED"

opendistro_security.openid.client_secret: "REDACTED"

opendistro_security.openid.scope: "openid"

opendistro_security.openid.header: "Authorization"

opendistro_security.openid.base_redirect_url: "https://opensearch.dev.example.com"

And then on the ElasticSearch node, update ./elasticsearch/config/elasticsearch.yml

opendistro_security.ssl.transport.truststore_filepath: cacerts

And ./elasticsearch/plugins/opendistro_security/securityconfig/config.yml

basic_internal_auth_domain:

  description: "Authenticate via HTTP Basic against internal users database"

  http_enabled: true

  transport_enabled: true

  order: 4

  http_authenticator:

    type: basic

    challenge: true

  authentication_backend:

    type: intern

openid_auth_domain:

  http_enabled: true

  transport_enabled: true

  order: 1

  http_authenticator:

    type: openid

    challenge: false

    config:

      enable_ssl: true

      verify_hostnames: false

      openid_connect_url: https://login.example.com/.well-known/openid-configuration

  authentication_backend:

    type: noop

Use securityadmin.sh to load the updated configuration into the cluster. It helps if you also update ./elasticsearch/plugins/opendistro_security/securityconfig/roles_mapping.yml so your users are mapped to a role:

all_access:

  reserved: false

  backend_roles:

  - "admin"

  users:

  - "lisa"

  description: "Maps admin to all_access"

My experience is that the ElasticSearch API will allow authentication for local users. Kibana, however, does not — if you want to allow local users to log into Kibana, you’d either need a different Kibana instance (permanently allow local users to access Kibana) or update the kibana.yml to exclude the federated logon stuff & restart the service (temporary workaround when the identity provider has an issue).

The biggest challenge that I encountered is that there is, evidently, a bug in OpenDistro 1.13.1 that makes OIDC authentication non-functional. Downgrading to OpenDistro 1.13.0 worked, as did 1.8.0 (the version matched with our ElasticSearch 7.7.0 iteration). And, reportedly, the newest 1.13.3 works as well.

On Federated Identity Providers

The basic idea here is that you may want someone to be able to validate your users without actually having access to your passwords or directory data. As a counter-example, a company I work with has their payroll “stuff” outsourced. Doing so required a B2B VPN that allowed the hosting company to access an internal LDAP directory. I set up an access control list for their connection so they could only authenticate users. Someone at the hosting company couldn’t download all of the e-mail addresses or phone numbers. Even so, a sufficiently motivated employee of the third-party company could get the logon and password for anyone who used their server. If it’s my code, adding the equivalent of fileHandle.write(f"u:{username} p:{password}") would write a log file with every cred used on the site.

“Don’t contract with dodgy companies that are going to drop your user creds out to a file and do malicious stuff” is a good start, but I would concede that “avoid dodgy companies” isn’t a great security paradigm. Someone came up with this “federated identity” methodology: instead of you asking the user for their ID and password, you get a URL to redirect not-yet-logged-on users over to someone trusted to handle passwords. This is the “identity provider”, or IdP.

I access your website (called the ‘service provider’, or SP), and you see I don’t have any sort of auth cookie to get me logged in. You forward my browser, along with some header info, over to IdentityProviderSite. IdentityProviderSite says to the end user “hey, what is your username and password”, checks what is entered, maybe does the MFA “really, prove it” thing, and then redirects the browser back to the originating website. It includes some header stuff that says “Hi, I am IdentityProviderSite and I used my trusted private key to sign this message. I promise that the person associated with this connection is really Lisa. And here’s her important info (could just be username, could be first name, last name, email address, etc) that you can also trust is right.” No idea why, but the info about the person is called an “assertion”, so you’ll see talk about mapping assertions (which is basically telling my application that the thing it calls “logonID” is going to be called “userID” or “uid” or whatever in the data coming from IdentityProviderSite). Voila, I’m now on your website and logged in even though my password never transited your system. All you ever got was a promise that the person on this connection is really Lisa.

To accomplish this, there is a ‘trust’ between an application & an identity provider. If you tried to send a web user to IdentityProviderSite without establishing such a trust, it would say “yeah, I’m not validating users for you; I have no idea who you are”. And, similarly, a web app isn’t going to just trust any random source to say “really, I promise this is Lisa”. So we go into the web application and say “I really, really want to trust IdentityProviderSite when it tells me a user’s ID” and then go into IdentityProviderSite and say “I want WebApp to be able to ask to validate users”. And there’s some crypto stuff because IdentityProviderSite signs its “I promise this is Lisa” message & we don’t want someone to be able to edit that to say “I promise this is Fred”.

Why, oh why, is “where to send the authenticated person back to continue on their merry way” called an Assertion Consumer Service? The “service provider” is supposed to “consume” the identity … so it’s the URL of the “assertion consumer” (i.e. the code in the application that has some clue what to do with the “I promise this is Lisa” blob of data that they call an assertion).

Does this make any sense for third-party companies that we really shouldn’t trust? Companies that aren’t located on our internal network to access our directories directly? Absolutely! Does this make any sense for our internal stuff? Stuff with direct, encrypted access to the AD directory? Eh … it goes well with the “trust no one” security principle. And points for consistency: every app’s logon will look the same. But it’s a lot of overhead / Internet traffic / complexity, too.

The basic process flow when a user attempts to use a site is:

- A client attempts to access some web resource to which they are not already authenticated.

- The end web application redirects the client to the Identity Provider.

- The Identity Provider authenticates the user.

- The Identity Provider redirects the client to the Assertion Consumer Service (ACS) on the web resource by sending a SAML response over HTTP POST.

- The web server processes the SAML response.

- The client is redirected to the actual web application URL.

- The web server authorizes the user to access the requested web resource.

- The application server sends the HTTP response back to client.
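The "I promise this is Lisa" part of that flow, plus the assertion mapping mentioned earlier, can be sketched in a few lines. This is a toy under loud assumptions: a real identity provider signs with its private key and the service provider verifies with the matching public certificate, whereas this sketch swaps in a shared-secret HMAC so it runs with only the Python standard library. The function names, the key, and the uid-to-logonID mapping are all invented for illustration.

```python
import base64
import hashlib
import hmac
import json

# Stand-in for the trust set up between the two sides (assumption: a shared
# secret; real SAML/OIDC deployments use an asymmetric key pair instead).
SHARED_KEY = b"demo-key-not-for-real-use"


def idp_issue_assertion(attributes: dict, key: bytes = SHARED_KEY) -> str:
    """Identity provider side: sign the user's attributes."""
    body = base64.urlsafe_b64encode(json.dumps(attributes, sort_keys=True).encode())
    signature = hmac.new(key, body, hashlib.sha256).hexdigest()
    return f"{body.decode()}.{signature}"


def sp_consume_assertion(blob: str, key: bytes = SHARED_KEY, mapping=None) -> dict:
    """Service provider side: verify the signature, then map attribute names."""
    body, _, signature = blob.rpartition(".")
    expected = hmac.new(key, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(signature, expected):
        raise ValueError("signature mismatch: altered or untrusted assertion")
    attributes = json.loads(base64.urlsafe_b64decode(body))
    # Assertion mapping: the IdP says 'uid', this application says 'logonID'.
    mapping = mapping or {"uid": "logonID"}
    return {mapping.get(name, name): value for name, value in attributes.items()}


if __name__ == "__main__":
    blob = idp_issue_assertion({"uid": "lisa", "email": "lisa@example.com"})
    print(sp_consume_assertion(blob))
```

Editing the body to say "fred" while reusing the old signature makes sp_consume_assertion raise, which is the whole point of the crypto stuff: the application never sees a password, only a promise it can check.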

SSO In Apache HTTPD – OAuth2

PingID is another external authentication source that looks to be replacing ADFS at work in the not-too-distant future. Unfortunately, I’ve not been able to get anyone to set up the “other side” of this authentication method … so the documentation is untested. There is an Apache Integration Kit available from PingID (https://www.pingidentity.com/en/resources/downloads/pingfederate.html). Documentation for setup is located at https://docs.pingidentity.com/bundle/pingfederate-apache-linux-ik/page/kxu1563994990311.html

Alternately, you can use OAuth2 through Apache HTTPD to authenticate users against PingID. To set up OAuth, you’ll need the mod_auth_openidc module (this is also available from the RedHat dnf repository). You’ll also need the client ID and secret that make up the OAuth2 client credentials. The full set of configuration parameters used in /etc/httpd/conf.d/auth_openidc.conf (or added to individual site-httpd.conf files) can be found at https://github.com/zmartzone/mod_auth_openidc/blob/master/auth_openidc.conf

As I am not able to register to use PingID, I am using an alternate OAuth2 provider for authentication. The general idea should be the same for PingID: get the metadata URL, client ID, and secret added to the oidc configuration.

Setting up Google OAuth Client:

Register OAuth on Google Cloud Platform (https://console.cloud.google.com/). Under “APIs & Services”, select “OAuth Consent Screen”. Build a testing app. You can use URLs that don’t go anywhere interesting, but if you want to publish the app for real usage, you’ll need real stuff.

Under “APIs & Services”, select “Credentials”. Select “Create Credentials” and select “OAuth Client ID”.

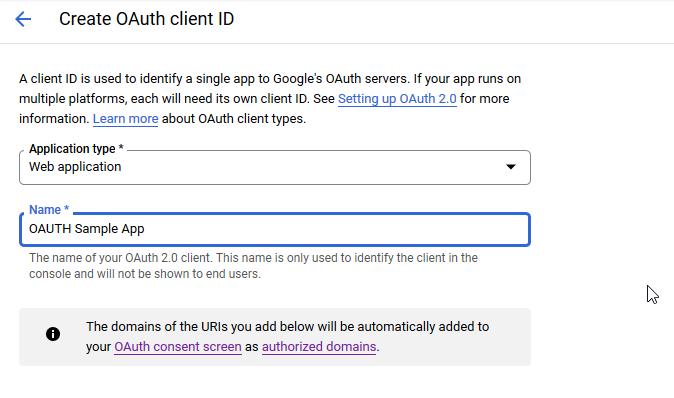

Select the application type “Web application” and provide a name for the connection

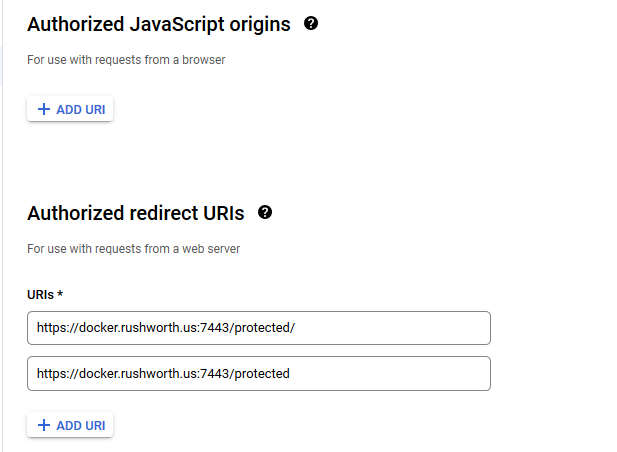

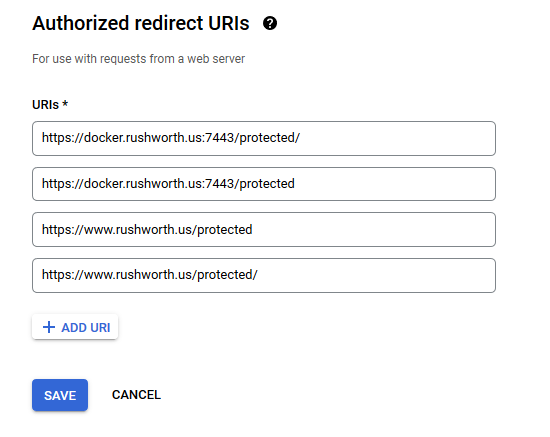

You don’t need any authorized JS origins. Add the authorized redirect URI(s) appropriate for your host. In this case, the internal URI is my docker host on port 7443. The generally used URI is my reverse proxy server. I’ve had redirect URI mismatch errors unless the authorized URIs include both forms of each address, with and without the trailing slash. Click “Create” to complete the operation.

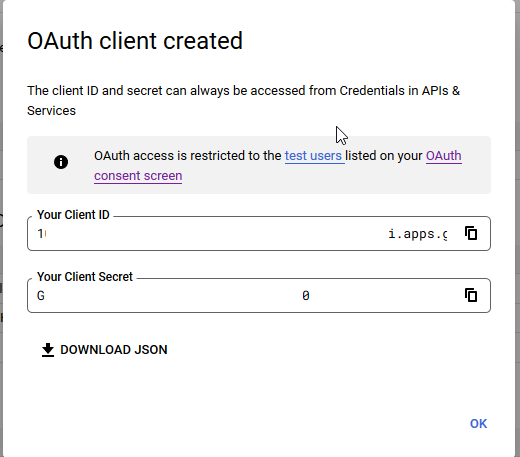

You’ll see a client ID and secret – stash those as we’ll need to drop them into the openidc config file. Click “OK” and we’re ready to set up the web server.

Setting Up Apache HTTPD to use mod_auth_openidc

Clone the mod_auth_openidc repo (https://github.com/zmartzone/mod_auth_openidc.git) – I made one change to the Dockerfile. I’ve seen general guidance that using ENV to set DEBIAN_FRONTEND to noninteractive is not ideal, so I replaced that line with the transient form of the directive:

ARG DEBIAN_FRONTEND=noninteractive

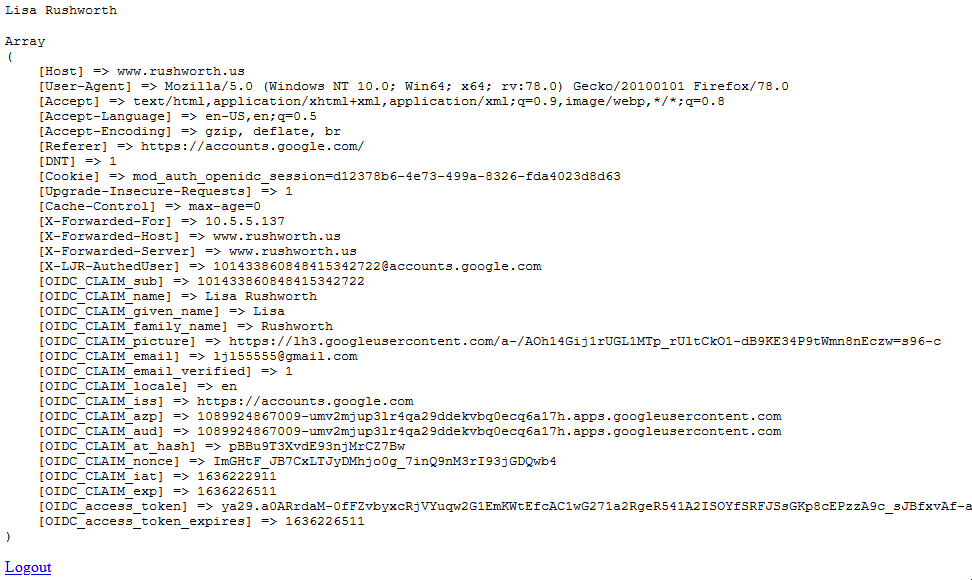

I also changed the index.php file to

RUN echo "<html><head><title>Sample OAUTH Site</title></head><body><?php print \$_SERVER['OIDC_CLAIM_email'] ; ?><pre><?php print_r(array_map(\"htmlentities\", apache_request_headers())); ?></pre><a href=\"/protected/?logout=https%3A%2F%2Fwww.rushworth.us%2Floggedout.html\">Logout</a></body></html>" > /var/www/html/protected/index.php

Build an image:

docker build -t openidc:latest .

Create an openidc.conf file on your file system. We’ll bind this file into the container so our config is in place instead of the default one. In my example, I have created “/opt/openidc.conf”. File content included below (although you’ll need to use your client ID and secret and your hostname). I’ve added a few claims so we have access to the name and email address (email address is the logon ID)

Then run a container using the image. My sandbox is fronted by a reverse proxy, so the port used doesn’t have to be well known.

docker run --name openidc -p 7443:443 -v /opt/openidc.conf:/etc/apache2/conf-available/openidc.conf -it openidc /bin/bash -c "source /etc/apache2/envvars && valgrind --leak-check=full /usr/sbin/apache2 -X"

* In my case, the docker host is not publicly available. I’ve also added the following lines to the reverse proxy at www.rushworth.us

ProxyPass /protected https://docker.rushworth.us:7443/protected

ProxyPassReverse /protected https://docker.rushworth.us:7443/protected

Access https://www.rushworth.us/protected/index.php (I haven’t published my app for Google’s review, so it’s locked down to use by registered accounts only … at this time, that’s only my ID. I can register others too.) You’ll be bounced over to Google to provide authentication, then handed back to my web server.

We can then use the OIDC_CLAIM_email value, $_SERVER['OIDC_CLAIM_email'], to continue in-application authorization steps (if needed).
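As a rough illustration of such an in-application authorization step, here is a sketch written in Python rather than the PHP used in the sample page. It assumes a WSGI application sitting behind the same protected Apache location and that the email claim arrives either as the OIDC_CLAIM_email environment variable or as the matching request header. The allowlist and function names are hypothetical.

```python
# Hypothetical allowlist of people permitted to use the application.
ALLOWED_EMAILS = {"lisa@example.com"}


def authenticated_email(environ: dict):
    """Return the email claim passed along by the web server, if any.

    Assumption: the claim shows up as an environment variable, or as a
    request header (which WSGI exposes with an HTTP_ prefix).
    """
    return environ.get("OIDC_CLAIM_email") or environ.get("HTTP_OIDC_CLAIM_EMAIL")


def app(environ, start_response):
    """Authentication already happened upstream; this only authorizes."""
    email = authenticated_email(environ)
    if email is None:
        status, body = "401 Unauthorized", b"No authenticated user\n"
    elif email.lower() not in ALLOWED_EMAILS:
        status, body = "403 Forbidden", b"Not authorized\n"
    else:
        status, body = "200 OK", f"Hello, {email}\n".encode()
    headers = [
        ("Content-Type", "text/plain; charset=utf-8"),
        ("Content-Length", str(len(body))),
    ]
    start_response(status, headers)
    return [body]
```

This only makes sense when the application is reachable solely through the protected location. If users could reach it directly, they could supply that header themselves.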

openidc.conf content:

LogLevel auth_openidc:debug

LoadModule auth_openidc_module /usr/lib/apache2/modules/mod_auth_openidc.so

OIDCSSLValidateServer On

OIDCProviderMetadataURL https://accounts.google.com/.well-known/openid-configuration

OIDCClientID uuid-thing.apps.googleusercontent.com

OIDCClientSecret uuid-thingU4W

OIDCCryptoPassphrase S0m3S3cr3tPhrA53

OIDCRedirectURI https://www.rushworth.us/protected

OIDCAuthNHeader X-LJR-AuthedUser

OIDCScope "openid email profile"

<Location /protected>

AuthType openid-connect

Require valid-user

</Location>

OIDCOAuthSSLValidateServer On

OIDCOAuthRemoteUserClaim Username