In Object Mode, select the object you want to repair. In the Python console, run:

import bpy

bpy.context.object.data.validate(verbose=True)
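If you want a slightly more defensive version of that one-liner, here is a minimal sketch. The helper name `validate_selected_mesh` is made up for illustration; it assumes the object passed in is whatever `bpy.context.object` returns, and it relies on `Mesh.validate()` returning `True` when it had to correct something.

```python
def validate_selected_mesh(obj, verbose=True):
    """Run Mesh.validate() on obj and report whether anything was corrected.

    `obj` is expected to be a Blender object such as bpy.context.object.
    (Hypothetical helper name, not part of Blender's API.)
    """
    if obj is None or getattr(obj, "type", None) != "MESH":
        raise ValueError("Select a mesh object first")
    # validate() returns True when invalid geometry was corrected or removed
    changed = obj.data.validate(verbose=verbose)
    if changed:
        # Refresh the mesh so the viewport picks up the corrections
        obj.data.update()
    return changed

# Inside Blender's Python console:
#   import bpy
#   validate_selected_mesh(bpy.context.object)
```

With `verbose=True`, the details of what was fixed are printed to the system console rather than returned.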

In object mode, select the object you want to repair. In the python console, run:

import bpy

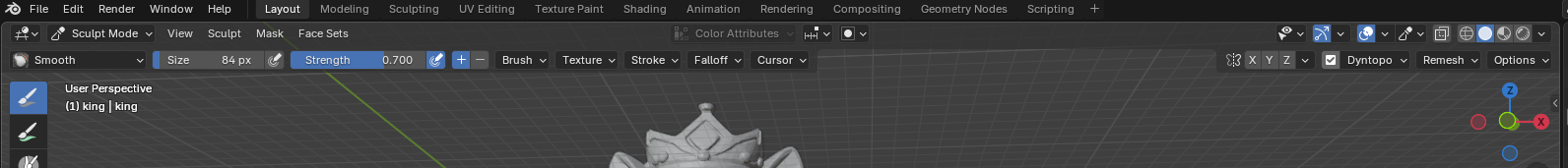

bpy.context.object.data.validate(verbose=True)I’ve got a king! I intended to start with the king, but then realized it made more sense to make the pawns — kittens — first and then increase the size and add decorations for the other pieces. Instead of first, the kitten king was the last one made. Blender has some cool “brushes” for creating folds and gathers in fabric.

I hand-sculpted bases for my chess set before realizing that I wanted them to be identical. So I wanted to “cut” the hand-sculpted base off of each figure and replace it with a programmatic one. Except I kept getting this error using box trim: “Not supported in dynamic topology mode.”

Switch to a drawing brush and uncheck the Dynamic Topology box.

Then switch back to box trim and it actually trims.

And then, of course, you need to remember to turn dynamic topology back on for the drawing tools to draw.
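The same off-and-on toggle can probably be scripted from the Python console. This is a rough sketch, not something taken from the steps above: `set_dyntopo` is a made-up helper name, and it assumes you are already in Sculpt Mode so that `bpy.context.sculpt_object` is set and the `sculpt.dynamic_topology_toggle` operator is allowed to run.

```python
def set_dyntopo(bpy, enabled):
    """Switch dynamic topology on or off for the object being sculpted.

    `bpy` is Blender's Python module, passed in explicitly.
    Returns True if a toggle happened, False if the state already matched.
    (Hypothetical helper name, not part of Blender's API.)
    """
    obj = bpy.context.sculpt_object
    if obj is None:
        raise RuntimeError("Enter Sculpt Mode first")
    if obj.use_dynamic_topology_sculpting == enabled:
        return False  # already in the requested state
    # The operator flips the current state, so only call it when needed
    bpy.ops.sculpt.dynamic_topology_toggle()
    return True

# Inside Blender, while sculpting:
#   import bpy
#   set_dyntopo(bpy, False)   # before box trim
#   set_dyntopo(bpy, True)    # back on for the drawing brushes
```

Checking the current state first matters because the operator is a toggle; calling it blindly would turn Dyntopo back on if it was already off.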

There is a one-line DOS command to take all files with a specific extension and change them to a different extension. In my case, I had a bunch of p12 files that I wanted to be pfx so they’d open magically without creating a new association.

for %f in (*.p12) do ren "%f" "%~nf.pfx"
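If you would rather not do this at the command prompt, the same rename can be sketched in Python with `pathlib`. The function name `change_extension` and its defaults are my own invention for illustration. Unlike the one-liner, this version skips a file when the target name already exists instead of reporting an error.

```python
from pathlib import Path

def change_extension(folder, old_ext=".p12", new_ext=".pfx"):
    """Rename every file in `folder` ending in old_ext so it ends in new_ext.

    Returns the list of new paths. Existing targets are left alone.
    (Hypothetical helper, written for this example.)
    """
    renamed = []
    for path in sorted(Path(folder).glob(f"*{old_ext}")):
        if not path.is_file():
            continue  # ignore directories that happen to match
        target = path.with_suffix(new_ext)
        if target.exists():
            continue  # do not overwrite a file that is already there
        path.rename(target)
        renamed.append(target)
    return renamed

# Example: rename everything in the current directory
#   change_extension(".")
```

One note on the one-liner itself: the single `%f` form is for typing at the prompt. Inside a batch file the percent signs have to be doubled (`%%f` and `%%~nf`).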

I copied the pawn and grew her a little. Added a crown and necklace … voila, a kitten queen! I’ve been using the smooth brush a lot to even out the surface. I’m curious how these will 3D print because the model is made up of a lot of little triangles.

421,132 to be exact. As I’ve discovered, you can configure the viewport to add data in overlays. Statistics is a nice one!
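The triangle count can also be read from the Python console instead of the overlay. This is a small sketch with a made-up helper name, `triangle_count`. It assumes it is handed the object's mesh data (for example `bpy.context.object.data`) and counts the triangles Blender gets after triangulating every face. The number may not match the overlay exactly if the object has modifiers, since this looks at the base mesh only.

```python
def triangle_count(mesh):
    """Return the number of triangles in a Blender mesh datablock.

    Quads and n-gons are counted as the triangles they tessellate into.
    (Hypothetical helper name, not part of Blender's API.)
    """
    # Build or refresh the triangulated view of the mesh's faces
    mesh.calc_loop_triangles()
    return len(mesh.loop_triangles)

# Inside Blender, in Object Mode with the model selected:
#   import bpy
#   triangle_count(bpy.context.object.data)
```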