A long time ago, before the company I work for paid for an external SSL certificate management platform that does nice things like clue you in to the fact your cert is expiring tomorrow night, we had an outage due to an expired certificate. One. I put together a Perl script that parsed output from the openssl client and proactively alerted us to any pending expiration events.

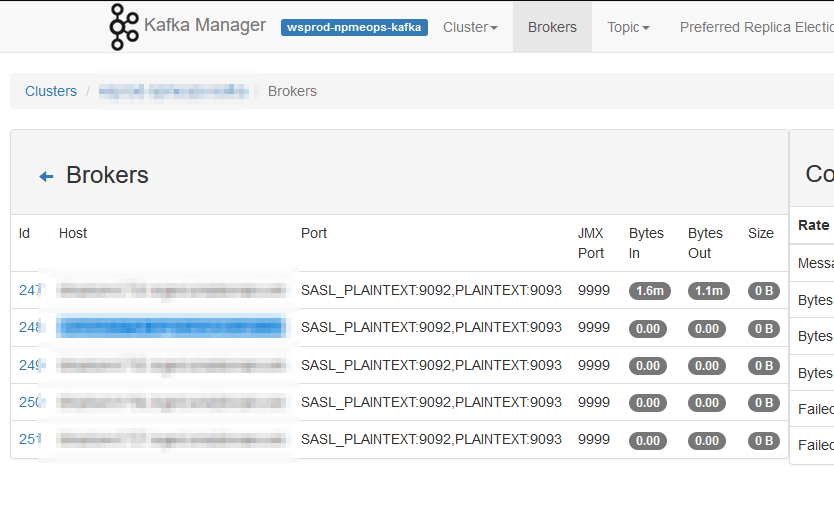

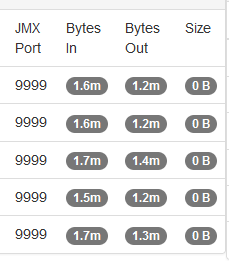

That script went away quite some time ago, although I still have a copy running at home that ensures our SMTP and web server certs are current. This morning, though, our K8s environment fell over due to expired certificates. Digging into it, the certs are in the management platform (my first guess was self-signed certificates that wouldn’t have been included in the pending expiry notices), but the notices were not delivered to those of us who actually manage the servers. Luckily it was our dev K8s environment, and we now know the prod one will expire in a week or so.

Still, I figured it was a good impetus to resurrect the old script. Unfortunately, none of the modules I used for date calculation were installed on our script server, which seemed like a hint that I should rewrite the script in Python. So … here is a quick Python script that gets certificates from hosts and calculates how long until each certificate expires. Add an “if” statement and a notification function, and we shouldn’t come in to failed environments needing certificate renewals.

    from cryptography import x509
    from cryptography.hazmat.backends import default_backend
    import socket
    import ssl
    from datetime import datetime

    # Dictionary of host:port combinations to check for expiry
    dictHostsToCheck = {
        "tableau.example.com": 443,      # Tableau
        "kibana.example.com": 5601,      # ELK Kibana
        "elkmaster.example.com": 9200,   # ELK Master
        "kafka.example.com": 9093,       # Kafka server
    }

    for strHostName in dictHostsToCheck:
        iPort = dictHostsToCheck[strHostName]
        # Naive UTC timestamp, to match the naive UTC value of not_valid_after.
        # On cryptography 42+, not_valid_after_utc with datetime.now(timezone.utc)
        # is the non-deprecated pairing.
        datetimeNow = datetime.utcnow()

        # Create default context
        context = ssl.create_default_context()
        # Do not verify cert chain or hostname, so we can always retrieve the
        # certificate, even one that is expired or self-signed
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE

        with socket.create_connection((strHostName, iPort)) as sock:
            with context.wrap_socket(sock, server_hostname=strHostName) as ssock:
                # True returns the certificate in binary (DER) form
                objDERCert = ssock.getpeercert(True)
                objPEMCert = ssl.DER_cert_to_PEM_cert(objDERCert)
                objCertificate = x509.load_pem_x509_certificate(str.encode(objPEMCert), backend=default_backend())
                print(f"{strHostName}\t{iPort}\t{objCertificate.not_valid_after}\t{(objCertificate.not_valid_after - datetimeNow).days} days")
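
The “if” statement and notification function mentioned above could look something like the sketch below. It is not part of the original script: the 30-day threshold, the function names, and the print-based `notify` are all placeholders I made up for illustration, and a real version would presumably send an email or post to a chat channel instead.

```python
from datetime import datetime

# Hypothetical threshold: alert when fewer than this many days remain
iWarningDays = 30


def days_until_expiry(datetimeNotAfter, datetimeNow):
    """Whole days from datetimeNow until expiry (negative if already expired)."""
    return (datetimeNotAfter - datetimeNow).days


def needs_renewal(datetimeNotAfter, datetimeNow, iThreshold=iWarningDays):
    """True when the certificate expires in fewer than iThreshold days."""
    return days_until_expiry(datetimeNotAfter, datetimeNow) < iThreshold


def notify(strHostName, iPort, iDaysLeft):
    """Placeholder notification: prints and returns the alert text.

    Swap the print for an email, chat webhook, or ticket as needed.
    """
    strMessage = f"WARNING: certificate for {strHostName}:{iPort} expires in {iDaysLeft} days"
    print(strMessage)
    return strMessage
```

Inside the loop, once `objCertificate` has been loaded, the check would be roughly: `if needs_renewal(objCertificate.not_valid_after, datetimeNow): notify(strHostName, iPort, days_until_expiry(objCertificate.not_valid_after, datetimeNow))`. Both datetimes need to be of the same kind (both naive UTC, as in the script, or both timezone-aware), otherwise the subtraction raises a `TypeError`.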