I made garlic kale pork burgers for dinner tonight — cut up pork loin & ground it with garlic scapes, salt, ground black pepper, and kale. Then cooked patties and topped with kale sauteed in butter with more pureed garlic scapes. Served on an everything bagel, and they were very good.


Finding Active Directory Global Catalog Servers

… or domain controllers, or kerberos, or …

Back when I managed the Active Directory environment, I’d have developers ask where they should direct traffic. Since it was all LDAP, I did the right thing and got a load-balanced virtual IP built out so they had “ad.example.com” on port 636, and all of my DCs had SANs on their SSL certs so ad.example.com was valid. But! It’s not always that easy, especially if you are looking for something like the global catalog servers or no such VIP exists. Luckily, the fact that people are logging into the domain tells you that you can ask the internal DNS servers for all of this good info, because domain controllers register service records in DNS.

These are the registrations for all servers regardless of associated site.

_ldap: The LDAP service record is used for locating domain controllers that provide directory services over LDAP.

_ldap._tcp.example.com

_kerberos: This record is used for locating domain controllers that provide Kerberos authentication services.

_kerberos._tcp.example.com

_kpasswd: The kpasswd service record is used for finding domain controllers that can handle Kerberos principal password changes.

_kpasswd._tcp.example.com

_gc: The Global Catalog service record is used for locating domain controllers that have the Global Catalog role.

_gc._tcp.example.com

_ldap._tcp.pdc: This record identifies which domain controller holds the PDC emulator operations master role.

_ldap._tcp.pdc._msdcs.example.com

You can also find global catalog, Kerberos, and LDAP service records registered in individual sites. For all servers specific to a site, you need to use _tcp.SiteName._sites.example.com instead.

For example, to return all GCs specific to the site named “SiteXYZ”:

dig _gc._tcp.SiteXYZ._sites.example.com SRV

If you are using Windows, run nslookup, enter “set type=SRV” to return service records, and then query for the specific service record you want to view:

C:\Users\lisa>nslookup

Default Server: dns123.example.com

Address: 10.237.123.123

> set type=SRV

> _gc._tcp.SiteXYZ._sites.example.com

Server: dns123.example.com

Address: 10.23.123.123

_gc._tcp.SiteXYZ._sites.example.com SRV service location:

priority = 0

weight = 100

port = 3268

svr hostname = addc032.example.com
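
The record names above follow a predictable pattern, so they can be generated rather than typed. The following is a minimal Python sketch, not part of the original tooling: the function name `srv_record_names` and its dictionary keys are made up for illustration, and it only builds the names described in this post (it does not query DNS). Following the post, the site-specific form covers only the LDAP, Kerberos, and global catalog records.

```python
def srv_record_names(domain, site=None):
    """Build the SRV record names described above for a domain.

    With a site name, only the LDAP, Kerberos, and global catalog records
    are returned, using the _tcp.SiteName._sites.domain form.
    """
    site_capable = {
        "ldap": "_ldap._tcp",
        "kerberos": "_kerberos._tcp",
        "gc": "_gc._tcp",
    }
    if site is not None:
        return {
            key: f"{prefix}.{site}._sites.{domain}"
            for key, prefix in site_capable.items()
        }

    # Domain-wide registrations, regardless of site
    names = {key: f"{prefix}.{domain}" for key, prefix in site_capable.items()}
    names["kpasswd"] = f"_kpasswd._tcp.{domain}"
    names["pdc"] = f"_ldap._tcp.pdc._msdcs.{domain}"
    return names


if __name__ == "__main__":
    # Print a dig command for each record so it can be pasted into a shell
    for name in srv_record_names("example.com").values():
        print(f"dig {name} SRV")
    for name in srv_record_names("example.com", site="SiteXYZ").values():
        print(f"dig {name} SRV")
```

Swap in your own domain and site name; the output is just a list of dig commands like the one shown earlier.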

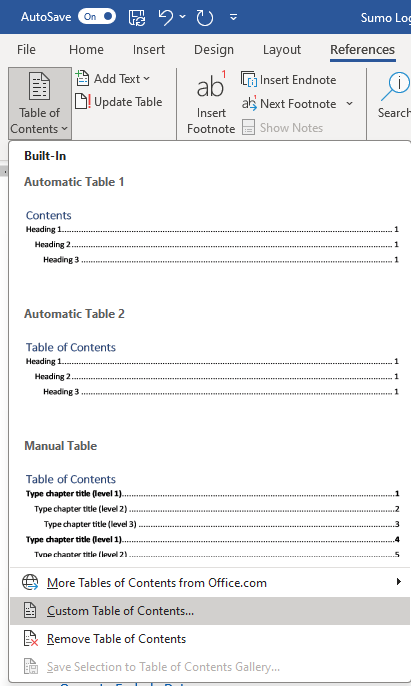

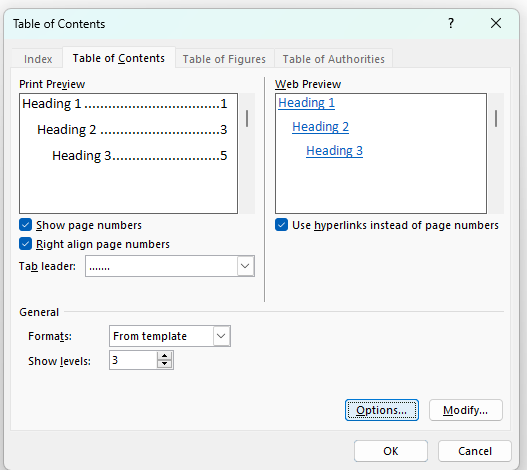

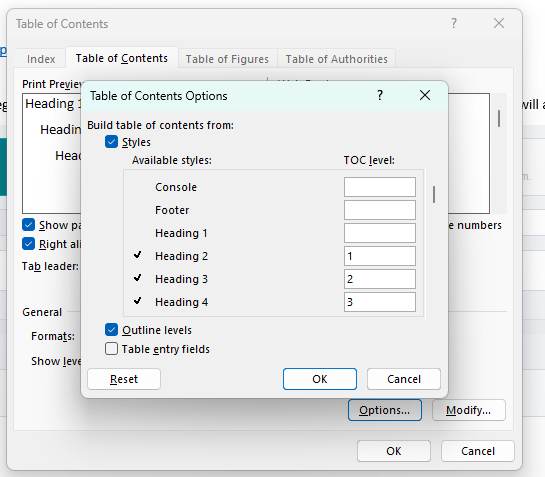

Microsoft Word – Custom Table of Contents to Omit Heading 1

I know I could sort this by adjusting which “style” I use when I write documents … but I like Heading 1 as the title, Heading 2 as a paragraph title, etc. To create a table of contents below the title line that doesn’t start with the title line and then have everything subordinate to that top item, create a custom table of contents:

Select “Options”

And simply re-map the styles to TOC levels – Heading 1 doesn’t get a level. Heading 2 becomes 1, Heading 3 becomes 2, and Heading 4 becomes 3.

Voilà – now I’ve got a bunch of “top level” items from Heading 2.

Sumo Logic: Creating Roles via API

This script creates very basic roles with no extra capabilities and restricts the role to viewing only the indicated source category’s data.

################################################################################
# This script reads an Excel file containing role data, then uses the Sumo Logic
# API to create roles based on the data. It checks each row for a role name and
# uses the source category to set data filters. The script requires a config.py
# file with access credentials.
################################################################################
import pandas as pd
import requests
import json
from config import access_id, access_key  # Import credentials from config.py

# Path to Excel file
excel_file_path = 'NewRoles.xlsx'

# Base URL for Sumo Logic API
base_url = 'https://api.sumologic.com/api/v1'

################################################################################
# Function to create a new role using the Sumo Logic API.
#
# Args:
#     role_name (str): The name of the role to create.
#     role_description (str): The description of the role.
#     source_category (str): The source category to restrict the role to.
#
# Returns:
#     None. Prints the status of the API call.
################################################################################
def create_role(role_name, role_description, source_category):
    url = f'{base_url}/roles'

    # Role payload
    data_filter = f'_sourceCategory={source_category}'
    payload = {
        'name': role_name,
        'description': role_description,
        'logAnalyticsDataFilter': data_filter,
        'auditDataFilter': data_filter,
        'securityDataFilter': data_filter
    }

    # Headers for the request
    headers = {
        'Content-Type': 'application/json',
        'Accept': 'application/json'
    }

    # Debugging line
    print(f"Attempting to create role: '{role_name}' with description: '{role_description}' and filter: '{data_filter}'")

    # Make the POST request to create a new role
    response = requests.post(url, auth=(access_id, access_key), headers=headers, data=json.dumps(payload))

    # Check the response
    if response.status_code == 201:
        print(f'Role {role_name} created successfully.')
    else:
        print(f'Failed to create role {role_name}. Status Code: {response.status_code}')
        print('Response:', response.json())

################################################################################
# Reads an Excel file and processes each row to extract role information and
# create roles using the Sumo Logic API.
#
# Args:
#     file_path (str): The path to the Excel file containing role data.
#
# Returns:
#     None. Processes the file and attempts to create roles based on the data.
################################################################################
def process_excel(file_path):
    # Load the spreadsheet
    df = pd.read_excel(file_path, engine='openpyxl')

    # Print column names to help debug and find correct ones
    print("Columns found in Excel:", df.columns)

    # Iterate over each row in the DataFrame
    for index, row in df.iterrows():
        role_name = row['Role Name']  # Correct column name for role name
        source_category = row['Source Category']  # Correct column name for source category to which role is restricted

        # Only create a role if the role name is not null
        if pd.notnull(role_name):
            role_description = f'Provides access to source category {source_category}'
            create_role(role_name, role_description, source_category)

# Process the Excel file
process_excel(excel_file_path)
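
Before pointing a script like this at a real tenant, it can help to preview what would be sent. The sketch below is a dry run under stated assumptions: the function name `build_role_payloads` and the sample rows are hypothetical, rows are plain dictionaries rather than pandas rows, and the payload fields simply mirror the ones used in the script above. Nothing is sent to the API.

```python
import json


def build_role_payloads(rows):
    """Return one payload dict per row that has a usable role name."""
    payloads = []
    for row in rows:
        role_name = row.get("Role Name")
        source_category = row.get("Source Category")

        # Skip rows without a role name, similar to the pd.notnull() check
        if role_name is None or str(role_name).strip() == "":
            continue

        data_filter = f"_sourceCategory={source_category}"
        payloads.append({
            "name": role_name,
            "description": f"Provides access to source category {source_category}",
            "logAnalyticsDataFilter": data_filter,
            "auditDataFilter": data_filter,
            "securityDataFilter": data_filter,
        })
    return payloads


if __name__ == "__main__":
    # Hypothetical rows standing in for the spreadsheet contents
    sample_rows = [
        {"Role Name": "app1_viewers", "Source Category": "prod/app1"},
        {"Role Name": None, "Source Category": "prod/orphan"},
    ]
    for payload in build_role_payloads(sample_rows):
        print(json.dumps(payload, indent=2))
```

Only the first sample row produces a payload, because the second has no role name.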

Excel Formula – Text Before Character

The following formula prints just the substring found before the first dash from the data in cell A2:

=LEFT(A2, FIND("-", A2) - 1)
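
For comparison, here is roughly the same logic as a minimal Python sketch (the function name `text_before` is made up for illustration). FIND is 1-based and the formula subtracts one, which lines up with slicing up to Python's 0-based index. When the cell has no dash, the Excel formula returns a #VALUE! error; the sketch raises ValueError in that case.

```python
def text_before(text, delimiter="-"):
    """Return the part of text that precedes the first delimiter."""
    # str.index raises ValueError when the delimiter is absent
    position = text.index(delimiter)
    return text[:position]


if __name__ == "__main__":
    print(text_before("ABC-123-XYZ"))
```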

Parsing HAR File

I am working with a new application that doesn’t seem to like when a person has multiple roles assigned to them … however, I first need to prove that is the problem. Luckily, your browser gets the SAML response and you can actually see the Role entitlements that are being sent. Just need to parse them out of the big 80 meg file that a simple “go here and log on” generates!

To gather data to be parsed, open the Dev Tools for the browser tab. Click the settings gear icon and select “Persist Logs”. Reproduce the scenario – navigate to the site, log in. Then save the dev tools session as a HAR file. The following Python script will analyze the file, extract any SAML response tokens, and print them in a human-readable format.

################################################################################
# This script reads a HAR file, identifies HTTP requests and responses containing
# SAML tokens, and decodes "SAMLResponse" values.
#
# The decoded SAML assertions are printed out for inspection in a readable format.
#
# Usage:
#     - Update the str_har_file_path with your HAR file
################################################################################
# Editable Variables
str_har_file_path = 'SumoLogin.har'

# Imports
import json
import base64
import urllib.parse
from xml.dom.minidom import parseString

################################################################################
# This function decodes SAML responses found within the HAR capture
# Args:
#     saml_response_encoded(str): URL encoded, base-64 encoded SAML response
# Returns:
#     string: decoded string
################################################################################
def decode_saml_response(saml_response_encoded):
    url_decoded = urllib.parse.unquote(saml_response_encoded)
    base64_decoded = base64.b64decode(url_decoded).decode('utf-8')
    return base64_decoded

################################################################################
# This function finds and decodes SAML tokens from HAR entries.
#
# Args:
#     entries(list): A list of HTTP request and response entries from a HAR file.
#
# Returns:
#     list: List of decoded SAML assertion response strings.
################################################################################
def find_saml_tokens(entries):
    saml_tokens = []

    for entry in entries:
        request = entry['request']
        response = entry['response']

        # Check form data posted by the browser
        if request['method'] == 'POST':
            request_body = request.get('postData', {}).get('text', '')
            if 'SAMLResponse=' in request_body:
                saml_response_encoded = request_body.split('SAMLResponse=')[1].split('&')[0]
                saml_tokens.append(decode_saml_response(saml_response_encoded))

        # Check the response body, decoding it first if the HAR stored it as base64
        response_body = response.get('content', {}).get('text', '')
        if response.get('content', {}).get('encoding') == 'base64':
            response_body = base64.b64decode(response_body).decode('utf-8', errors='ignore')
        if 'SAMLResponse=' in response_body:
            saml_response_encoded = response_body.split('SAMLResponse=')[1].split('&')[0]
            saml_tokens.append(decode_saml_response(saml_response_encoded))

    return saml_tokens

################################################################################
# This function parses an XML string and returns it formatted across
# multiple lines with hierarchical indentation
#
# Args:
#     xml_string (str): The XML string to be pretty-printed.
#
# Returns:
#     str: A pretty-printed version of the XML string.
################################################################################
def pretty_print_xml(xml_string):
    dom = parseString(xml_string)
    return dom.toprettyxml(indent=" ")

# Load HAR file with UTF-8 encoding
with open(str_har_file_path, 'r', encoding='utf-8') as file:
    har_data = json.load(file)

entries = har_data['log']['entries']
saml_tokens = find_saml_tokens(entries)

for token in saml_tokens:
    print("Decoded SAML Token:")
    print(pretty_print_xml(token))
    print('-' * 80)
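
Since the goal is to see which roles are being sent, the decoded XML can be narrowed down to just the relevant attributes. This is a minimal sketch, assuming the roles arrive as SAML 2.0 `Attribute` elements whose `Name` contains the word "role"; the actual attribute name depends on your identity provider, and the function name `extract_attribute_values` and the sample XML are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Standard SAML 2.0 assertion namespace
SAML_ASSERTION_NS = "urn:oasis:names:tc:SAML:2.0:assertion"


def extract_attribute_values(saml_xml, name_fragment="role"):
    """Map each matching attribute name to its list of values."""
    root = ET.fromstring(saml_xml)
    results = {}
    for attribute in root.iter(f"{{{SAML_ASSERTION_NS}}}Attribute"):
        name = attribute.get("Name", "")
        # Case-insensitive match; "role" is an assumption about the IdP
        if name_fragment.lower() not in name.lower():
            continue
        values = [
            value.text or ""
            for value in attribute.findall(f"{{{SAML_ASSERTION_NS}}}AttributeValue")
        ]
        results.setdefault(name, []).extend(values)
    return results


if __name__ == "__main__":
    # Hypothetical, heavily trimmed response for demonstration only
    sample = (
        '<samlp:Response xmlns:samlp="urn:oasis:names:tc:SAML:2.0:protocol" '
        'xmlns:saml="urn:oasis:names:tc:SAML:2.0:assertion">'
        "<saml:Assertion><saml:AttributeStatement>"
        '<saml:Attribute Name="Role">'
        "<saml:AttributeValue>Analyst</saml:AttributeValue>"
        "<saml:AttributeValue>Admin</saml:AttributeValue>"
        "</saml:Attribute>"
        '<saml:Attribute Name="Email">'
        "<saml:AttributeValue>user@example.com</saml:AttributeValue>"
        "</saml:Attribute>"
        "</saml:AttributeStatement></saml:Assertion></samlp:Response>"
    )
    print(extract_attribute_values(sample))
```

In the script above, each decoded token could be passed to this function in place of (or alongside) the pretty-printed output.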

Exchange 2013 DNS Oddity

Not that anyone hosts their own Exchange server anymore … but we had a pretty strange issue pop up. Exchange has been, for a dozen years, configured to use the system DNS servers. The system can still use DNS just fine … but the Exchange transport failed to query DNS and just queued messages.

PS C:\scripts> Get-Queue -Identity "EXCHANGE01\3" | Format-List *

DeliveryType : SmtpDeliveryToMailbox

NextHopDomain : mailbox database 1440585757

TlsDomain :

NextHopConnector : 1cdb1e55-a129-46bc-84ef-2ddae27b808c

Status : Retry

MessageCount : 7

LastError : 451 4.4.0 DNS query failed. The error was: DNS query failed with error ErrorRetry

RetryCount : 2

LastRetryTime : 1/4/2025 12:20:04 AM

NextRetryTime : 1/4/2025 12:25:04 AM

DeferredMessageCount : 0

LockedMessageCount : 0

MessageCountsPerPriority : {0, 0, 0, 0}

DeferredMessageCountsPerPriority : {0, 7, 0, 0}

RiskLevel : Normal

OutboundIPPool : 0

NextHopCategory : Internal

IncomingRate : 0

OutgoingRate : 0

Velocity : 0

QueueIdentity : EXCHANGE01\3

PriorityDescriptions : {High, Normal, Low, None}

Identity : EXCHANGE01\3

IsValid : True

ObjectState : New

Yup, still configured to use the SYSTEM’s DNS:

PS C:\scripts> Get-TransportService | Select-Object Name, *DNS*

Name : EXCHANGE01

ExternalDNSAdapterEnabled : True

ExternalDNSAdapterGuid : 2fdebb30-c710-49c9-89fb-61455aa09f62

ExternalDNSProtocolOption : Any

ExternalDNSServers : {}

InternalDNSAdapterEnabled : True

InternalDNSAdapterGuid : 2fdebb30-c710-49c9-89fb-61455aa09f62

InternalDNSProtocolOption : Any

InternalDNSServers : {}

DnsLogMaxAge : 7.00:00:00

DnsLogMaxDirectorySize : 200 MB (209,715,200 bytes)

DnsLogMaxFileSize : 10 MB (10,485,760 bytes)

DnsLogPath :

DnsLogEnabled : True

I had to hard-code the DNS servers to the transport and restart the service:

PS C:\scripts> Set-TransportService EXCHANGE01 -InternalDNSServers 10.5.5.85,10.5.5.55,10.5.5.1

PS C:\scripts> Set-TransportService EXCHANGE01 -ExternalDNSServers 10.5.5.85,10.5.5.55,10.5.5.1

PS C:\scripts> Restart-Service MSExchangeTransport

WARNING: Waiting for service 'Microsoft Exchange Transport (MSExchangeTransport)' to stop...

WARNING: Waiting for service 'Microsoft Exchange Transport (MSExchangeTransport)' to start...

PS C:\scripts> Get-TransportService | Select-Object Name, InternalDNSServers, ExternalDNSServers

Name InternalDNSServers ExternalDNSServers

---- ------------------ ------------------

EXCHANGE01 {10.5.5.1, 10.5.5.55, 10.5.5.85} {10.5.5.85, 10.5.5.55, 10.5.5.1}

Voilà, messages started popping into my mailbox.

Fedora 41, KVM, QEMU, and the Really (REALLY!) Bad Performance

Ever since we upgraded to Fedora 41, we have been having horrible problems with our Exchange server. It will drop off the network for half an hour at a time. I cannot even ping the VM from the physical server. Some network captures show there’s no response to the ARP request.

Evidently, the VM configuration contains a machine type that doesn’t automatically update. We are using PC-Q35 as the chipset … and 4.1 was the version when we built our VMs. This version, however, has been deprecated, which you can see by asking virsh what capabilities it has:

2025-01-02 23:17:26 [lisa@linux01 /var/log/libvirt/qemu/]# virsh capabilities | grep pc-q35

<machine maxCpus='288' deprecated='yes'>pc-q35-5.2</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.2</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.7</machine>

<machine maxCpus='4096'>pc-q35-9.1</machine>

<machine canonical='pc-q35-9.1' maxCpus='4096'>q35</machine>

<machine maxCpus='288'>pc-q35-7.1</machine>

<machine maxCpus='1024'>pc-q35-8.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-6.1</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.4</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.10</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-5.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.9</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-3.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.1</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.6</machine>

<machine maxCpus='4096'>pc-q35-9.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.12</machine>

<machine maxCpus='288'>pc-q35-7.0</machine>

<machine maxCpus='288'>pc-q35-8.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-6.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.0.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-5.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.8</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-3.0</machine>

<machine maxCpus='288'>pc-q35-7.2</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.0</machine>

<machine maxCpus='1024'>pc-q35-8.2</machine>

<machine maxCpus='288'>pc-q35-6.2</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.5</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.11</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-5.2</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.2</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.7</machine>

<machine maxCpus='4096'>pc-q35-9.1</machine>

<machine canonical='pc-q35-9.1' maxCpus='4096'>q35</machine>

<machine maxCpus='288'>pc-q35-7.1</machine>

<machine maxCpus='1024'>pc-q35-8.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-6.1</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.4</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.10</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-5.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.9</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-3.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.1</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.6</machine>

<machine maxCpus='4096'>pc-q35-9.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.12</machine>

<machine maxCpus='288'>pc-q35-7.0</machine>

<machine maxCpus='288'>pc-q35-8.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-6.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.0.1</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-5.0</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.8</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-3.0</machine>

<machine maxCpus='288'>pc-q35-7.2</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-4.0</machine>

<machine maxCpus='1024'>pc-q35-8.2</machine>

<machine maxCpus='288'>pc-q35-6.2</machine>

<machine maxCpus='255' deprecated='yes'>pc-q35-2.5</machine>

<machine maxCpus='288' deprecated='yes'>pc-q35-2.11</machine>

Or filtering out the deprecated ones …

2025-01-02 23:16:50 [lisa@linux01 /var/log/libvirt/qemu/]# virsh capabilities | grep pc-q35 | grep -v "deprecated='yes'"

<machine maxCpus='4096'>pc-q35-9.1</machine>

<machine canonical='pc-q35-9.1' maxCpus='4096'>q35</machine>

<machine maxCpus='288'>pc-q35-7.1</machine>

<machine maxCpus='1024'>pc-q35-8.1</machine>

<machine maxCpus='4096'>pc-q35-9.0</machine>

<machine maxCpus='288'>pc-q35-7.0</machine>

<machine maxCpus='288'>pc-q35-8.0</machine>

<machine maxCpus='288'>pc-q35-7.2</machine>

<machine maxCpus='1024'>pc-q35-8.2</machine>

<machine maxCpus='288'>pc-q35-6.2</machine>

<machine maxCpus='4096'>pc-q35-9.1</machine>

<machine canonical='pc-q35-9.1' maxCpus='4096'>q35</machine>

<machine maxCpus='288'>pc-q35-7.1</machine>

<machine maxCpus='1024'>pc-q35-8.1</machine>

<machine maxCpus='4096'>pc-q35-9.0</machine>

<machine maxCpus='288'>pc-q35-7.0</machine>

<machine maxCpus='288'>pc-q35-8.0</machine>

<machine maxCpus='288'>pc-q35-7.2</machine>

<machine maxCpus='1024'>pc-q35-8.2</machine>

<machine maxCpus='288'>pc-q35-6.2</machine>
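
The same filtering can be done on the XML itself, which also removes the duplicate entries and sorts the versions. This is a minimal sketch, assuming the capabilities XML has already been captured as a string (for example, by saving the output of virsh capabilities); the function name `supported_machine_types` and the trimmed sample document are made up for illustration.

```python
import xml.etree.ElementTree as ET


def supported_machine_types(capabilities_xml, prefix="pc-q35"):
    """Return non-deprecated machine types with the prefix, oldest first."""
    root = ET.fromstring(capabilities_xml)
    names = set()  # a set drops the entries repeated in the output
    for machine in root.iter("machine"):
        name = (machine.text or "").strip()
        if name.startswith(prefix) and machine.get("deprecated") != "yes":
            names.add(name)

    def version_key(name):
        # "pc-q35-9.1" -> (9, 1) so that versions sort numerically
        return tuple(int(part) for part in name[len(prefix) + 1:].split("."))

    return sorted(names, key=version_key)


if __name__ == "__main__":
    # Trimmed sample in the shape of the output shown above
    sample = """
    <capabilities><guest><arch name='x86_64'>
      <machine maxCpus='288' deprecated='yes'>pc-q35-4.1</machine>
      <machine maxCpus='4096'>pc-q35-9.1</machine>
      <machine canonical='pc-q35-9.1' maxCpus='4096'>q35</machine>
      <machine maxCpus='288'>pc-q35-7.1</machine>
      <machine maxCpus='288'>pc-q35-6.2</machine>
      <machine maxCpus='288'>pc-q35-7.1</machine>
    </arch></guest></capabilities>
    """
    print(supported_machine_types(sample))
```

The `q35` alias is skipped because it does not start with the `pc-q35` prefix; its `canonical` attribute shows which versioned type it currently points to.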

So I shut down my Exchange server again (again, again), used “virsh edit exchange01”, and changed

<os>

<type arch='x86_64' machine='pc-q35-4.1'>hvm</type>

<boot dev='hd'/>

</os>

to

<os>

<type arch='x86_64' machine='pc-q35-7.1'>hvm</type>

</os>

And started my VM. It took about an hour to boot, and it absolutely hogged the physical server’s disk resources. It was the top listing in iotop -o.

But then … all of the VMs dropped off of iotop. My attempt to log into the server via the console had gone through, and the session was waiting for me. My web mail, which had failed to load all day, was showing my e-mail. And messages that had been queued for delivery had all come through.

The load on our physical server dropped from 30 to 1. Everything became responsive. And Exchange has been online for a good thirty minutes now.

Sumo Logic: Validating Collector Data Sources via API

This script is an example of using the Sumo Logic API to retrieve collector details. This particular script looks for Linux servers and validates that each collector has the desired log sources defined. Those that do not contain all desired sources are flagged for further investigation.

import requests
from requests.auth import HTTPBasicAuth
import pandas as pd
from config import access_id, access_key  # Import your credentials from config.py

# Base URL for Sumo Logic API
base_url = 'https://api.sumologic.com/api/v1'

def get_all_collectors():
    """Retrieve all collectors with pagination support."""
    collectors = []
    limit = 1000  # Adjust as needed; check API docs for max limit
    offset = 0

    while True:
        url = f'{base_url}/collectors?limit={limit}&offset={offset}'
        response = requests.get(url, auth=HTTPBasicAuth(access_id, access_key))
        if response.status_code == 200:
            result = response.json()
            collectors.extend(result.get('collectors', []))
            if len(result.get('collectors', [])) < limit:
                break  # Exit the loop if we received fewer than the limit, meaning it's the last page
            offset += limit
        else:
            print('Error fetching collectors:', response.status_code, response.text)
            break

    return collectors

def get_sources(collector_id):
    """Retrieve sources for a specific collector."""
    url = f'{base_url}/collectors/{collector_id}/sources'
    response = requests.get(url, auth=HTTPBasicAuth(access_id, access_key))
    if response.status_code == 200:
        sources = response.json().get('sources', [])
        # print(f"Log Sources for collector {collector_id}: {sources}")
        return sources
    else:
        print(f'Error fetching sources for collector {collector_id}:', response.status_code, response.text)
        return []

def check_required_logs(sources):
    """Check if the required logs are present in the sources."""
    required_logs = {
        '_security_events': False,
        '_linux_system_events': False,
        'cron_logs': False,
        'dnf_rpm_logs': False
    }

    for source in sources:
        if source['sourceType'] == 'LocalFile':
            name = source.get('name', '')
            for key in required_logs.keys():
                if name.endswith(key):
                    required_logs[key] = True

    # Determine missing logs
    missing_logs = {log: "MISSING" if not present else "" for log, present in required_logs.items()}
    return missing_logs

# Main execution
if __name__ == "__main__":
    collectors = get_all_collectors()
    report_data = []

    for collector in collectors:
        # Check if the collector's osName is 'Linux'
        if collector.get('osName') == 'Linux':
            collector_id = collector['id']
            collector_name = collector['name']
            print(f"Checking Linux Collector: ID: {collector_id}, Name: {collector_name}")

            sources = get_sources(collector_id)
            missing_logs = check_required_logs(sources)
            if any(missing_logs.values()):
                report_entry = {
                    "Collector Name": collector_name,
                    "_security_events": missing_logs['_security_events'],
                    "_linux_system_events": missing_logs['_linux_system_events'],
                    "cron_logs": missing_logs['cron_logs'],
                    "dnf_rpm_logs": missing_logs['dnf_rpm_logs']
                }
                # print(f"Missing logs for collector {collector_name}: {report_entry}")
                report_data.append(report_entry)

    # Create a DataFrame and write to Excel
    df = pd.DataFrame(report_data, columns=[
        "Collector Name", "_security_events", "_linux_system_events", "cron_logs", "dnf_rpm_logs"
    ])

    # Generate the filename with current date and time
    if not df.empty:
        timestamp = pd.Timestamp.now().strftime("%Y%m%d-%H%M")
        output_file = f"{timestamp}-missing_logs_report.xlsx"
        df.to_excel(output_file, index=False)
        print(f"\nData written to {output_file}")
    else:
        print("\nAll collectors have the required logs.")

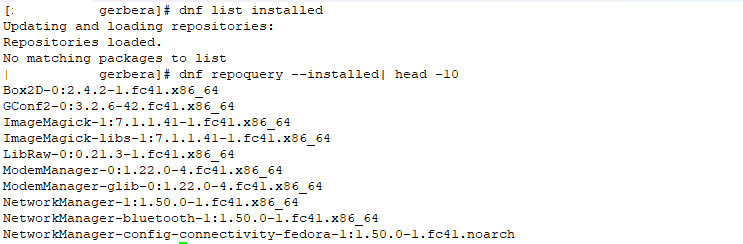

Fedora 41 – Using DNF to List Installed Packages

We upgraded all of our internal servers to Fedora 41 after a power outage yesterday and had a number of issues to resolve: the liblockdev legacy config reverted so OpenHAB could no longer use USB serial devices, the physical server was swapping 11GB of data even though it had 81GB of memory free, and our Gerbera installation requires libspdlog.so.1.12, which was updated to version 1.14 with the Fedora upgrade.

The last issue was more challenging to figure out because evidently DNF is now DNF5 and instead of throwing an error like “hey, new version dude! Use the new syntax” when you use an old command to list what is installed … it just says “No matching packages to list”. Like there are no packages installed? Since I’m using bash, openssh, etc … that’s not true.

Luckily, the new syntax works just fine: dnf repoquery --installed

Also:

dnf5 repoquery --available

dnf5 repoquery --userinstalled