# Run filebeat from the command line and add debugging flags to increase verbosity of output

# -e directs output to STDERR instead of syslog

# -c indicates the config file to use

# -d indicates which debugging items you want -- * for all

/opt/filebeat/filebeat -e -c /opt/filebeat/filebeat.yml -d "*"


Python Logging to Logstash Server

Since some of our filebeat servers are having trouble actually delivering data to logstash, I put together a really quick Python script (using the python-logstash package) that connects to the logstash server and sends a log record. I can then run tcpdump on the logstash server and hopefully see what is going wrong.

import logging

import logstash


strHost = 'logstash.example.com'

iPort = 5048

test_logger = logging.getLogger('python-logstash-logger')

test_logger.setLevel(logging.INFO)

test_logger.addHandler(logstash.TCPLogstashHandler(host=strHost,port=iPort))

test_logger.info('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')

test_logger.warning('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')

test_logger.error('May 22 23:34:13 ABCDOHEFG66SC03 sipd[3863cc60] CRITICAL One or more Dns Servers are currently unreachable!')

Using tcpdump to capture traffic

I like tshark (command line wireshark), but some of our servers don’t have it installed and won’t have it installed. So I’m re-learning tcpdump!

List data from a specific source IP

tcpdump src 10.1.2.3

List data sent to a specific port

tcpdump dst port 5048

List data sent from an entire subnet

tcpdump src net 10.1.2.0/26

Add -X (hex and ASCII) or -A (ASCII only) to print the packet contents instead of just the header summary.

PostgreSQL Logical Replication – Row Filter

Researching something else about logical replication, I came across a commit message about row filtering on logical replication. From the date of the commit, I expect this will be included in PostgreSQL 15.

Adding a WHERE clause after the table name limits the rows that are included in the publication. You could publish only employees in Vermont, or only completed transactions.

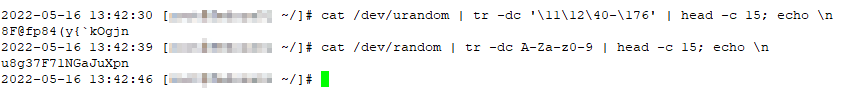
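As a rough sketch of what a row-filtered publication looks like in text form, here are the two examples above built as SQL strings in Python, ready to hand to whatever client you use. Every name here (the publications, tables, and columns) is made up for illustration. The one syntax detail worth noting is that the filter expression goes inside parentheses.

```python
# Hypothetical names throughout: pub_vt_employees, employees, state,
# pub_completed_txn, transactions, and status are illustrations only.

def row_filtered_publication(pub_name: str, table: str, condition: str) -> str:
    """Format a CREATE PUBLICATION statement with a row filter.

    The WHERE expression is wrapped in parentheses, as the syntax requires.
    This only formats text; identifiers are not quoted or validated.
    """
    return f"CREATE PUBLICATION {pub_name} FOR TABLE {table} WHERE ({condition});"


# Publish only employees in Vermont
vermont_sql = row_filtered_publication("pub_vt_employees", "employees", "state = 'VT'")

# Publish only completed transactions
completed_sql = row_filtered_publication(
    "pub_completed_txn", "transactions", "status = 'completed'"
)

print(vermont_sql)
print(completed_sql)
```

Rows that don't match the condition are simply never sent to subscribers of that publication.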

Using urandom to Generate Password

Frequently, I’ll use password generator websites to create some pseudo-random string of characters for system accounts, database replication, etc. But sometimes the Internet isn’t readily available … and you can create a decent password right from the Linux command line using urandom.

If you want pretty much any “normal” character, use tr to strip out everything else. This set keeps tab, newline, and the printable ASCII range from space through tilde:

'\11\12\40-\176'

Or strip anything that isn’t an upper case letter, lower case letter, or digit using:

a-zA-Z0-9

Pass the output to head to grab however many characters you actually want. Voila, a quick password.

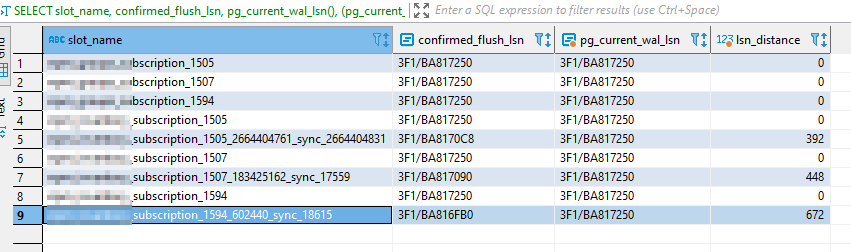
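If Python happens to be handy instead of a shell, the same idea can be sketched like this: read random bytes, throw away anything outside the allowed set, and stop once there are enough characters. The function name and the 64-byte chunk size are my own choices, and the allowed set is assumed to be plain ASCII.

```python
import os
import string

# Same character set as the a-zA-Z0-9 example above
ALPHANUMERIC = (string.ascii_letters + string.digits).encode("ascii")


def urandom_password(length: int, allowed: bytes = ALPHANUMERIC) -> str:
    """Build a password by filtering random bytes down to an allowed set.

    Mirrors the filter-then-truncate pipeline: bytes outside `allowed`
    are discarded, and the result is cut to `length` characters.
    `allowed` is assumed to contain only ASCII bytes.
    """
    if length < 0:
        raise ValueError("length must not be negative")
    if not allowed:
        raise ValueError("allowed set must not be empty")
    keep = set(allowed)
    chars = bytearray()
    while len(chars) < length:
        chunk = os.urandom(64)  # arbitrary chunk size
        chars.extend(b for b in chunk if b in keep)
    return chars[:length].decode("ascii")


password = urandom_password(24)
print(password)
```

Python's standard library also has a secrets module intended for this sort of thing; the version above just stays close to what the shell pipeline is doing.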

PostgreSQL Replication Lag — Distance between current and last confirmed flushed

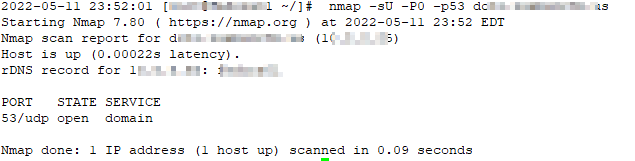

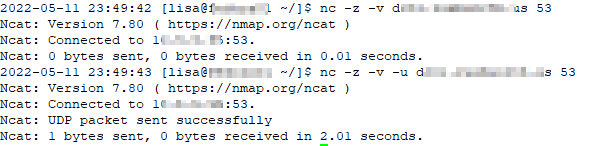

UDP Port Check

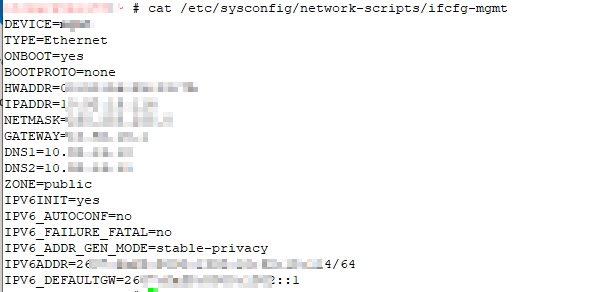

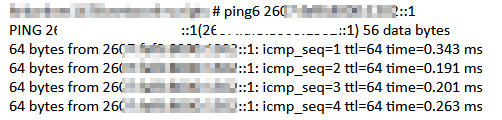

IPv6

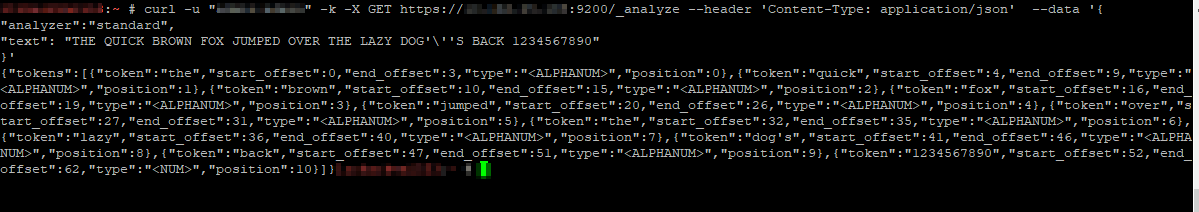

ElasticSearch Analyzer

Analyzer Components

Character filters are the first component of an analyzer. They can remove unwanted characters – this could be HTML tags ("char_filter": ["html_strip"]) or some custom replacement – or change character(s) into other character(s). Output from the character filter is passed to the tokenizer.

The tokenizer breaks the string out into individual components (tokens). A commonly used tokenizer is the whitespace tokenizer which uses whitespace characters as the token delimiter. For CSV data, you could build a custom pattern tokenizer with “,” as the delimiter.

Then token filters add, change, or remove tokens, dropping anything deemed unnecessary. The standard analyzer includes a lower-case token filter too – so NOW, Now, and now all produce the same token.

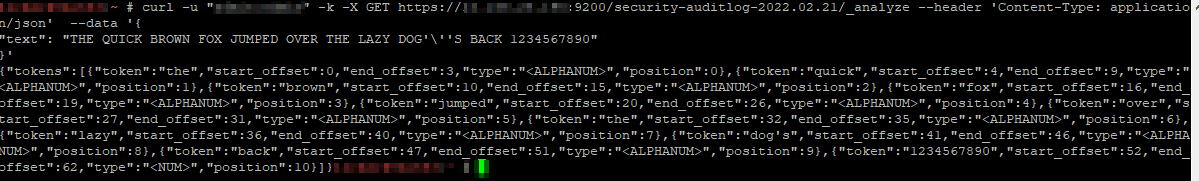
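To make the three stages concrete, here is a toy pipeline in plain Python. It is only an illustration of the order things happen in, not how ElasticSearch implements any of it: the regex tag stripper, the whitespace split, and the lower-casing step are simplified stand-ins I wrote for the real components.

```python
import re
from typing import List


def char_filter(text: str) -> str:
    """Character filter stand-in: replace anything shaped like an HTML tag with a space."""
    return re.sub(r"<[^>]+>", " ", text)


def tokenizer(text: str) -> List[str]:
    """Tokenizer stand-in: split on runs of whitespace."""
    return text.split()


def token_filter(tokens: List[str]) -> List[str]:
    """Token filter stand-in: lower-case every token."""
    return [token.lower() for token in tokens]


def analyze(text: str) -> List[str]:
    """Run the stages in order: character filter, then tokenizer, then token filter."""
    return token_filter(tokenizer(char_filter(text)))


tokens = analyze("<p>NOW is the time</p> <b>Now</b> now")
print(tokens)  # ['now', 'is', 'the', 'time', 'now', 'now']
```

NOW, Now, and now all come out as the same token, which is the point of the lower-case step.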

Testing an analyzer

You can one-off analyze a string using any of the available analyzers:

curl -u "admin:admin" -k -X GET https://localhost:9200/_analyze --header 'Content-Type: application/json' --data '

{

"analyzer":"standard",

"text": "THE QUICK BROWN FOX JUMPED OVER THE LAZY DOG'\''S BACK 1234567890"

}'

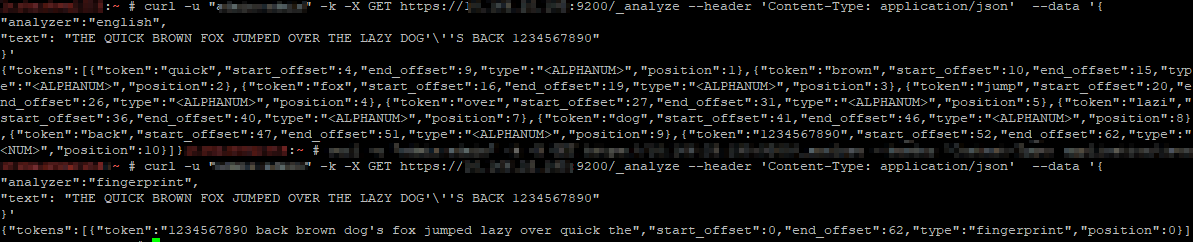

Specifying different analyzers produces different tokens

It’s even possible to define a custom analyzer in an index – you’ll see this in the index configuration. Adding character mappings to a custom character filter might be a useful tool in our implementation; the example used in Elastic’s documentation maps Hindu-Arabic numerals to their Arabic-Latin equivalents. Another of the examples turns ASCII emoticons into emotional descriptors (_happy_, _sad_, _crying_, _raspberry_, etc.), which would be useful in analyzing customer communications. In log processing, we might want to map phrases to commonly used abbreviations. This isn’t a real-world example, but if programmatic input spelled out “self-contained breathing apparatus”, I expect most people would still search for SCBA if they wanted to see how frequently SCBA tanks were used for call-outs. It will be interesting to see how often programmatic input fails to line up with user expectations, and so whether character mappings will be beneficial.
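Here is a minimal sketch of what such index settings could look like, written as a Python dict that serializes to the JSON body. The names emoticon_mappings and customer_text are hypothetical, and only two emoticon mappings are included to keep it short.

```python
import json

# Hypothetical names: "emoticon_mappings" and "customer_text" are made up
# for this sketch; the mapping list is a deliberately tiny sample.
index_settings = {
    "settings": {
        "analysis": {
            "char_filter": {
                "emoticon_mappings": {
                    "type": "mapping",
                    "mappings": [
                        ":) => _happy_",
                        ":( => _sad_",
                    ],
                }
            },
            "analyzer": {
                "customer_text": {
                    "type": "custom",
                    "char_filter": ["emoticon_mappings"],
                    "tokenizer": "standard",
                    "filter": ["lowercase"],
                }
            },
        }
    }
}

# JSON body that would be supplied when the index is created
print(json.dumps(index_settings, indent=2))
```

The character filter runs before the tokenizer, so by the time the text is split into tokens the emoticons have already been swapped for their descriptors.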

In addition to testing individual analyzers, you can test the analyzer associated with an index – instead of using the /_analyze endpoint, use the /indexname/_analyze endpoint.
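The difference between the two endpoints is just the path, which this small sketch tries to show. It only builds the request object and does not send anything; authentication and certificate handling are left out, and the index name mylogs is hypothetical. I used POST here since the analyze endpoint accepts both GET and POST.

```python
import json
import urllib.request
from typing import Optional


def build_analyze_request(
    base_url: str,
    text: str,
    analyzer: Optional[str] = None,
    index: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) an _analyze request.

    With `index` set, the request targets /<index>/_analyze; without it,
    the cluster-wide /_analyze endpoint. If `analyzer` is omitted on an
    index request, the index's default analyzer applies.
    """
    path = f"/{index}/_analyze" if index else "/_analyze"
    body = {"text": text}
    if analyzer:
        body["analyzer"] = analyzer
    return urllib.request.Request(
        url=base_url.rstrip("/") + path,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Named analyzer, no index
cluster_req = build_analyze_request(
    "https://localhost:9200", "The Quick Brown Fox", analyzer="standard"
)

# Analyzer configured on a (hypothetical) index called "mylogs"
index_req = build_analyze_request(
    "https://localhost:9200", "The Quick Brown Fox", index="mylogs"
)

print(cluster_req.full_url)
print(index_req.full_url)
```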

Resetting Lost/Forgotten ElasticSearch Admin Passwords

There are a few ways to reset the password on an individual account … but they require you to have a known password. But what about when you don’t have any good passwords? (You might be able to read your kibana.yml and get a known good password, so that would be a good place to check.) Provided you have OS access, just create another superuser account using the elasticsearch-users binary:

/usr/share/elasticsearch/bin/elasticsearch-users useradd ljradmin -p S0m3pA5sw0Rd -r superuser

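You can then use curl against the ElasticSearch API to reset the elastic account password. (On ElasticSearch 7 and later the endpoint is /_security/user/elastic/_password; the /_xpack/security path shown here is the older form and was removed in 8.0.)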

curl -s --user ljradmin:S0m3pA5sw0Rd -XPUT "http://127.0.0.1:9200/_xpack/security/user/elastic/_password" -H 'Content-Type: application/json' -d'

{

"password" : "N3wPa5sw0Rd4ElasticU53r"

}

'