Crocus flowers are starting to sprout up around the yard — years ago, Anya and I planted crocus bulbs randomly throughout the front yard. Some have split into three or four plants already, and some are still single little flowers. We’ve even got some sprouting up elsewhere — maybe they’re spreading seeds too?

Author: Lisa

Reverse Osmosis Maple Sap Stats

We collected nine gallons of sap with SG of 1.009 = 2.3 Brix

We ran all of the sap through the reverse osmosis system at 60psi and had sap with SG 1.011 = 2.8 Brix

We ran the concentrated sap through the reverse osmosis system a second time, this time at 80psi and had sap with SG 1.022 = 5.6 Brix.

The “pure water” output SG was about 1.003 — we re-ran this through the RO as well.

At the end of the day, we have about 4 gallons of sap at 5.6% sugar, another gallon from the “pure water” run at a lower SG, and four gallons of water that was removed.

Notes for the future:

- We want to see what a single pass at higher pressure does. Was it the multiple passes that further concentrated the sap, or the higher pressure?

- We took SG readings and converted to brix using an online converter. Next time, we should just take the readings in Brix 🙂

- We might need a different refractometer to get accurate readings near 1 … not sure how accurate our tool is at the low end of the range.
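The SG-to-Brix conversion can be sketched in a few lines of Python. This assumes the common approximation Brix ≈ 261.3 × (1 − 1/SG), which may not be the formula the online converter used, although it rounds to the same values as the readings above. The function name is made up for illustration.

```python
def sg_to_brix(sg):
    """Approximate degrees Brix from specific gravity (assumed formula)."""
    return 261.3 * (1 - 1 / sg)

# SG readings recorded during the run
readings = [
    ("raw sap", 1.009),
    ("first pass, 60psi", 1.011),
    ("second pass, 80psi", 1.022),
]

for label, sg in readings:
    print(f"{label}: SG {sg} = {sg_to_brix(sg):.1f} Brix")
```

The approximation is only meant for dilute solutions like sap, and it is no more accurate than the SG reading that goes into it.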

Maple Syrup Reverse Osmosis Build

Apache HTTPD Log File Analysis — Hits by IP Address

When we are decommissioning a website (or web server), I always watch the log files to ensure there aren’t a lot of people still accessing it. Sometimes there are, and it’s worth tracking them down individually to clue them in to the site’s imminent demise. Usually there aren’t, and it’s just a confirmation that our decommissioning efforts won’t be impactful.

This Python script looks for IP addresses in the log files and outputs each IP and its access count per log file. It will overcount if IP addresses also show up in the recorded URI strings, but it’s good enough for 99% of our log data.

import os
import re
from collections import Counter

def parseApacheHTTPDLog(strLogFile):
    # Match anything shaped like a dotted-quad IPv4 address
    regexIPAddress = r'\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}'
    with open(strLogFile) as f:
        objLog = f.read()
    listIPAddresses = re.findall(regexIPAddress, objLog)
    counterAccessByIP = Counter(listIPAddresses)
    # One tab-delimited line per IP: log file, IP address, hit count
    for strIP, iAccessCount in counterAccessByIP.items():
        print(f"{strLogFile}\t{strIP}\t{iAccessCount}")

if __name__ == '__main__':
    strLogDirectory = '/var/log/httpd/'
    for strFileName in os.listdir(strLogDirectory):
        # To limit this to a single site, use this test instead:
        # if "hostname.example.com" in strFileName and "access_log" in strFileName:
        if "access_log" in strFileName:
            parseApacheHTTPDLog(f"{strLogDirectory}{strFileName}")
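If IP addresses inside the URI strings ever become a problem, one option is to read only the first field of each line. The sketch below assumes the client address is the first thing on every line, as it is in the common and combined log formats; a custom LogFormat would need a different pattern. The function name and the sample lines are made up for illustration.

```python
import re
from collections import Counter

# Assumption: each log line starts with the client IPv4 address
LEADING_IP = re.compile(r'^(\d{1,3}(?:\.\d{1,3}){3})\s')

def count_client_ips(lines):
    """Count hits per client IP, looking only at the first field of each line."""
    counts = Counter()
    for line in lines:
        match = LEADING_IP.match(line)
        if match:
            counts[match.group(1)] += 1
    return counts

# Made-up sample lines; the second request carries an IP in its query string
sample_lines = [
    '10.1.2.3 - - [01/Mar/2022:10:00:00 -0500] "GET /index.html HTTP/1.1" 200 512',
    '10.1.2.3 - - [01/Mar/2022:10:00:05 -0500] "GET /lookup?ip=192.168.0.9 HTTP/1.1" 200 87',
    '10.4.5.6 - - [01/Mar/2022:10:01:00 -0500] "GET / HTTP/1.1" 200 1024',
]

for ip, hits in count_client_ips(sample_lines).items():
    print(f"{ip}\t{hits}")
```

Because only the start of each line is matched, the 192.168.0.9 in the query string is not counted as a client. Passing an open file handle instead of the sample list also works, since the function just iterates over lines.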

Gerbera – Searching for Playlists

Proto-Ducks

Incubating Eggs

We’re about to start incubating eight duck eggs, so I wanted to record the temperature and humidity settings that I’ve found for the chicken, duck, and turkey eggs (well, future turkey eggs! We managed to get five male turkeys last year).

DUCKS

| Start day | End day | Temp (°F) | Humidity |
| --- | --- | --- | --- |
| 1 | 25 | 99.5 | 55-58% |
| 26 | 28 | 98.5 | 65% |
| 28 | Hatching | 97 | 70-80% |

CHICKENS

| Start day | End day | Temp (°F) | Humidity |
| --- | --- | --- | --- |
| 1 | 18 | 99.5-100.5 | 45-55% |
| 19 | Hatching | 99.5 | 65-70% |

TURKEYS

| Start day | End day | Temp (°F) | Humidity |
| --- | --- | --- | --- |
| 1 | 24 | 99-100 | 50-60% |
| 25 | Hatching | 99 | 65-70% |

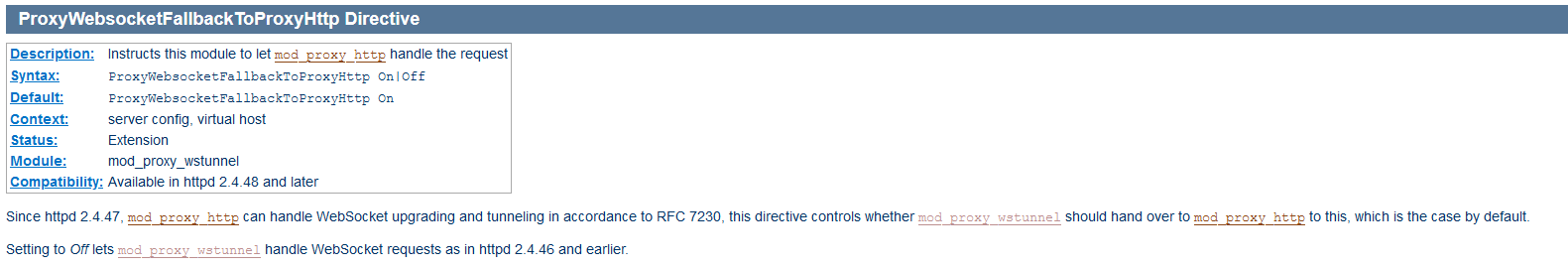

Reverse Proxying WebSockets through mod_proxy — HTTP Fallback

I’ve been successfully reverse proxying MQTT over WebSockets via Apache HTTPD for quite some time now. For the last few weeks, though, my phone hasn’t been able to connect. There’s no good rationale presented (and manually clicking the “send data” button on my client successfully connects). It was time to upgrade the server anyway. Once I got the latest Linux distro on the server, I couldn’t connect to my MQTT server at all. The error log showed AH00898: Error reading from remote server returned by /mqtt

Evidently, httpd 2.4.47 added functionality to upgrade and tunnel in accordance with RFC 7230, and that doesn’t work at all in my scenario. I haven’t dug into the why of it yet, but adding ProxyWebsocketFallbackToProxyHttp Off to the reverse proxy config allowed me to successfully communicate with the MQTT server through the reverse proxy.

(Not) Finding the Rygel Log File

We’ve spent a lot of time trying to get a log file from the rygel server … setting the log level in the config file didn’t seem to do anything useful, and I cannot even find a log file. The only output we’re able to find comes from running the binary from the command line. Where is that log file?!? Hey, there isn’t one. All of this log level setting only controls what’s written to STDOUT and STDERR. You can either modify the unit file to tee the output off to a file or run it from the command line.

To get debugging output, use:

G_MESSAGES_DEBUG=all rygel -g 5

To tee the output off to a file:

rygel -g *:5 2>&1 | tee -a /path/to/rygel.log

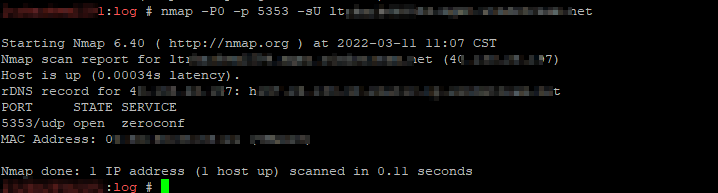

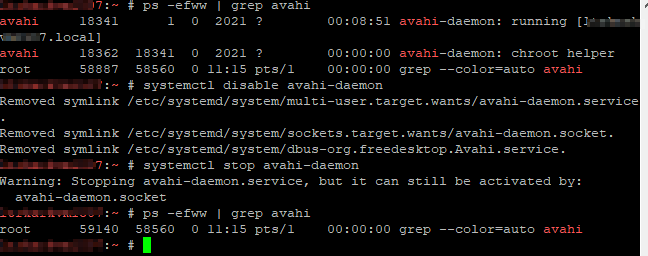

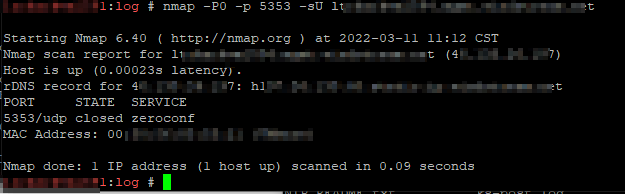

Brinqa Remediation – mDNS

Some systems were found to be responding to mDNS requests (5353/udp). Linux hosts were running the avahi-daemon which provides this service. As the auto-discovery service is not used for service identification, the avahi-daemon was disabled.

Confirm response is seen on 5353/udp prior to change:

nmap -P0 -p 5353 -sU hostname.example.net

SSH to host identified as responding to mDNS requests. Disable the avahi-daemon then stop the avahi-daemon:

systemctl disable avahi-daemon

systemctl stop avahi-daemon

Verify that 5353/udp is no longer open by repeating the nmap command.

Fin.

Summary: Playlist items are not returned from searches initiated on my UPnP client. The playlist is visible when browsing the Gerbera web UI under Playlists->All Playlists->Playlist Name and Playlists->Directories->Playlists->Playlist Name

Action: In a UPnP client, search using the criteria

upnp:class = "object.container.playlistContainer" and dc:title = "Playlist Name"

Expected Results: Playlist matching search criteria is returned

Actual Results: No results are returned

Investigation:

From the Gerbera debug log, the search being executed is:

SELECT DISTINCT "c"."id", "c"."ref_id","c"."parent_id", "c"."object_type", "c"."upnp_class", "c"."dc_title",

"c"."mime_type" , "c"."flags", "c"."part_number", "c"."track_number",

"c"."location", "c"."last_modified", "c"."last_updated"

FROM "mt_cds_object" "c"

INNER JOIN "mt_metadata" "m" ON "c"."id" = "m"."item_id"

INNER JOIN "grb_cds_resource" "re" ON "c"."id" = "re"."item_id"

WHERE (LOWER("c"."upnp_class")=LOWER('object.container.playlistContainer'))

AND (LOWER("c"."dc_title")=LOWER('Playlist Name'))

ORDER BY "c"."dc_title" ASC;

The playlists do not have a row in the grb_cds_resource table, so the “INNER JOIN” means the query returns no records.

I am able to work around this issue by manually inserting playlist items into the grb_cds_resource table:

INSERT INTO grb_cds_resource (item_id, res_id, handlerType) VALUES (1235555,0,0);

If I have some time, I want to test changing join2 to be a left outer join and see if that breaks anything.
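The effect of the join type can be reproduced outside Gerbera with an in-memory SQLite database. This is only a sketch: the two tables are cut down to the columns the search touches, the mt_metadata join is left out, and it says nothing about whether changing the join inside Gerbera would break anything else.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Simplified stand-ins for the Gerbera tables (assumed, not the real schema)
    CREATE TABLE mt_cds_object (id INTEGER PRIMARY KEY, upnp_class TEXT, dc_title TEXT);
    CREATE TABLE grb_cds_resource (item_id INTEGER, res_id INTEGER, handlerType INTEGER);
    INSERT INTO mt_cds_object
        VALUES (1235555, 'object.container.playlistContainer', 'Playlist Name');
""")

SEARCH = """
    SELECT DISTINCT c.id, c.dc_title
    FROM mt_cds_object c
    {join} grb_cds_resource re ON c.id = re.item_id
    WHERE LOWER(c.upnp_class) = LOWER('object.container.playlistContainer')
      AND LOWER(c.dc_title) = LOWER('Playlist Name')
"""

def run_search(join):
    """Run the playlist search with the given join type."""
    return conn.execute(SEARCH.format(join=join)).fetchall()

# The playlist has no resource row yet, so only the outer join finds it
inner_before = run_search("INNER JOIN")
left_before = run_search("LEFT OUTER JOIN")

# Apply the workaround: add a resource row for the playlist
conn.execute(
    "INSERT INTO grb_cds_resource (item_id, res_id, handlerType) VALUES (1235555, 0, 0)"
)
inner_after = run_search("INNER JOIN")

print("inner join, no resource row:", inner_before)
print("left outer join, no resource row:", left_before)
print("inner join, after workaround:", inner_after)
```

With no resource row, the inner join returns nothing while the left outer join returns the playlist. Once the row is inserted, the inner join finds it too, which matches the workaround above.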