As our staff has grown, we’ve gained a few new programmers. It used to be easy enough for us to avoid stepping on each other’s toes, but we have since experienced several production problems that could be addressed by rethinking our repository configuration.

Current state: We have a monolithic repository for different batch servers. Each server has a clone of the repository, and the development equivalent has a clone of the same repository. The repository has top-level folders for each independent script. There is a SharedTools top-level folder for reusable functions.

Changes are made on clones located both on the development server and on individuals’ computers, tested on the development server, then pushed to the repo. Under a CRQ, a pull is performed from the production server to elevate the new code. Glomming dozens of scripts into a single repository was simple and quick, but with new people involved in development efforts, we have experienced challenges with changes being lost, unintentional elevation of code, and UAT being run against under-development code.

Pitfalls: Four people working on four different scripts are working in the same repository. We have had individuals developing on their laptops overwrite changes (force push is dangerous, even if force-with-lease is used), we have had individuals developing on the dev server commit other people’s edits (git add * isn’t a good idea in a shared environment – add only the files you changed to your commit), and we’ve had duplication of effort (which is certainly a problem outside of development efforts, and one that can be addressed outside of git).
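As a minimal sketch of the staging habit described above, the following runs in a throwaway repository. The folder contents, file names, and user identity are hypothetical stand-ins, not our actual scripts.

```shell
#!/bin/sh
set -e
# Throwaway repository standing in for a shared working directory (hypothetical).
demo=$(mktemp -d)
cd "$demo"
git -c init.defaultBranch=master init -q
git config user.name "Example User"
git config user.email "user@example.com"

mkdir sendMassMail monitorLDAPReplication
echo "my change" > sendMassMail/send.sh                        # the file I meant to change
echo "someone else's edit" > monitorLDAPReplication/check.sh   # a colleague's in-progress work

# Review what has changed before staging anything.
git status --short

# Stage only the file that belongs to this change instead of using "git add *".
git add sendMassMail/send.sh
git commit -q -m "sendMassMail: my change"

# Files now tracked by the repository, and files still left alone.
tracked=$(git ls-files)
untouched=$(git status --short)
echo "tracked:   $tracked"
echo "untouched: $untouched"
```

The colleague's file stays untracked and uncommitted, so it is theirs to commit when they are ready.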

We could address the issues we’ve seen through training and communication – ensure anyone contributing code to the repository adequately understands what force push means, appreciates what wildcards include, and generally has a more nuanced understanding of git than the one-hour training I provided last year. But I think we should consider the LOE and advantages of using a technical solution to ensure less experienced git users are able to successfully use our repositories.

Proposal – Functional Splits:

While we have a few more individuals with development experience, they are quite specifically Windows script developers (PowerShell, VBScript, etc.). We could just stop using the Windows batch server and let the two or three Microsoft guys figure it out for themselves. This limits individual growth – I “don’t do” PowerShell development, the Windows guys don’t learn Linux. And, as the group changes over time, we would still not have addressed the underlying problem of multiple people working on the same codebase.

Proposal – Git Changes:

We can begin using branches for development efforts and reserve “master” for ready-for-deployment code. Doing so, we eliminate the possibility of inadvertently elevating code before it is ready – only commands targeted to “origin master” will be run on production servers.

Using descriptive branch names (Initials-ScriptFolderName-SummaryOfChange) will help eliminate duplicated efforts. If I notice we need to send a few mass mails with inline images, seeing “TJR-sendMassMail-AddInlineImages” in the branch list lets me know you’ve got it covered. And “TJR-sendMassMail-RecipientListFromLiveLDAPQuery” lets me know you’re working on something else and I’m setting myself up for merge challenges by working on my change right now. If both of our changes are high priority, we might choose to work through a merge operation. This would be an informed, upfront decision instead of a surprise message indicating that fast-forward merging is not possible.
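A rough sketch of how the branch list could be checked before starting work. The branches are created locally in a throwaway repository purely for illustration; the user identity is a placeholder.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
git -c init.defaultBranch=master init -q
git config user.name "Example User"
git config user.email "user@example.com"
git commit -q --allow-empty -m "Initial commit"

# Branches following the Initials-ScriptFolderName-SummaryOfChange convention.
git branch TJR-sendMassMail-AddInlineImages
git branch TJR-sendMassMail-RecipientListFromLiveLDAPQuery
git branch LJR-monitorLDAPReplication-AddOUD11Servers

# Before touching sendMassMail, list any branches already open against it.
in_progress=$(git branch --list '*-sendMassMail-*' --format='%(refname:short)')
echo "$in_progress"
```

Against a shared server, the same check would follow a `git fetch` and use `git branch -r --list 'origin/*-sendMassMail-*'` to look at the remote branches instead.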

In large development projects, branch management can become a full-time pursuit. I do not think that will be an issue in our case. Minimizing the number of branches used, and not creating branches based on branches, makes branch management a simpler task. We should be able to merge code into master without conflicts because our branches modify different files in the repository. A fast-forward merge additionally requires that the branch already contains everything on master, which is why keeping branches in sync with master matters.

To begin a development effort, create a branch and push it to the git server. Make your changes within that branch, and ensure you keep your branch in sync with master – a branch that is “behind” master cannot be fast-forwarded into master, so rebase it onto master before merging rather than forcing the operation. Once you are finished with your development, merge your branch into master and delete your branch. This approach will require additional training to ensure everyone understands how to create, rebase, merge, and delete branches (and not to just force operations because it lets you complete your task).
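The branch lifecycle described above might look roughly like this. It is a sketch under assumptions: a local bare repository stands in for the git server, and the file contents and user identity are made up. In this tiny example the rebase is a no-op because nobody else has changed master.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
# A local bare repository stands in for the git server (hypothetical).
git -c init.defaultBranch=master init -q --bare origin.git
git -c init.defaultBranch=master init -q work
cd work
git config user.name "Example User"
git config user.email "user@example.com"
git remote add origin "$demo/origin.git"
mkdir sendMassMail
echo "v1" > sendMassMail/send.sh
git add sendMassMail/send.sh
git commit -q -m "Initial commit"
git push -q -u origin master

# 1. Create the development branch and publish it.
git checkout -q -b TJR-sendMassMail-AddInlineImages
git push -q origin TJR-sendMassMail-AddInlineImages

# 2. Commit work on the branch and publish it.
echo "v2 with inline images" > sendMassMail/send.sh
git commit -q -am "sendMassMail: add inline images"
git push -q origin TJR-sendMassMail-AddInlineImages

# 3. Bring the branch up to date with master before merging.
git fetch -q origin
git rebase -q origin/master

# 4. Merge into master; --ff-only refuses anything that is not a fast-forward.
git checkout -q master
git merge -q --ff-only TJR-sendMassMail-AddInlineImages
git push -q origin master

# 5. Delete the branch locally and on the server.
git branch -q -d TJR-sendMassMail-AddInlineImages
git push -q origin --delete TJR-sendMassMail-AddInlineImages
```

Using `--ff-only` makes the merge fail loudly when the branch is behind master, instead of tempting anyone to force the operation.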

Instead of using ‘master’ for production code, the inverse is equally viable: create a “stable” branch that is for production code and only pull that branch to PROD servers. I believe this approach is used to prevent accidental changes to prod code – you’ve got to intentionally target “origin stable” with an operation to impact production code.
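A small sketch of the “stable” variant, assuming a throwaway repository named `server` in place of our git server and a single made-up script file. The PROD clone only ever fetches the stable branch, so unfinished work on master never reaches it.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
# "server" stands in for the git server (hypothetical).
git -c init.defaultBranch=master init -q server
cd server
git config user.name "Example User"
git config user.email "user@example.com"
echo "released" > script.sh
git add script.sh
git commit -q -m "Released code"
git branch stable                      # production-ready code lives here
echo "in progress" > script.sh
git commit -q -am "Unfinished work on master"
cd "$demo"

# PROD clones only the stable branch and pulls only from it.
git clone -q --single-branch --branch stable "$demo/server" prod
cd prod
git pull -q --ff-only origin stable
prod_content=$(cat script.sh)
echo "$prod_content"
```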

Our single repository configuration is a detriment to using branches if development is performed on the DEV server, because everyone there works in the same directory and a working directory has only one checked-out branch at a time. To illustrate the issue, create a BranchTesting repo and add a single file to master. In one command window, create a Branch1 branch and check it out. In a second command window opened in the same directory, create Branch2 and check it out. In your first command window, add a file and commit it. In your second command window, add a file and commit it. You will find that both files have been committed to Branch2.
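The experiment above can be reproduced in a single script, since two command windows in the same directory are just two sources of commands against one working tree. The file names and user identity here are placeholders.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
git -c init.defaultBranch=master init -q BranchTesting
cd BranchTesting
git config user.name "Example User"
git config user.email "user@example.com"
echo "base" > base.txt
git add base.txt
git commit -q -m "Initial file on master"

# "Window 1": create Branch1 and check it out.
git checkout -q -b Branch1
# "Window 2": same directory, so this moves the one shared HEAD to Branch2.
git checkout -q -b Branch2

# "Window 1" believes it is still on Branch1 and commits a file.
echo "one" > file1.txt
git add file1.txt
git commit -q -m "Work intended for Branch1"

# "Window 2" commits its own file.
echo "two" > file2.txt
git add file2.txt
git commit -q -m "Work intended for Branch2"

# Both commits landed on Branch2; Branch1 still points at the initial commit.
branch1_files=$(git ls-tree --name-only Branch1)
branch2_files=$(git ls-tree --name-only Branch2)
echo "Branch1: $branch1_files"
echo "Branch2: $branch2_files"
```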

How can we address this issue?

Develop on our individual workstations instead of the DEV server. Not sharing a file set for our development efforts eliminates the branch context switching problem. If you clone the repo to your laptop, Gary clones the repo to his laptop, and I clone the repo to my laptop … you can create TJR-sendMassMail-AddInlineImages on your computer, write and test the changes locally, commit and push the changes, pull them to the DEV server for more robust testing, and then merge your changes into master when you are ready to elevate the code. I can simultaneously create LJR-monitorLDAPReplication-AddOUD11Servers, do my thing, commit and push changes, pull them to the DEV server (first using “git branch” to determine if someone else is already testing their branch on the DEV server), and merge my stuff into master when I’m ready to elevate. Other than remembering to verify that DEV has master checked out (i.e. no one else is testing, so the resource is free), we do not have resource contention.
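The “is DEV free?” check could be scripted along these lines. This is only a sketch: the `server` and `dev` directories are throwaway stand-ins for the git server and the DEV server's clone, and the convention that “master checked out means free” is the one proposed above.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
# "server" stands in for the git server; "dev" for the clone on the DEV server.
git -c init.defaultBranch=master init -q server
cd server
git config user.name "Example User"
git config user.email "user@example.com"
echo "v1" > monitor.sh
git add monitor.sh
git commit -q -m "Initial commit"
git checkout -q -b LJR-monitorLDAPReplication-AddOUD11Servers
echo "v2" > monitor.sh
git commit -q -am "Add OUD11 servers"
git checkout -q master
cd "$demo"
git clone -q "$demo/server" dev
cd dev

# Only take over DEV if master is checked out, i.e. nobody else is testing.
branch_to_test=LJR-monitorLDAPReplication-AddOUD11Servers
current=$(git rev-parse --abbrev-ref HEAD)
if [ "$current" = "master" ]; then
    git fetch -q origin
    git checkout -q "$branch_to_test"
else
    echo "DEV is busy: $current is checked out" >&2
fi
```

When testing is finished, checking out master again signals that DEV is available.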

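While it may not be desirable to fill up our laptop drives with the entire code set from six different application servers, sparse-checkout allows you to select the specific folders that are checked out into your working directory. Note that sparse-checkout on its own limits only the files written to the working directory; the clone still contains the full repository history.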
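A minimal sparse-checkout sketch, assuming a reasonably recent git (the `sparse-checkout` command and `clone --sparse` arrived in git 2.25). The `monolith` repository and its file contents are invented for the example; the folder names are borrowed from this write-up.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"
# "monolith" stands in for one of our existing repositories (hypothetical content).
git -c init.defaultBranch=master init -q monolith
cd monolith
git config user.name "Example User"
git config user.email "user@example.com"
mkdir sendMassMail monitorLDAPReplication SharedTools
echo "mail" > sendMassMail/send.sh
echo "ldap" > monitorLDAPReplication/check.sh
echo "tools" > SharedTools/common.sh
git add .
git commit -q -m "Initial import"
cd "$demo"

# Clone with a sparse working tree, then choose the folders to populate.
git clone -q --sparse "$demo/monolith" laptop
cd laptop
git sparse-checkout set sendMassMail SharedTools
ls
```

If download size is also a concern, `git clone --filter=blob:none` requests a partial clone, provided the server supports it.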

The advantage of this approach is that it has no initial LOE beyond training and process change. The repositories are left as-is, and we start using them differently.

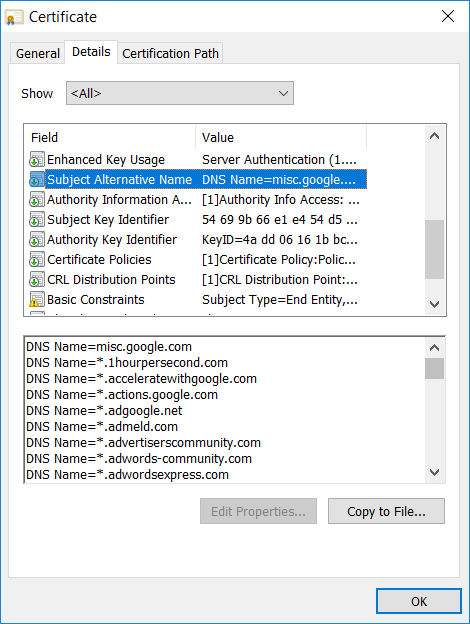

Unfortunately, this approach may not be viable in some instances – when access to data sources is restricted by IP ACL, you may not be able to do more than linting on your laptop. It may not even be possible to configure a Windows laptop to run some of our code – some Linux requirements are difficult to address in Windows (the PKI website’s cert info check, for instance), and testing code on Windows may not ensure successful operation on the Linux hosts.

Break the monolithic repositories into discrete repositories and use submodules. Submodules allow the multiple independent repositories to be “rolled up” into a top-level repository. Development is done in the submodule repositories. I can clone monitorLDAPReplication, you can clone sendMassMail, etc. Changes can be made within our branches of these completely different repositories and merged into the individual repository’s master branch for release to the production environment. Release can be done for the superset (“--recurse-submodules”) or for individual submodules.
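A sketch of the submodule layout, using throwaway local repositories. The `make_repo` helper and the `batchServer` top-level name are hypothetical. The `-c protocol.file.allow=always` setting is needed only because this demo uses local file paths, which recent git versions block for submodules by default; it would not be needed against a real git server.

```shell
#!/bin/sh
set -e
demo=$(mktemp -d)
cd "$demo"

# Hypothetical helper: create a one-file repository standing in for a split-out script repo.
make_repo() {
    git -c init.defaultBranch=master init -q "$1"
    git -C "$1" config user.name "Example User"
    git -C "$1" config user.email "user@example.com"
    echo "# $1" > "$1/README"
    git -C "$1" add README
    git -C "$1" commit -q -m "Initial commit for $1"
}
make_repo sendMassMail
make_repo monitorLDAPReplication
make_repo batchServer        # top-level "roll-up" repository

# Register each script repository as a submodule of the top-level repository.
cd batchServer
git -c protocol.file.allow=always submodule --quiet add "$demo/sendMassMail" sendMassMail
git -c protocol.file.allow=always submodule --quiet add "$demo/monitorLDAPReplication" monitorLDAPReplication
git commit -q -m "Register script repositories as submodules"
cd "$demo"

# Release the superset: clone the top-level repository with all of its submodules.
git -c protocol.file.allow=always clone -q --recurse-submodules "$demo/batchServer" prod
ls prod
```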

This would require splitting a repository into its individual components and configuring the sub-module relationships. This can be a scripted operation, and it is an incremental change to the script I used to create the repositories and ingest code; but the LOE for implementation is a few days of script writing / testing. Training will be required to ensure individuals can register their submodules within the top-level repo, and we will need to accustom ourselves to maintaining individual repos.

Or just break monolithic repositories into discrete repositories. The level of effort is about the same initially, but no one needs to learn how to set up a new submodule. We lose single-repo conveniences, but there’s literally no association between our different script folders where someone working in X could inadvertently impact Y.
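If we want each new repository to keep the history of its folder, one option is sketched below. It is not the ingest script mentioned earlier. It uses `git filter-branch --subdirectory-filter`, which ships with git; git's own documentation recommends the separate `git filter-repo` tool for this kind of rewrite, so treat this as an illustration rather than a recommendation. The repository contents and names are made up.

```shell
#!/bin/sh
set -e
export FILTER_BRANCH_SQUELCH_WARNING=1   # skip filter-branch's deprecation notice and pause
demo=$(mktemp -d)
cd "$demo"
# "monolith" stands in for an existing repository (hypothetical content).
git -c init.defaultBranch=master init -q monolith
cd monolith
git config user.name "Example User"
git config user.email "user@example.com"
mkdir sendMassMail monitorLDAPReplication
echo "v1" > sendMassMail/send.sh
git add sendMassMail
git commit -q -m "Add sendMassMail"
echo "check" > monitorLDAPReplication/check.sh
git add monitorLDAPReplication
git commit -q -m "Add monitorLDAPReplication"
echo "v2" > sendMassMail/send.sh
git commit -q -am "Update sendMassMail"
cd "$demo"

# Work on a fresh clone so the original repository is left untouched.
split="$demo/sendMassMail-repo"
git clone -q "$demo/monolith" "$split"
cd "$split"

# Keep only the history of the sendMassMail folder, promoted to the repository root.
git filter-branch --subdirectory-filter sendMassMail > /dev/null 2>&1
git remote remove origin
git log --oneline
```

The resulting repository contains only the two commits that touched sendMassMail and can be pushed to a new, empty repository on the git server.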