Quick command to report on the IP addresses of all containers running on a Docker server:

docker ps | awk 'NR>1{ print $1 }' | xargs docker inspect -f '{{range .NetworkSettings.Networks}}{{$.Name}}{{" "}}{{.IPAddress}}{{end}}'
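
For scripting, the same report can be built from the JSON that `docker inspect` prints by default. This is only a minimal sketch: it assumes the docker CLI is on the PATH, and the function names are mine rather than part of any library.

```python
import json
import subprocess


def container_ips(inspect_json):
    """Map container name -> {network name: IP} from `docker inspect` JSON text."""
    report = {}
    for container in json.loads(inspect_json):
        networks = container.get("NetworkSettings", {}).get("Networks") or {}
        # Docker reports names with a leading slash, e.g. "/web"
        report[container["Name"].lstrip("/")] = {
            net: details.get("IPAddress", "") for net, details in networks.items()
        }
    return report


def running_container_ips():
    """Inspect every running container (assumes `docker` is installed and reachable)."""
    ids = subprocess.run(["docker", "ps", "-q"], capture_output=True,
                         text=True, check=True).stdout.split()
    if not ids:
        return {}
    raw = subprocess.run(["docker", "inspect", *ids], capture_output=True,
                         text=True, check=True).stdout
    return container_ips(raw)
```

Calling `running_container_ips()` on the Docker host returns a dictionary keyed by container name, which is easier to feed into other tooling than the space-separated text.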

Scott set up one of the ESP32s that we use as environmental sensors to monitor the incubator. There’s an audible alarm if it gets too warm or cold, but that’s only useful if you’re pretty close to the unit. We had gotten a cheap rectangular incubator from Amazon, and it’s got some quirks. The display says C, but if we’ve got eggs in a box that’s 100C? They’d be cooked. The number is in F, but the letter “C” follows it. There’s supposed to be a calibration menu option, but there isn’t. Worse, though, the temperature sensor is off by a few degrees. If calibration were an option, that wouldn’t be a big deal, but the only way we’re able to get the device over 97F is by taping the temperature probe up to the top of the unit.

So we’ve got a DHT11 sensor inside the incubator, and the ESP32 sends temperature and humidity readings over MQTT to OpenHAB. There are text and audio alerts if the temperature or humidity isn’t in the “good” window. This means you can be out in the yard or away from home and still know if there’s a problem. Since the data is stored in a database table, we can even chart the temperature or humidity across the entire incubation period.

We also bought a larger incubator for the chicken eggs — and there’s a new ESP32 and sensor in the larger incubator.

We hit our maximum connection limit on some PostgreSQL servers — which made me wonder why the hot standby servers weren’t being used … well, at all. They’re equally big, expensive servers with loads of disk space. But they’re just sitting there “in case”.

So we directed some traffic over to the standby server. I’m also going to tweak a few settings related to user limits: increase the max connections, since these are dedicated hosts with plenty of available I/O, memory, CPU, and other resources; increase the number of reserved connections, since replication filled up all of the reserved slots; and implement a per-user connection limit on one account that runs a lot of threads. But directing some people who were only trying to look at data over to the standby server seemed like a quick fix.

Now, we discovered something interesting about how queries against the standby interact with replication. It makes a lot of sense when you start thinking about it: if you query against the primary, there’s some blocking that goes on. The system isn’t going to vacuum data that you’re currently trying to use. The standby, however, doesn’t by default have any way to clue the primary in to the fact that you are trying to use some data. So the primary gets a delete, does its thing to hide those rows from future queries, and eventually auto-vacuum comes through and cleans up those rows. All of this gets pushed over to the standby … and there goes the data you were trying to read.

Odds of this happening on a query that takes eight seconds? Incredibly low! The odds increase, however, the longer a query runs. So some of our super massive reports started seeing an error indicating that their query was cancelled “due to a conflict with recovery”.

There are two solutions in the PostgreSQL documentation. One is to increase the max_standby_streaming_delay value (there’s also max_standby_archive_delay, but we aren’t particularly concerned about clients querying the server during recovery operations). The other is to avoid vacuuming data too quickly, either by setting hot_standby_feedback on the standby or by increasing vacuum_defer_cleanup_age on the primary (note that vacuum_defer_cleanup_age was removed in PostgreSQL 16).

There’s a third option too — don’t use the standby for long-running queries. That’s easily done in our case … and doesn’t require tweaking any PostgreSQL settings. Ad hoc reporting and direct user access really shouldn’t be implementing such substantial queries (it’s always good to have a SQL expert plan out and optimize complex queries if that’s an option).

PostgreSQL stores temporary files for in-flight queries. These don’t normally hang around for long, but sorting a large amount of data or building a large hash can create a lot of temp files. A dead query that was sorting a large amount of data or … well, we’ve gotten terabytes of temp files associated with multiple backend process IDs. The file names are algorithmic: the string “pgsql_tmp” followed by the backend PID, a period, and then some other number. Thus, I can extract the PID from each file name and provide a summary of the processes associated with temp files.

To view a summary of the temp files within the pgsql_tmp folder, run the following command to print a count then a PID number:

ls /path/to/pgdata/base/pgsql_tmp | sed -nr 's/pgsql_tmp([0-9]*)\.[0-9]*/\1/p' | sort | uniq -c

A slightly longer command reverses the columns, producing a list of process IDs followed by the count of files for each PID:

ls /path/to/pgdata/base/pgsql_tmp | sed -nr 's/pgsql_tmp([0-9]*)\.[0-9]*/\1/p' | sort | uniq -c | sort -k2nr | awk '{printf("%s\t%s\n",$2,$1)}'
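
The same summary can be produced in Python, which is handy if the counts need to feed another script. This is a sketch that assumes the file-name layout described above; the function name is illustrative.

```python
import re
from collections import Counter

# "pgsql_tmp", then the backend PID, a period, and another number
TEMP_FILE_PATTERN = re.compile(r"^pgsql_tmp(\d+)\.\d+$")


def count_temp_files_by_pid(file_names):
    """Return a Counter mapping backend PID -> number of temp files."""
    counts = Counter()
    for name in file_names:
        match = TEMP_FILE_PATTERN.match(name)
        if match:  # Ignore anything that is not a temp file
            counts[int(match.group(1))] += 1
    return counts
```

Pass it `os.listdir('/path/to/pgdata/base/pgsql_tmp')` and use `.most_common()` on the result to list the PIDs holding the most files first.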

We’ve been seeing an error that prevents clients from connecting to PostgreSQL servers: basically, all available connections are in use and the remaining connections are reserved for superuser and replication activity.

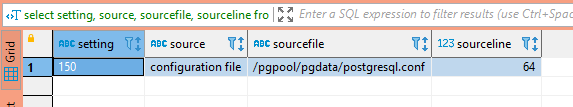

First, we need to determine what the connection limit is —

SELECT setting, source, sourcefile, sourceline FROM pg_settings WHERE name = 'max_connections';

And if there are any per-user connection limits – a limit of -1 means unlimited connections are allowed.

SELECT rolname, rolconnlimit FROM pg_roles;

The next step is to identify what connections are exhausting available connections – are there a lot of long-running queries? Are there just more active queries than anticipated? Are there a bunch of idle connections?

SELECT pid, usename, client_addr, client_port,
       to_char(pg_stat_activity.query_start, 'YYYY-MM-DD HH24:MI:SS') AS query_start,
       state, query
FROM pg_stat_activity
-- WHERE state = 'idle'
-- AND usename = 'app_user'
ORDER BY query_start;

In our case, there were over 100 idle connections using up about 77% of the available connections. Auto-vacuum, client read operations, and replication easily filled up the remaining available connections.
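
To put numbers like that 77% on the problem, the rows from pg_stat_activity can be tallied by state. This is a rough sketch: it assumes the rows have already been fetched as (usename, state) pairs by whatever database driver is in use, and the function name is made up for illustration.

```python
from collections import Counter


def summarize_connections(rows, max_connections):
    """Return {state: (count, percent of max_connections)}.

    rows: iterable of (usename, state) pairs from pg_stat_activity.
    A NULL state (None) is reported as "background".
    """
    by_state = Counter(state or "background" for _, state in rows)
    return {
        state: (count, round(100.0 * count / max_connections, 1))
        for state, count in by_state.items()
    }
```

Grouping on usename instead of state shows which account is holding the connections open.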

Because the clients keeping these idle connections open are an app running in a Kubernetes cluster, there’s an extra layer of complexity in identifying where the connection is actually sourced. When you view the list of connections from the PostgreSQL server’s perspective, “client_addr” is the worker hosting the pod.

On the worker server, use conntrack to identify the actual source of the connection. The IP address passed to “-d” is the IP address of the PostgreSQL server. To isolate a specific connection, select a “client_port” from the list of connections (37900 in this case) and grep for the port. You will see the src IP of the individual pod.

lhost1750:~ # conntrack -L -f ipv4 -d 10.24.29.140 -o extended | grep 37900
ipv4 2 tcp 6 86394 ESTABLISHED src=10.244.4.80 dst=10.24.29.140 sport=37900 dport=5432 src=10.24.29.140 dst=10.24.29.155 sport=5432 dport=37900 [ASSURED] mark=0 use=1
conntrack v1.4.4 (conntrack-tools): 27 flow entries have been shown.

Then use kubectl to identify which pod is assigned that address:

lhost1745:~ # kubectl get po --all-namespaces -o wide | grep "10.244.4.80"
kstreams kafka-stream-app-deployment-1336-d8f7d7456-2n24x 2/2 Running 0 10d 10.244.4.80 lhost0.example.net <none> <none>

In this case, we’ve got an automatically scaling application that can have 25 connections held open and idle, so there isn’t really a solution other than increasing the number of available connections to a number that’s appropriate given the number of client connections we plan on leaving open. I also want to enact a connection limit on the individual account: if there are 250 connections available on the PostgreSQL server, then limit the application to 200 of those connections.

A few times now, I’ve encountered individuals with cron jobs or bash scripts where a command execution ends in 2>/dev/null … and the individual is stymied by the fact it’s not working but there’s no clue as to why. The error output is being sent into a big black hole never to escape!

The trick here is to understand file descriptors — 1 is basically a shortcut name for STDOUT and 2 is basically a shortcut name for STDERR (0 is STDIN, although that’s not particularly relevant here). So 2>/dev/null says “take all of the STDERR stuff and redirect it to /dev/null”.

Sometimes you’ll see both STDERR and STDOUT being redirected, either to a file or to /dev/null. In that case you will see 2>&1, where the ampersand prior to the “1” indicates the stream is being redirected to a file descriptor (2>1 would direct STDERR to a file named “1”). So >/dev/null 2>&1 is the normal way you’d see it written. Order matters: redirections are processed left to right, so 2>&1 has to come after >/dev/null. Functionally, 2>/dev/null 1>&2 would be the same thing, but redirecting all output into error is, conceptually, a little odd.

To visualize all of this, use a command that will output something to both STDERR and STDOUT. For clarity, I’ve used “1>/dev/null” (redirect STDOUT to /dev/null) in conjunction with 2>&1 (redirect STDERR to STDOUT). As written in the text above, the number 1 is generally omitted and just >/dev/null is written.
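
One way to watch the redirections in action without a shell is Python’s subprocess module, whose PIPE, DEVNULL, and STDOUT constants map onto the same ideas. This is a sketch for experimenting, not anything from the original scripts; the child command is a throwaway one-liner that writes one line to each stream.

```python
import subprocess
import sys

# Child process that writes one line to each stream.
CHILD = [sys.executable, "-c",
         "import sys; print('to stdout'); print('to stderr', file=sys.stderr)"]


def run(stdout, stderr):
    """Run the child with the given redirections; return (stdout, stderr) text."""
    result = subprocess.run(CHILD, stdout=stdout, stderr=stderr, text=True)
    return result.stdout, result.stderr


# Each stream captured separately, so both lines are visible.
separate = run(subprocess.PIPE, subprocess.PIPE)
# Like 2>/dev/null: STDERR is discarded, STDOUT survives.
errors_dropped = run(subprocess.PIPE, subprocess.DEVNULL)
# Like 2>&1: STDERR is sent wherever STDOUT is going (here, the captured pipe).
merged = run(subprocess.PIPE, subprocess.STDOUT)
# Like >/dev/null 2>&1: STDOUT goes to /dev/null and STDERR follows it.
all_dropped = run(subprocess.DEVNULL, subprocess.STDOUT)
```

Printing the four results shows the error line vanishing in `errors_dropped` and `all_dropped`, which is exactly the “big black hole” a trailing 2>/dev/null creates.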

We have a script that’s used to securely retrieve passwords … a script which failed when run from a Docker container.

* could not load PEM client certificate, OpenSSL error error:140AB18E:SSL routines:SSL_CTX_use_certificate:ca md too weak, (no key found, wrong pass phrase, or wrong file format?)

The root of the issue appears to be tied to the Debian OS used in the python:3.7-slim container. Newer releases of some Linux distributions ship a default in the OpenSSL config that sets a security level (SSL_CTX_set_security_level) that precludes communication with the password server.

Remediating this at the server end is not a reasonable approach, so the client config needs to be changed to allow the connection to be established. Setting the security level to 1 allows the connection to proceed, so I proposed including an additional instruction in the Dockerfile that uses sed to update the configuration parameter.

sed -i 's/DEFAULT@SECLEVEL=2/DEFAULT@SECLEVEL=1/' /etc/ssl/openssl.cnf

Once that setting was updated, the script worked perfectly as it does on our physical and VM servers.
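
If a client is written in Python, the security level can instead be lowered for a single connection rather than container-wide. This is a sketch under assumptions: it presumes Python’s ssl module is linked against OpenSSL 1.1.0 or later, the function name is hypothetical, and it is not how our script (which relies on the system OpenSSL config) actually works.

```python
import ssl


def make_legacy_context(cert_file=None, key_file=None):
    """Build a client TLS context that accepts security level 1.

    cert_file / key_file are optional paths to a PEM client certificate
    and key (hypothetical parameters for illustration).
    """
    ctx = ssl.create_default_context()
    # Same cipher string the sed command writes into openssl.cnf,
    # but applied to this context only.
    ctx.set_ciphers("DEFAULT@SECLEVEL=1")
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    return ctx
```

The trade-off is the same as with the sed approach: weaker digests are accepted, so it is best limited to the one connection that needs it.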

I needed to verify the last time a bunch of servers were patched — basically to ensure compliance with the stated quarterly patching interval. This python script pulls the list of installed packages and the date for each package, sorts the info by date DESC, and then reports the latest date on any packages — as well as the number of packages updated on that date. If there’s only one … the system still might bear some investigation. But if a couple of dozen packages were updated in the past quarter … we don’t need to be too worried about turning up on the out-of-compliance report.

import subprocess
import re
import datetime
from collections import OrderedDict

def getFirstElement(odictInput):
    '''
    This function returns the first element from an ordered collection (an arbitrary element if an unordered collection is passed in)
    Input -- odictInput -- ordered collection
    Output -- type varies -- first element of ordered collection, arbitrary element of unordered collection
    '''
    return next(iter(odictInput))

listHosts = ['host01.example.com', 'host02.example.com', 'host03.example.com','host04.example.com','host05.example.com']

for strHost in listHosts:
    dictPatchDates = {}
    # List installed packages, most recently installed first, over SSH
    objResults = subprocess.Popen(['ssh', strHost, 'rpm', '-qa', '--last'],stdout=subprocess.PIPE)
    for strLine in objResults.stdout:
        strPackageInfo = strLine.decode('utf-8').rstrip()
        # Split out the "Day DD Mon YYYY" portion of the install timestamp
        listPackageInfo = re.split(r'\s*([a-zA-Z]{3,}\s[0-9]{2,}\s[a-zA-Z]{3,}\s[0-9]{2,})',strPackageInfo)
        if len(listPackageInfo) < 2:
            continue    # Line did not contain a recognizable date
        strUpdateDate = listPackageInfo[1]
        dateUpdateDate = datetime.datetime.strptime(strUpdateDate, "%a %d %b %Y").date()
        # Count the packages installed or updated on each date
        dictPatchDates[dateUpdateDate] = dictPatchDates.get(dateUpdateDate, 0) + 1
    objResults.wait()

    if not dictPatchDates:
        # Host unreachable or no parseable output
        print(f"{strHost}\tno package data returned")
        continue

    # Sort by date, newest first, and report the latest date with its package count
    dictOrderedPatchDates = OrderedDict(sorted(dictPatchDates.items(), key=lambda t: t[0],reverse=True))
    dateLatestPatch = getFirstElement(dictOrderedPatchDates)
    print(f"{strHost}\t{dateLatestPatch}\t{dictOrderedPatchDates[dateLatestPatch]}")
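
To see what the regular expression in the script pulls out of each line, here is a small standalone sketch. The sample line is invented to resemble `rpm -qa --last` output (the package name and timestamp are not from a real host), and the helper name is mine.

```python
import datetime
import re

# Same date pattern the script uses: "Day DD Mon YYYY"
DATE_PATTERN = re.compile(r"\s*([a-zA-Z]{3,}\s[0-9]{2,}\s[a-zA-Z]{3,}\s[0-9]{2,})")


def parse_install_date(line):
    """Return the install date from one `rpm -qa --last` line, or None."""
    match = DATE_PATTERN.search(line)
    if match is None:
        return None
    return datetime.datetime.strptime(match.group(1), "%a %d %b %Y").date()


# Made-up sample line for illustration only
sample = "bash-5.1.8-6.el9.x86_64    Tue 03 Jan 2023 10:15:22 AM EST"
print(parse_install_date(sample))
```

The time of day and time zone are deliberately ignored, since the report only cares about which day the packages were updated.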

There is a great deal of documentation available for building Gerbera from source on a variety of Linux flavors. Unfortunately, Fedora isn’t one of those (and the package names don’t exactly match up to let you replace “apt-get” with “yum” and be done). So I am quickly documenting the process we followed to build Gerbera from source.

The Fedora build of Gerbera has the binaries in /usr/bin and the manual build places the gerbera binary in /usr/local/bin — the build updates the unit file to reflect this change, but this means you want to back up any customizations you’ve made to the unit file before running “make install”.

You need the build system (cmake, g++, etc.) and the devel packages from the following table, as required by your build options.

Additional packages that we needed to install: automake, autoconf, libtool

| Library | Fedora Package | Required? | Note | Compile-time option | Default |
|---|---|---|---|---|---|
| libpupnp | libupnp-devel | XOR libnpupnp | pupnp | | |
| libnpupnp | Build from source (if needed) | XOR libupnp | I was only able to locate this as a source, not available from Fedora repos | WITH_NPUPNP | Disabled |
| libuuid | libuuid-devel | Required | Not required on *BSD | | |
| pugixml | pugixml-devel | Required | XML file and data support | | |
| libiconv | glibc-headers | Required | Charset conversion | | |
| sqlite3 | sqlite-devel | Required | Database storage | | |
| zlib | zlib-devel | Required | Data compression | | |
| fmtlib | fmt-devel | Required | Fast string formatting | | |
| spdlog | spdlog-devel | Required | Runtime logging | | |
| duktape | duktape-devel | Optional | Scripting Support | WITH_JS | Enabled |
| mysql | mariadb-devel | Optional | Alternate database storage | WITH_MYSQL | Disabled |
| curl | libcurl-devel | Optional | Enables web services | WITH_CURL | Enabled |
| taglib | taglib-devel | Optional | Audio tag support | WITH_TAGLIB | Enabled |
| libmagic | file-devel | Optional | File type detection | WITH_MAGIC | Enabled |
| libmatroska | libmatroska-devel | Optional | MKV metadata required for MKV | WITH_MATROSKA | Enabled |
| libebml | libebml-devel | Optional | MKV metadata required for MKV | WITH_MATROSKA | Enabled |
| ffmpeg/libav | ffmpeg-free-devel | Optional | File metadata | WITH_AVCODEC | Disabled |
| libexif | libexif-devel | Optional | JPEG Exif metadata | WITH_EXIF | Enabled |
| libexiv2 | exiv2-devel | Optional | Exif, IPTC, XMP metadata | WITH_EXIV2 | Disabled |
| lastfmlib | liblastfm-devel | Optional | Enables scrobbling | WITH_LASTFM | Disabled |
| ffmpegthumbnailer | ffmpegthumbnailer-devel | Optional | Generate video thumbnails | WITH_FFMPEGTHUMBNAILER | Disabled |
| inotify | glibc-headers | Optional | Efficient file monitoring | WITH_INOTIFY | |
| libavformat | libavformat-free-devel | Required for 2.0 | | | |
| libavutil | libavutil-free-devel | Required for 2.0 | | | |
| libavcodec | libavcodec-free-devel | Required for 2.0 | | | |

Then follow the generalized instructions — cd into the folder where you want to run the build and run (customizing the cmake line as you wish):

git clone https://github.com/gerbera/gerbera.git
mkdir build
cd build
cmake ../gerbera -DWITH_MAGIC=1 -DWITH_MYSQL=1 -DWITH_CURL=1 -DWITH_INOTIFY=1 -DWITH_JS=1 -DWITH_TAGLIB=1 -DWITH_AVCODEC=1 -DWITH_FFMPEGTHUMBNAILER=0 -DWITH_EXIF=1 -DWITH_EXIV2=1 -DWITH_SYSTEMD=1 -DWITH_LASTFM=0 -DWITH_DEBUG=1
make -j4
sudo make install

As with the Gerbera binary, the Fedora build places the web content in /usr/share/gerbera and the manual build places the web content into /usr/local/share/gerbera — yes, you can change the paths in the build, and I’m sure you can clue Gerbera into the new web file location. I opted for the quick/easy/lazy solution of running

mv /usr/share/gerbera /usr/share/gerbera.old
ln -s /usr/local/share/gerbera /usr/share/

to symlink the location where my config expects the web components to the new files.

On the first start of Gerbera, SQL scripts may be run to update the database. Don’t stop or kill the service during this process, because there’s no checkpoint restart of the upgrade process. We backed up /etc/gerbera/gerbera.db prior to starting our Gerbera installation. We’ve also wiped the database files to start from scratch and test changes that impacted how items are ingested into the database.

Fin.

When we are decommissioning a website (or web server), I always watch the log files to ensure there aren’t a lot of people still accessing it. Sometimes there are, and it’s worth tracking them down individually to clue them into the site’s imminent demise. Usually there aren’t, and it’s just a confirmation that our decommissioning efforts won’t be impactful.

This Python script looks for IP addresses in the log files and outputs each IP and its access count per log file. It’s not great if a bunch of IP addresses show up in the recorded URI strings, but it’s good enough for 99% of our log data.

import os
import re
from collections import Counter

def parseApacheHTTPDLog(strLogFile):
    # Matches anything shaped like an IPv4 address, anywhere in the file
    regexIPAddress = r'\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3}'
    with open(strLogFile) as f:
        objLog = f.read()
    listIPAddresses = re.findall(regexIPAddress,objLog)
    counterAccessByIP = Counter(listIPAddresses)
    for strIP, iAccessCount in counterAccessByIP.items():
        print(f"{strLogFile}\t{strIP}\t{iAccessCount}")

if __name__ == '__main__':
    strLogDirectory = '/var/log/httpd/'
    for strFileName in os.listdir(strLogDirectory):
        if "access_log" in strFileName:
        #if "hostname.example.com" in strFileName and "access_log" in strFileName:
            parseApacheHTTPDLog(os.path.join(strLogDirectory, strFileName))
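
For the remaining 1% of logs, where IP addresses appear inside the requested URI, one option is to count only the address at the start of each line. This is a sketch that assumes the common or combined log format, where the client address is the first field; the function name is illustrative and the log lines in the usage note are made up.

```python
import re
from collections import Counter

# Anchor to the start of each line so only the leading client address is counted.
CLIENT_IP_PATTERN = re.compile(r"^(\d{1,3}(?:\.\d{1,3}){3})\s", re.MULTILINE)


def count_client_ips(log_text):
    """Return a Counter of client IPv4 addresses, ignoring IPs inside URIs."""
    return Counter(CLIENT_IP_PATTERN.findall(log_text))
```

A request such as `GET /?src=192.168.0.9` from 10.1.2.3 is then counted once for 10.1.2.3 and not at all for 192.168.0.9.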