Shell into pod

kubectl exec -it <pod_name> -- /bin/bash

I’m building a quick image to test permissions to a folder structure for a friend … so I’m making a quick note about how I’m building this test image so I don’t have to keep re-typing it all.

FROM python:3.7-slim

RUN apt-get update && apt-get install -y curl --no-install-recommends

RUN mkdir -p /srv/ljr/test

# Create service account

RUN groupadd -g 30001 webuser && useradd --comment "my service account" -u 30001 -g 30001 --shell /sbin/nologin --no-create-home webuser

# Copy a bunch of stuff various places under /srv

# Set ownership on /srv folder tree

RUN chown -R 30001:30001 /srv

USER 30001:30001

ENTRYPOINT ["/bin/bash", "/entrypoint.sh"]

docker build -t "ljr:latest" .

docker run --rm -it --entrypoint "/bin/bash" ljr
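Since the base image already ships Python, a short script run inside the container can report which paths the service account cannot write to. This is only a sketch: `find_unwritable` is a hypothetical helper name, and it assumes the script runs as the `USER` set in the Dockerfile (30001), not as root.

```python
import os


def find_unwritable(root):
    """Return every directory and file under root the current user cannot write to."""
    blocked = []
    # os.walk silently skips directories it cannot list, so an unreadable
    # directory shows up here only as a non-writable entry of its parent walk.
    for dirpath, _dirnames, filenames in os.walk(root):
        candidates = [dirpath] + [os.path.join(dirpath, name) for name in filenames]
        blocked.extend(path for path in candidates if not os.access(path, os.W_OK))
    return blocked


if __name__ == "__main__":
    # /srv is the tree that the Dockerfile chowns to 30001:30001
    for path in find_unwritable("/srv"):
        print(f"NOT WRITABLE: {path}")
```

An empty result means everything under /srv is writable by the account the container runs as.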

We have an index that was created without a lifecycle policy, and it’s taking up about 300GB of our 1.5T on the dev server. I don’t want to delete it, mostly because I don’t know why it’s there. But cleaning up old data seemed like a reasonable compromise, so I used a delete-by-query to remove every document at or before a cutoff timestamp:

POST /metricbeat_kafka-/_delete_by_query

{

"query": {

"range" : {

"@timestamp" : {

"lte" : "2021-02-04T01:47:44.880Z"

}

}

}

}
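The same cleanup could be scripted, counting the matching documents before deleting them. This is a rough sketch, assuming the elasticsearch-py 7.x client; `build_delete_query` and `purge_old_documents` are hypothetical helper names, and the index name is left for the caller to supply.

```python
from datetime import datetime, timezone


def build_delete_query(cutoff):
    """Build a delete-by-query body matching documents at or before cutoff.

    cutoff is expected to be a timezone-aware datetime.
    """
    utc = cutoff.astimezone(timezone.utc)
    # Trim microseconds to milliseconds to match the timestamp format used above
    stamp = utc.strftime("%Y-%m-%dT%H:%M:%S.%f")[:-3] + "Z"
    return {"query": {"range": {"@timestamp": {"lte": stamp}}}}


def purge_old_documents(client, index, cutoff):
    """Report how many documents match, delete them, and return the deleted count.

    client is assumed to be an elasticsearch-py 7.x Elasticsearch instance.
    """
    body = build_delete_query(cutoff)
    matched = client.count(index=index, body=body)["count"]
    print(f"{matched} document(s) at or before {cutoff.isoformat()} in {index}")
    # conflicts="proceed" keeps going if a document changes during the delete
    result = client.delete_by_query(index=index, body=body, conflicts="proceed")
    return result["deleted"]
```

Checking the count first is a cheap sanity check that the range matches what you expect before anything is removed.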

I’ve been playing around with script fields to manipulate data returned by ElasticSearch queries. As an example, data where there are a few nested objects with values that need to be multiplied together:

{

"order": {

"item1": {

"cost": 31.55,

"count": 111

},

"item2": {

"cost": 62.55,

"count": 222

},

"item3": {

"cost": 93.55,

"count": 333

}

}

}

And to retrieve records and multiply cost by count:

{

"query" : { "match_all" : {} },

"_source": ["order.item*.item", "order.item*.count", "order.item*.cost"],

"script_fields" : {

"total_cost_1" : {

"script" :

{

"lang": "painless",

"source": "return doc['order.item1.cost'].value * doc['order.item1.count'].value;"

}

},

"total_cost_2" : {

"script" :

{

"lang": "painless",

"source": "return doc['order.item2.cost'].value * doc['order.item2.count'].value;"

}

},

"total_cost_3" : {

"script" :

{

"lang": "painless",

"source": "return doc['order.item3.cost'].value * doc['order.item3.count'].value;"

}

}

}

}

Unfortunately, I cannot find any way to iterate across an arbitrary number of item# objects nested in the order object. So, for now, I think the data manipulation will be done in the program using the API to retrieve data. Still, it was good to learn how to address values in the doc record.
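On the client side, iterating over however many item objects come back is straightforward. A minimal sketch, assuming each hit's `_source` has the shape shown above (`item_totals` is a hypothetical helper, not part of any Elasticsearch API):

```python
def item_totals(source):
    """Return {item_name: cost * count} for every item object under source['order']."""
    totals = {}
    for name, item in source.get("order", {}).items():
        # Skip anything under "order" that is not an item with both values
        if isinstance(item, dict) and "cost" in item and "count" in item:
            totals[name] = item["cost"] * item["count"]
    return totals


# The sample document from above, as it would appear in a hit's _source
sample_source = {
    "order": {
        "item1": {"cost": 31.55, "count": 111},
        "item2": {"cost": 62.55, "count": 222},
        "item3": {"cost": 93.55, "count": 333},
    }
}

for name, total in sorted(item_totals(sample_source).items()):
    print(f"{name}: {total:.2f}")
```

In a real program, `sample_source` would be replaced by `hit["_source"]` for each hit in the search response.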

This example uses Kerberos for SSO authentication using Docker-ized NGINX. To instantiate the sandbox container, I am mapping the conf.d folder into the container and publishing ports 80 and 443:

docker run -dit --name authproxy -v /usr/nginx/conf.d:/etc/nginx/conf.d -p 80:80 -p 443:443 centos:latest

Shell into the container, install Kerberos, and configure it to use your domain (in this example, it is my home domain).

docker exec -it authproxy bash

# Fix the repos – this is a docker thing, evidently …

cd /etc/yum.repos.d/

sed -i 's/mirrorlist/#mirrorlist/g' /etc/yum.repos.d/CentOS-*

sed -i 's|#baseurl=http://mirror.centos.org|baseurl=http://vault.centos.org|g' /etc/yum.repos.d/CentOS-*

# And update everything just because

dnf update

# Install required stuff

dnf install vim wget git gcc make pcre-devel zlib-devel krb5-devel

Install NGINX from source and include the spnego-http-auth-nginx-module module

wget http://nginx.org/download/nginx-1.21.6.tar.gz

gunzip nginx-1.21.6.tar.gz

tar vxf nginx-1.21.6.tar

cd nginx-1.21.6/

git clone https://github.com/stnoonan/spnego-http-auth-nginx-module.git

dnf install gcc make pcre-devel zlib-devel krb5-devel

./configure --add-module=spnego-http-auth-nginx-module

make

make install

Configure Kerberos on the server to use your domain:

root@aadac0aa21d5:/# cat /etc/krb5.conf

includedir /etc/krb5.conf.d/

[logging]

default = FILE:/var/log/krb5libs.log

kdc = FILE:/var/log/krb5kdc.log

admin_server = FILE:/var/log/kadmind.log

[libdefaults]

dns_lookup_realm = false

ticket_lifetime = 24h

renew_lifetime = 7d

forwardable = true

rdns = false

default_realm = EXAMPLE.COM

# allow_weak_crypto = true

# default_tgs_enctypes = arcfour-hmac-md5 des-cbc-crc des-cbc-md5

# default_tkt_enctypes = arcfour-hmac-md5 des-cbc-crc des-cbc-md5

default_ccache_name = KEYRING:persistent:%{uid}

[realms]

EXAMPLE.COM= {

kdc = DC01.EXAMPLE.COM

admin_server = DC01.EXAMPLE.COM

}

Create a service account in AD & obtain a keytab file:

ktpass /out nginx.keytab /princ HTTP/docker.example.com@EXAMPLE.COM -SetUPN /mapuser nginx /crypto AES256-SHA1 /ptype KRB5_NT_PRINCIPAL /pass Th2s1sth3Pa=s -SetPass /target dc01.example.com

Transfer the keytab file to the NGINX server. Add the following to the server{} section or location{} section to require authentication:

auth_gss on;

auth_gss_keytab /path/to/nginx/conf/nginx.keytab;

auth_gss_delegate_credentials on;

You will also need to insert header information into the nginx config:

proxy_pass http://www.example.com/authtest/;

proxy_set_header Host "www.example.com"; # I need this to match the host header on my server, usually can use data from $host

proxy_set_header X-Original-URI $request_uri; # Forward along request URI

proxy_set_header X-Real-IP $remote_addr; # pass on real client's IP

proxy_set_header X-Forwarded-For "LJRAuthPrxyTest";

proxy_set_header X-Forwarded-Proto $scheme;

proxy_set_header Authorization $http_authorization;

proxy_pass_header Authorization;

proxy_set_header X-WEBAUTH-USER $remote_user;

proxy_read_timeout 900;

Run NGINX: /usr/local/nginx/sbin/nginx

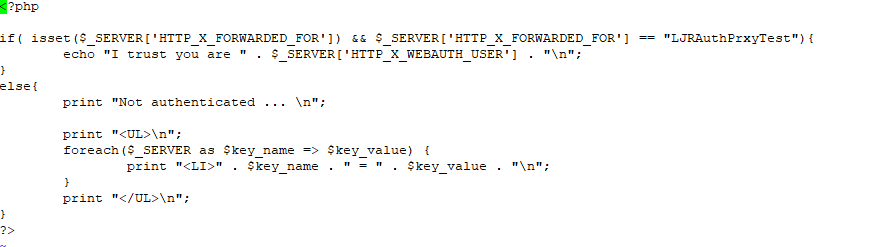

In and of itself, this is the equivalent of requiring authentication – any user – to access a site. The trick with an auth proxy is that the server must trust the header data you inserted – in this case, I have custom PHP code that looks for X-Forwarded-For to be “LJRAuthPrxyTest” and, if it sees that string, reads X-WEBAUTH-USER for the user’s logon name.

In my example, the Apache site is configured to only accept connections from my NGINX instance:

<RequireAll>

Require ip 10.1.3.5

</RequireAll>

This prevents someone from playing around with header insertion and spoofing authentication.
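The backend logic amounts to two checks before the header is believed. The following is a Python sketch of that idea, not the PHP code running on my site; `authenticated_user` is a hypothetical function, and the IP address and marker string are the values from this example.

```python
TRUSTED_PROXY_IP = "10.1.3.5"     # the NGINX instance allowed by the Apache config
PROXY_MARKER = "LJRAuthPrxyTest"  # value NGINX inserts into X-Forwarded-For


def authenticated_user(remote_addr, headers):
    """Return the logon name passed by the proxy, or None if the request is not trusted."""
    # HTTP header names are case-insensitive, so normalize before looking them up
    normalized = {key.lower(): value for key, value in headers.items()}
    if remote_addr != TRUSTED_PROXY_IP:
        return None  # did not come through the auth proxy
    if normalized.get("x-forwarded-for") != PROXY_MARKER:
        return None  # proxy marker missing or wrong
    return normalized.get("x-webauth-user") or None
```

For example, `authenticated_user("10.1.3.5", {"X-Forwarded-For": "LJRAuthPrxyTest", "X-WEBAUTH-USER": "lisa"})` yields the user name, while the same headers arriving from any other address are ignored.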

Some applications allow auth proxying, and the server documentation will provide guidance on what header values need to be used.

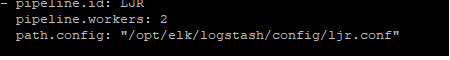

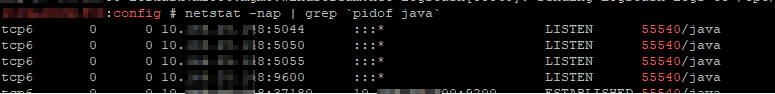

The process to upgrade minor releases of Logstash is quite simple: stop the service, drop the binaries in place, and start the service. In this case, my upgrade process is slightly complicated by the fact that our binaries aren’t installed to the “normal” location from the RPM. I am upgrading from 7.7.0 to 7.17.4.

The first step is, obviously, to download the Logstash release you want – in this case, it is 7.17.4 as upgrading across major releases is not supported.

cd /tmp

mkdir logstash

mv logstash-7.17.4-x86_64.rpm ./logstash

cd /tmp/logstash

rpm2cpio logstash-7.17.4-x86_64.rpm | cpio -idmv

systemctl stop logstash

mv /opt/elk/logstash /opt/elk/logstash-7.7.0

mv /tmp/logstash/usr/share/logstash /opt/elk/

mkdir /var/log/logstash

mkdir /var/lib/logstash

mv /tmp/logstash/etc/logstash /etc/logstash

cd /etc/logstash

mkdir rpmnew

mv jvm.options ./rpmnew/

mv log* ./rpmnew/

mv pipelines.yml ./rpmnew/

mv startup.options ./rpmnew/

cp -r /opt/elk/logstash-7.7.0/config/* ./

ln -s /opt/elk/logstash /usr/share/logstash

ln -s /etc/logstash /opt/elk/logstash/config

chown -R elasticsearch:elasticsearch /opt/elk/logstash

chown -R elasticsearch:elasticsearch /var/log/logstash

chown -R elasticsearch:elasticsearch /var/lib/logstash

chown -R elasticsearch:elasticsearch /etc/logstash

systemctl start logstash

systemctl status logstash

/opt/elk/logstash/bin/logstash --version

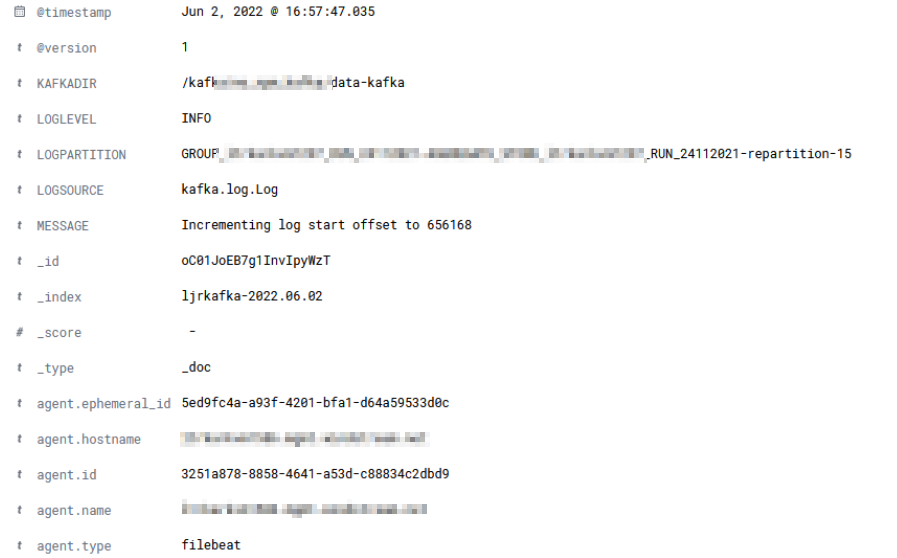

Before sending data, you need a pipeline on Logstash to accept the data. If you are using an existing pipeline, you just need the proper host and port for the pipeline to use in the Filebeat configuration. If you need a new pipeline, the input needs to be of type ‘beats’.

# Sample Pipeline Config:

input {

beats {

host => "logstashserver.example.com"

port => 5057

client_inactivity_timeout => "3000"

}

}

filter {

grok{

match => {"message"=>"\[%{TIMESTAMP_ISO8601:timestamp}] %{DATA:LOGLEVEL} \[Log partition\=%{DATA:LOGPARTITION}, dir\=%{DATA:KAFKADIR}\] %{DATA:MESSAGE} \(%{DATA:LOGSOURCE}\)"}

}

}

output {

elasticsearch {

action => "index"

hosts => ["https://eshost.example.com:9200"]

ssl => true

cacert => ["/path/to/certs/CA_Chain.pem"]

ssl_certificate_verification => true

user =>"us3r1d"

password => "p@s5w0rd"

index => "ljrkafka-%{+YYYY.MM.dd}"

}

}

Download the appropriate version from https://www.elastic.co/downloads/past-releases#filebeat – I am currently using 7.17.4 as we have a few CentOS + servers.

Install the package (rpm -ihv filebeat-7.17.4-x86_64.rpm) – the installation package places the configuration files in /etc/filebeat and the binaries and other “stuff” in /usr/share/filebeat

Edit /etc/filebeat/filebeat.yml

Run filebeat in debug mode from the command line and watch for success or failure.

filebeat -e -c /etc/filebeat/filebeat.yml -d "*"

Assuming everything is running well, use systemctl start filebeat to run the service and systemctl enable filebeat to set it to launch on boot.

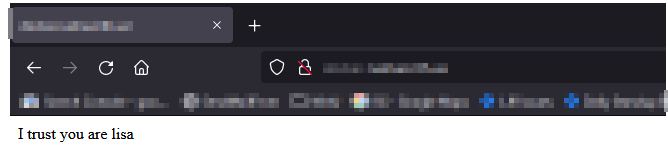

Filebeat sends each log line as a JSON object to the Logstash server, where the grok rule parses it. When you view the record in Kibana, you should see any fields parsed out with your grok rule – in this case, we have KAFKADIR, LOGLEVEL, LOGPARTITION, LOGSOURCE, and MESSAGE fields.
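To sanity-check a grok rule before deploying it, the pattern can be approximated with a plain regular expression. This is a rough sketch, not a grok implementation: `DATA` is translated as a lazy `.*?`, the timestamp pattern is a simplified stand-in for `TIMESTAMP_ISO8601`, and the sample log line is made up for illustration.

```python
import re

# Approximation of the grok rule from the sample pipeline config
KAFKA_LINE = re.compile(
    r"\[(?P<timestamp>\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(?:[.,]\d+)?)\] "
    r"(?P<LOGLEVEL>.*?) "
    r"\[Log partition=(?P<LOGPARTITION>.*?), dir=(?P<KAFKADIR>.*?)\] "
    r"(?P<MESSAGE>.*?) "
    r"\((?P<LOGSOURCE>.*?)\)"
)


def parse_kafka_line(line):
    """Return the named fields as a dict, or None when the line does not match."""
    # search() rather than match(): grok patterns are not anchored either
    found = KAFKA_LINE.search(line)
    return found.groupdict() if found else None


# Hypothetical log line in the shape the rule expects
SAMPLE_LINE = (
    "[2022-06-01 12:34:56,789] INFO [Log partition=ljr-topic-0, dir=/var/kafka/data] "
    "Rolled new log segment at offset 12345 (kafka.log.Log)"
)

if __name__ == "__main__":
    for field, value in parse_kafka_line(SAMPLE_LINE).items():
        print(f"{field}: {value}")
```

Lines that return `None` here are the ones likely to be tagged `_grokparsefailure` by Logstash, so this can be a quick way to find log formats the rule misses.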

Create a logstash pipeline

We now have a Logstash data collector ready. Next, we need to create the index templates in Elasticsearch.

We have a number of Logstash servers gathering data from various Filebeat sources. We’ve recently experienced a problem where the pipeline stops getting data for some, but not all, of those sources, and restarting a non-functional Filebeat source only sends data for ten minutes or so. We were able to rectify the immediate problem by restarting our Logstash services (IT troubleshooting step #1: we restarted all of the Filebeats and, when that didn’t help, moved on to restarting the Logstashes).

But we need a way to ensure this isn’t happening; losing days of log data from some sources is really bad. So I put together a Python script to verify there’s something coming in from each of the Filebeat sources.

pip install elasticsearch==7.13.4

#!/usr/bin/env python3
# -*- coding: utf-8 -*-
import time

# Modules for email alerting
import smtplib
from email.mime.multipart import MIMEMultipart
from email.mime.text import MIMEText

# urllib3 is installed as a dependency of the elasticsearch package
import urllib3
from elasticsearch import Elasticsearch

# Disable warnings that not verifying SSL trust isn't a good idea
urllib3.disable_warnings()

# Config variables
strSenderAddress = "devnull@example.com"
strRecipientAddress = "me@example.com"
strSMTPHostname = "mail.example.com"
iSMTPPort = 25

listSplunkRelayHosts = ['host293', 'host590', 'host591', 'host022', 'host014', 'host135']
iAgeThreshold = 3600 # Alert if last document is more than an hour old (3600 seconds)

strAlert = None

# One client is enough for all of the hosts checked below
elastic_client = Elasticsearch("https://elasticsearchhost.example.com:9200", http_auth=('rouser','r0pAs5w0rD'), verify_certs=False)

for strRelayHost in listSplunkRelayHosts:
    iCurrentUnixTimestamp = time.time()

    # Newest document from this host, ignoring anything read from /var/log/messages
    query_body = {
        "sort": {
            "@timestamp": {
                "order": "desc"
            }
        },
        "query": {
            "bool": {
                "must": {
                    "term": {
                        "host.hostname": strRelayHost
                    }
                },
                "must_not": {
                    "term": {
                        "source": "/var/log/messages"
                    }
                }
            }
        }
    }

    result = elastic_client.search(index="network_syslog*", body=query_body, size=1)
    all_hits = result['hits']['hits']

    iDocumentAge = None
    for doc in all_hits:
        # The sort value is the document's @timestamp in epoch milliseconds
        iDocumentAge = ((iCurrentUnixTimestamp * 1000) - doc.get('sort')[0]) / 1000.0

    if iDocumentAge is not None:
        if iDocumentAge > iAgeThreshold:
            if strAlert is None:
                strAlert = f"<tr><td>{strRelayHost}</td><td>{iDocumentAge}</td></tr>"
            else:
                strAlert = f"{strAlert}\n<tr><td>{strRelayHost}</td><td>{iDocumentAge}</td></tr>\n"
            print(f"PROBLEM - For {strRelayHost}, document age is {iDocumentAge} second(s)")
        else:
            print(f"GOOD - For {strRelayHost}, document age is {iDocumentAge} second(s)")
    else:
        print(f"PROBLEM - For {strRelayHost}, no recent record found")

# Send a single email listing every host whose newest document is too old
if strAlert is not None:
    msg = MIMEMultipart('alternative')
    msg['Subject'] = "ELK Filebeat Alert"
    msg['From'] = strSenderAddress
    msg['To'] = strRecipientAddress

    strHTMLMessage = f"<html><body><table><tr><th>Server</th><th>Document Age</th></tr>{strAlert}</table></body></html>"
    strTextMessage = strAlert

    part1 = MIMEText(strTextMessage, 'plain')
    part2 = MIMEText(strHTMLMessage, 'html')
    msg.attach(part1)
    msg.attach(part2)

    s = smtplib.SMTP(strSMTPHostname, iSMTPPort)
    s.sendmail(strSenderAddress, strRecipientAddress, msg.as_string())
    s.quit()