I mentioned that I had inherited a Git implementation last week. Here is the documentation I created to teach my coworkers what Git is and how to use it. Some isn’t applicable outside of our environment (you won’t care about the AD groups that control access to the system), some is applicable for small non-dedicated development teams … but I figured I’d post the presentation and quick reference guide on the Internet in case it was useful to someone else.

Background:

Git is a system that provides version control for files – we’re using it to control script/program code versions (source control management), but I could put this document in Git and use the version control to manage edits to the document. You can use it to maintain configuration files – allowing config changes to be traceable. You could use it as a cookbook if you were so inclined – a chef tinkering with a recipe might be interested in going back a few versions and trying something else.

Git provides some functionality that overlaps with other systems – you could, for instance, import our scripts to SharePoint and make code changes within SharePoint. The individual replacing the file is recorded. If a previous version is needed, SharePoint maintains previous versions that can be recovered. Why use Git instead of SharePoint? Git makes it easier to have multiple developers working on a program, including functions to “merge” the edited files together. You can have different versions of the whole project – in SharePoint, I can see different versions of each file, but I have no way of correlating which version of file x.cs goes with y.h … which makes the versioning less useful. The overlap runs the other way too: I’m speaking about Git as a source code management platform, but we use it to maintain configuration files as well. There are even uses for Git outside of IT and source control – anything where tracking who changed what and when is valuable could leverage Git.

If you want the history, LMGTFY 🙂 Or, you know, read Wikipedia. TL;DR: It’s one of Torvalds’s projects, initially used for Linux kernel development, and it has since become a widely adopted source control management platform. If you have ever looked at a project on GitHub, you have seen a little bit of Git. GitHub is a massive, public Git hosting service. Because Git has significant adoption within the OpenSource community, there are a lot of good documents on its internal mechanisms (https://book.git-scm.com/book/en/v2/Git-Internals-Git-Objects for example, if you are interested in how data is stored), how it is used (Google “git cheatsheet” and there are thousands of them, or full books like https://git-scm.com/book/en/v2), and oddball errors that might crop up.

Implementation

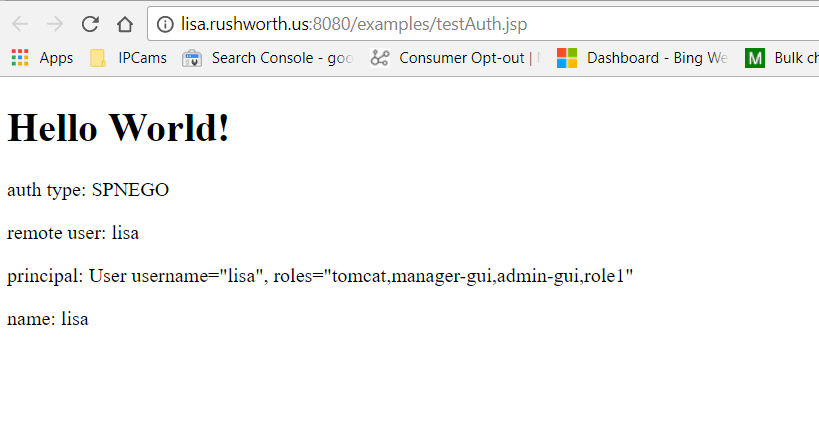

We have a Bonobo Git server using Active Directory for both authentication and authorization. The server source is available on GitHub (https://github.com/jakubgarfield/Bonobo-Git-Server) where you can see issues and be included in conversation about source updates (subscribing lets you know when new versions should be available for install).

Questions and bugs regarding the program are maintained in the GitHub issues section. The Google forum that may come up in searches is not active and was retained for history.

This brainshare is primarily to show client-side usage of the Git server. Server setup, configuration, and management are not the focus. One thing I will highlight on the server config: the groups used to provide authorization are not preexisting or nested groups. This means new team members will need to be added to the appropriate “Windstream CSG Git …” group to use the server.

<add key="ActiveDirectoryMemberGroupName" value="Windstream CSG Git Users" />

<add key="ActiveDirectoryTeamMapping" value="VDI=Windstream CSG Git VDI,SharePoint=Windstream CSG Git SharePoint, Directory Design=Windstream CSG Git Directory Design" />

<add key="ActiveDirectoryRoleMapping" value="Administrator=Windstream CSG Git Admins" />

The above snippet is from the Web.config file located on the server at F:\inetpub\www\CSG – if new groups need to be added to the Git server, that is where the magic happens.

Some Terminology:

A repository is a storage location. It can store one file, it could be a whole bunch of files that make up a single program (e.g. the CSOCheck Visual Studio project), it could be a bunch of independent programs that have similar purposes (e.g. ‘Provisioning’ that holds all our provisioning scripts). A repository could be all our code glommed into one place (don’t do this – it makes maintaining an individual program more difficult).

A branch is a separate line of development within a repository – think of it as another copy of the project. You don’t want to directly edit the in-use production code (we do but this is certainly not a programming best practice!) – a branch is a copy on which development is done. Once development has been completed, the branch is merged back into the master copy. Looking at Git with small projects and a small number of developers, I wouldn’t expect to see a lot of branches on a project. A large program with a lot of dedicated developers may have some break/fix branches as well as longer term feature enhancement branches.

A fork is a personal copy of a repository. In OpenSource development, forks avoid making changes in someone else’s repository. You create your fork, work within your copy, then offer the changes in your fork for inclusion in the project. We don’t have much need to create forks — we would create a branch within the project.

Project – Bonobo does not seem to have projects, but other Git implementations do. A project includes the repository, an issues log, pull requests, and sometimes even a Wiki for the application. If you see someone referring to a project, for us that is just the repository.

The project maintainer is the individual who “owns” the project – this isn’t a project sponsor (a non-tech individual who owns a business relationship) but a technical supervisor for the development work who may also have project sponsor define-requirement type roles. The maintainer decides if changes and features are added. You can suggest changes or features – in OpenSource projects, review the existing issues to see if the feature was already requested (and make a new issue to request the feature if one does not exist) before spending a lot of time working on code that will not be accepted. Your idea may be something people are excited to see included in the project. Or it may be something they don’t want (you can always make a fork and add the feature to your iteration of the project). Even a bugfix – your proposed solution may be accepted. Or there may be a reason the maintainer wants to use a different approach to the issue. We do not have project maintainers.

Commit – this is basically making changes to the branch (add a file, delete a file, or modify a file). A commit should represent a single change. By that, I don’t mean every time you change a line, make a commit. You may well have to update a hundred lines of code across five different files to resolve an issue or implement a feature. But it’s just *one* issue or feature being implemented in the commit. You shouldn’t have a commit that implements SSL encryption in LDAP authentication and allows individuals to approve requests for direct reports. These two things have nothing to do with each other, even if they happen to be the two cards you’ve worked on today.

Commit messages are notes associated with commits where you can indicate what is being changed in the commit. A “good” commit message is like well commented code – don’t provide too much info like “I added XYZ to line 81 on file abc.def”, but don’t write “Bug fixes” either. A commit message should convey what has been changed without someone having to diff the versions (i.e. saves time). In more formal software development, commit messages also aid in the creation of release notes. Something like “Changed new user template to include ourOrgPerson objectClass” provides enough detail that we can tell what the commit did – if someone wants to find out what lines got edited, they can diff the files and tell. You can view the commit history in the web site or by using “git log”.
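To get a feel for how commit messages read in the history, here is a small sketch that builds a throwaway repository and lists it with git log. It assumes a POSIX shell (the Git Bash installed alongside the Windows client should do); the directory name, file contents, and the "Demo User" identity are made up for the demo.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q logdemo
cd logdemo
git config user.name "Demo User"           # identity used only inside this demo repository
git config user.email "demo@example.com"

echo 'print "Hello, world";' > helloworld.pl
git add helloworld.pl
git commit -q -m "Created project"

echo 'print "Hej, world";' >> helloworld.pl
git add helloworld.pl
git commit -q -m "Added Swedish greeting"

# One line per commit, newest first: abbreviated commit ID, then the message
git log --oneline

# Only the messages, which is what someone skims when hunting for a change
messages=$(git log --format=%s)
echo "$messages"
```

If the messages alone tell you which commit to look at, they are doing their job.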

Push is the process of updating the server repository with changes you have made on your local repository.

Pull Request is a term you may encounter when reading Git documentation or participating in GitHub. The request basically clues someone into the fact you’ve got code to be reviewed or integrated into an upstream branch. The project maintainer would, once the changes had been reviewed and agreed upon, merge the feature into the repository and close the pull request. This is not a process we are following, nor are code-related discussions or issue lists tracked within the Git server.

Deploy – once the pull request has been approved, you can deploy and test the changes. If the changes do not work, you roll back by re-deploying the existing master.

Merge is used to combine an individual’s local repository with a server-housed copy of the branch or to combine two branches.

GitHub is an Internet-based Git hosting service used by a lot of people and a lot of OpenSource projects. Projects are publicly readable (well, projects held in free accounts – there’s an add-on fee that allows you to maintain private projects). Yes, we could just get GitHub enterprise licenses and use the hosted service. We elected to deploy an internally hosted and maintained server.

Process Flow:

In a simple development environment like we have (we’re not dedicated programmers working on enormous applications), branches are straightforward. We’ve got a master for a project. When we encounter a problem, or wish to expand functionality, we make a working branch. Sort the issue or add the feature, commit. Deploy and test the code, then merge your working branch back into master.

If there is only one person working on a project at a time, merge conflicts are not really a thing. We don’t have ten different branches, we don’t have branches from branches (e.g. a branch for implementing external authentication which then has a branch for LDAP, DB table, and external authentication providers. Then the external authentication provider branch has a branch for Facebook, .NET, and Google authentication providers.). When you have a tree full of branches, you need to resolve merge conflicts (pick which change makes it) before you can merge your pull request branch.

A lot of “how to use Git” is process-related and not technical how-to stuff. Questions like “what are your software development lifecycle management processes?” and “What are your criteria for creating a new branch?”

When I worked with full-time developers, we created an “EmerStaging” branch for development on critical incidents and a “DevStaging” branch for development on non-critical incidents. The EmerStaging branch was only intended to be around for a few days – the branch would start out identical to the master, whatever big deal issue would be sorted, then the branch merged back into the master. These changes would then be sync’d down to all other branches (we don’t want the bug to impact development or, worse, to be reintroduced as someone merges in their long-term development project). The DevStaging branch was always present – there’s always a backlog of lower priority bug-fix type stuff to be done – and the project maintainer would ensure the downstream branches were updated when they processed pull requests. In addition to these break/fix branches, a new branch existed for the long-term development work – next version application or specific new features that had not been assigned to a specific release iteration.

Our environment is not so complex – we should be able to get by with one development branch when there is active development on a project and only the master branch when changes are not being made. Following this process, we avoid the challenges of synchronizing and merging multiple branches and sub-branches.

The Git Client

Simply put, a Git client puts files on the local disk and pushes those files back to the server. The first step is getting a git client installed. The examples I am showing today are using the CLI utilities from https://git-scm.com/download/win simply because I already use them at home (it’s the version . Yes, there are other git clients. Lots. If you have used a different client that you prefer, go for it. Different clients will not corrupt a repository.

Some IDEs have Git integration – their own Git client – which may or may not work with our implementation (some are specific to GitHub / GitHub Enterprise, which is not the same thing). If you are using an IDE, it may be convenient to research integrating your IDE directly with Git. There is no need to, though – you can use the command line utilities to retrieve files, switch over to the IDE for your development work, and then use the command line utilities to add, commit, and merge your changes.

To install the Git-SCM clients, download and run the installer. Selecting the defaults during the installation is sufficient – although if you do not have the Win32 port of the GNU utilities, you can select the third option to get grep and such in DOS.

Once the installation completes, grab the two files from \\CWWAPP695\c$\Program Files\Git\mingw64\ssl\certs and put them into your install path\git\mingw64\ssl\certs folder (I renamed the existing ones, but there’s no reason not to delete them). If you see the error “SSL certificate problem: unable to get local issuer certificate”, re-read the last sentence and try again.

Identify a folder on your computer into which you want to clone projects. You can store different projects in distinct locations or you can have a top-level folder in which all your projects are housed.

Creating A New Repository

Log into https://csggit.windstream.com using your Active Directory username and password (no need to specify domain). Repositories are sorted into groups – a group may be a single application project. For example, “AD Password Filter”. A group may contain several different application projects – for example, “Auth Samples”.

To create a new repository, click the big blue button in the upper right-hand corner that says exactly that. Provide a name for the repository – this cannot contain spaces, but should be descriptive enough that people do not need to actually read through the code to see what the program does. I am making a project called “HelloWorld” because … tradition.

Supplying a group name will sort the repository into a group on that first page – please do this, even if your group is your program. Otherwise it’s like creating all of your files in one folder … fine for a small number of files, but quickly difficult to look at. We may want to make a Misc group to hold oddball one-off programs.

The description field provides a place for freeform text describing the purpose of the program. This doesn’t have to be long, but it would be nice to have something. We can consider adding the server(s) to which the code is deployed – that would provide a quick way to list our scripts, what they do, and where they run.

Contributors can clone, push, and pull a repository. Administrators are additionally able to edit the repository details (i.e. change the stuff we’re putting in here now) and delete the repository.

Select the team(s) which will need to access the repository. Disclaimer – most of my experience with Git is at home using a GitLab server. There are only two of us, so permissioning isn’t really a concern. Not sure exactly how secure this is (i.e. if I don’t select Directory Design, can they still view the source but cannot write to it? Do they not even see the repository? I’m not interested enough to get another ID and add it into the security group, but if someone wants to test now … that would be cool.). Click ‘Create’ and the repository will be created.

Look near the top of the page – there will be a hyperlink to go to the new repository. Click that.

We will need the “General Url” as we begin working with the repository (i.e. copy the link address now).

Working With Your Repository

Now that you have a project and URL, clone the project to your local repository – if this is a new project, ignore the warning. If this is a project you expect to have some existing content … well, don’t ignore the error:

D:\tempCSG\ljr\Git>git clone https://csggit.windstream.com/CSG/LJR.git

Cloning into 'LJR'...

warning: You appear to have cloned an empty repository.

If you are using the Git credential manager, you will be asked to authenticate to the server the first time you clone a repository. You do not need to specify the domain. When you change your password, you can use the Windows Credential Manager to edit your stored credential.

Once the connection has been authenticated, the client will clone the repository and voilà, we have stuff

D:\tempCSG\ljr\Git\LJR>dir

Volume in drive D is Data

Volume Serial Number is FA7B-B3E4

Directory of D:\tempCSG\ljr\Git\LJR

06/26/2017 02:33 PM <DIR> .

06/26/2017 02:33 PM <DIR> ..

0 File(s) 0 bytes

2 Dir(s) 9,644,441,600 bytes free

OK, that wasn’t a whole lot of stuff – it just created a folder for my application! Make some files in there – that may mean using the folder as your IDE project location. It may mean using notepad and making a new file. Whatever your approach, make a new file and add some code.

D:\tempCSG\ljr\Git\LJR>notepad helloworld.pl

Then add the new file(s) to the local git repository – important bit here, we are currently making changes to our copy. If you check the server, it is still an empty project.

D:\tempCSG\ljr\Git\LJR>git add *

The * is a wildcard – if you are working on a larger project, you can add just the files you are updating (i.e. I could use git add helloworld.pl here). I used the wildcard here because a lot of people like the convenience. Personally, I always recommend programmers add by name to ensure they are adding the proper ‘stuff’ to the project. There are other short-cut add options: in Git 2.x, git add . will stage new, modified, and deleted files under the current directory (Git 1.x skipped the deletions), git add -u will stage modified and deleted files that are already tracked, and git add -A will stage all changes in the whole working tree.
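If you want to see the difference between the add short-cuts for yourself, something along these lines works as a sketch. It assumes Git 2.x and a POSIX shell such as Git Bash; the repository and file names are invented for the demo. It modifies one tracked file, deletes another, creates a new one, and then checks what each option stages.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q adddemo
cd adddemo
git config user.name "Demo User"           # demo-only identity
git config user.email "demo@example.com"

echo one > keep.txt
echo two > remove.txt
git add keep.txt remove.txt
git commit -q -m "Initial files"

echo changed >> keep.txt                   # modify a tracked file
rm remove.txt                              # delete a tracked file
echo three > new.txt                       # create a file Git does not know about yet

git add -u                                 # tracked files only: the modification and the deletion
after_u=$(git status --porcelain)
echo "$after_u"                            # new.txt still shows as untracked (??)

git add -A                                 # everything, including the untracked file
after_all=$(git status --porcelain)
echo "$after_all"
```

In the status output, a letter in the first column means the change is staged; "??" marks a file that has not been added at all.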

Then commit – since this is the first file, I am not using a great commit note. Generally I’ve recommended making the first commit note a link to the requirements document … basically if I wanted to find out why we’ve got this program and what it is meant to do, where do I go? In our case, this might be an INC # or a SharePoint URL. Or it might just be a freeform text like “Provision DMZAD group memberships from acildsdb:OSR2.CWSODMZTable”.

D:\tempCSG\ljr\Git\LJR>git commit -m "Created project"

[master (root-commit) 7a66c68] Created project

1 file changed, 2 insertions(+)

create mode 100644 helloworld.pl

Check the web view to see what’s in the project: nothing. Push the changes:

D:\tempCSG\ljr\Git\LJR>git push

Counting objects: 3, done.

Writing objects: 100% (3/3), 256 bytes | 0 bytes/s, done.

Total 3 (delta 0), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/LJR.git

* [new branch] master -> master

I mentioned earlier that making updates in the master branch is not a best practice … the first time around is an exception … there’s no production implementation that you’re going to bugger up. Now that we’ve got a project that’s running in production (pretend), we’ll make a branch when we want to make changes. Check out the branch – this changes your git ‘context’ to the new branch.

D:\tempCSG\ljr\Git\LJR>git branch newEdits

D:\tempCSG\ljr\Git\LJR>git checkout newEdits

Switched to branch 'newEdits'

Yes there is a shortcut to doing this – “git checkout -b newBranchName”. Push the new branch

D:\tempCSG\ljr\Git\LJR>git push origin newEdits

Total 0 (delta 0), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/LJR.git

* [new branch] newEdits -> newEdits

Make some more changes and add the changed file(s) to the local repo

D:\tempCSG\ljr\Git\LJR>notepad helloworld.pl

D:\tempCSG\ljr\Git\LJR>git add helloworld.pl

Commit the changes and push to the server:

D:\tempCSG\ljr\Git\LJR>git commit -m "Added international support"

[newEdits 28365d9] Added international support

1 file changed, 8 insertions(+), 1 deletion(-)

D:\tempCSG\ljr\Git\LJR>git push origin newEdits

Counting objects: 3, done.

Delta compression using up to 2 threads.

Compressing objects: 100% (2/2), done.

Writing objects: 100% (3/3), 335 bytes | 0 bytes/s, done.

Total 3 (delta 0), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/LJR.git

7a66c68..28365d9 newEdits -> newEdits

Now if you look @ the repository browser on the web site, https://csggit.windstream.com/CSG/Repository/LJR/newEdits/Blob/helloworld.pl, you will see the additions we’ve made. Add some more and repeat the process.

D:\tempCSG\ljr\Git\LJR>notepad helloworld.pl

D:\tempCSG\ljr\Git\LJR>git add helloworld.pl

D:\tempCSG\ljr\Git\LJR>git commit -m "Added Swedish and Hungarian greetings"

[newEdits b2cbedd] Added Swedish and Hungarian greetings

1 file changed, 2 insertions(+)

D:\tempCSG\ljr\Git\LJR>git push origin newEdits

Counting objects: 3, done.

Delta compression using up to 2 threads.

Compressing objects: 100% (2/2), done.

Writing objects: 100% (3/3), 340 bytes | 0 bytes/s, done.

Total 3 (delta 1), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/LJR.git

28365d9..b2cbedd newEdits -> newEdits

Now look at repository explorer and see how the history is tracked – look @ each commit (notice the commit messages and who made the changes). Click into previous version and see how the differences are tracked.

Fast Forward Merging:

This is possible for simple projects like we’re using – there is a master, a branch for changes, then that branch gets collapsed back into the master when the changes have been finished.

D:\tempCSG\ljr\Git\LJR>git checkout master

Switched to branch 'master'

Your branch is up-to-date with 'origin/master'.

D:\tempCSG\ljr\Git\LJR>git merge newEdits

Updating 7a66c68..08931d5

Fast-forward

helloworld.pl | 12 +++++++++++-

1 file changed, 11 insertions(+), 1 deletion(-)

D:\tempCSG\ljr\Git\LJR>git push origin master

Total 0 (delta 0), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/LJR.git

7a66c68..08931d5 master -> master

Check web site – you’ll see your changes in master. But newEdits branch is still there.

D:\tempCSG\ljr\Git\LJR>git branch

* master

newEdits

My recommendation is to collapse the branch (delete it) when you have completed your changes. Otherwise you need to manage branches and merges. If there’s a need for multiple branches of sustained development … that’s beyond the scope of a quick brain share. You can find information on more complex merging operations, including conflict resolution (https://git-scm.com/book/en/v2/Git-Branching-Basic-Branching-and-Merging#_basic_merging) and rebasing (https://git-scm.com/book/en/v2/Git-Branching-Rebasing). Google can also tell you the ongoing debate about etiquette around creating new branches, merging, and rebasing.

To delete a branch once development has been completed and the changes have been merged into master:

D:\tempCSG\ljr\Git\LJR>git push origin --delete newEdits

To https://csggit.windstream.com/CSG/LJR.git

- [deleted] newEdits

D:\tempCSG\ljr\Git\LJR>git branch -d newEdits

Deleted branch newEdits (was 08931d5).

Notice the commit history / notes were copied from the newEdits branch into the master, so we haven’t lost anything by merging our branch into the master.
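The whole branch, merge, and clean-up cycle can be rehearsed without touching the real server. The sketch below is only that: it uses a local bare repository (server.git, a made-up name) as a stand-in for the Git server, and assumes a POSIX shell such as Git Bash. The --ff-only flag is my addition; it makes Git refuse the merge if a fast-forward is not possible rather than quietly creating a merge commit.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q --bare server.git              # local stand-in for the Git server
git init -q LJR
cd LJR
git symbolic-ref HEAD refs/heads/master    # call the first branch master whatever the local default is
git config user.name "Demo User"           # demo-only identity
git config user.email "demo@example.com"
git remote add origin "$work/server.git"

echo 'print "Hello";' > helloworld.pl
git add helloworld.pl
git commit -q -m "Created project"
git push -q origin master

git checkout -q -b newEdits                # create the working branch and switch to it
echo 'print "Hej";' >> helloworld.pl
git add helloworld.pl
git commit -q -m "Added Swedish greeting"
git push -q origin newEdits

git checkout -q master
git merge --ff-only newEdits               # master simply moves forward to the tip of newEdits
git push -q origin master

git push -q origin --delete newEdits       # remove the branch on the server
git branch -d newEdits                     # remove the local branch

branches=$(git for-each-ref --format='%(refname:short)' refs/heads)
subjects=$(git log --format=%s master)
echo "$branches"
echo "$subjects"
```

After the clean-up only master remains, and its log still carries both commit messages.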

Your local repository is not automatically updated with changes other people commit to the project. A pull retrieves changes pushed by others to the Git server. Alternatively, use fetch and merge operations to download the changes and then play those changes into your local repository.
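Here is a rough illustration of the two routes, with two made-up developers (alice and bob) sharing a local bare repository that stands in for the server. Everything named here is hypothetical, and a POSIX shell such as Git Bash is assumed. Bob first catches up with fetch plus merge, then with a single pull.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q --bare server.git              # local stand-in for the Git server
git --git-dir=server.git symbolic-ref HEAD refs/heads/master

# Alice creates the project and pushes it
git init -q alice
cd alice
git symbolic-ref HEAD refs/heads/master
git config user.name "Alice"
git config user.email "alice@example.com"
git remote add origin "$work/server.git"
echo 'print "Hello";' > helloworld.pl
git add helloworld.pl
git commit -q -m "Created project"
git push -q origin master

# Bob clones the existing project
cd "$work"
git clone -q server.git bob

# Alice pushes another change; Bob's clone knows nothing about it yet
cd "$work/alice"
echo 'print "Hej";' >> helloworld.pl
git commit -q -a -m "Added Swedish greeting"
git push -q origin master

cd "$work/bob"
before=$(git log -1 --format=%s)
git fetch -q origin                        # download only; Bob's files are untouched
git merge -q origin/master                 # play the downloaded commits into the local branch
after_fetch_merge=$(git log -1 --format=%s)

# Alice pushes once more; this time Bob uses pull, which does both steps
cd "$work/alice"
echo 'print "Szia";' >> helloworld.pl
git commit -q -a -m "Added Hungarian greeting"
git push -q origin master

cd "$work/bob"
git pull -q origin master
after_pull=$(git log -1 --format=%s)
echo "$before / $after_fetch_merge / $after_pull"
```

Fetch on its own is handy when you want to look at what changed before letting it touch your working files.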

Since we are not full-time developers, we might opt not to persistently store projects locally (i.e. we have a specific program that needs to be updated, clone the repository locally, perform the edits, push and merge these edits, then destroy the local copy). Provided two people are not simultaneously working on the same project, the newly cloned project is up-to-date each time you start working on a program.

Stashing Changes

If you are working on a particular branch but not yet ready to commit your changes – and you have a need to work on something else from the previous commit – use “git stash save” (newer Git releases prefer “git stash push”) to table the changes you’ve currently made. Make whatever changes you need to make, add those changes, commit them, and then use “git stash pop” to return the tabled changes.
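A stash round trip might look something like this sketch. The repository, files, and identity are invented, and a POSIX shell such as Git Bash is assumed. It uses plain "git stash", which tables the current changes in both older and newer Git releases.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q stashdemo
cd stashdemo
git config user.name "Demo User"           # demo-only identity
git config user.email "demo@example.com"

echo 'print "Hello";' > helloworld.pl
echo 'notes' > README.md
git add helloworld.pl README.md
git commit -q -m "Created project"

echo 'print "half-finished idea";' >> helloworld.pl   # work in progress, not ready to commit
git stash                                  # table it; the working tree matches the last commit again
during=$(git status --porcelain)

echo 'urgent fix' >> README.md             # the unrelated change that could not wait
git add README.md
git commit -q -m "Updated README"

git stash pop                              # bring the tabled change back
after=$(git status --porcelain)
echo "$after"
```

After the pop, helloworld.pl shows up as modified but unstaged again, exactly where the work was left.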

Getting Rid Of Stuff

The first question is should you remove something? We often keep old code around for future reference (you want to do something similar, instead of re-writing the whole thing … copy this old program and tweak it for the current need). But leaving every old bit of code in the repository is a bit like never deleting an e-mail message or document on disk … eventually you’ll have a big mess of useless stuff that you’re looking through and backing up.

You could change the repository group to “Archive” (or “zzArchive” so it sorts to the bottom of the web view) – this would retain the code but sort it out into a different logical container to identify it as no longer used code.

Some companies will set up a second Git server dedicated to archive – lower I/O requirements on hardware, not frequently backed up, etc. Old code is pushed up to the archive server and then deleted from the active code server. As we don’t currently have an archive Git server, this isn’t an option. But it is a possibility if inactive code that we want to keep becomes burdensome. Other companies archive the code outside of Git and delete the project from the repository.

To remove a file from the repository, use “git rm filename.xtn”, then commit and push the removal (the file remains available in earlier commits). To remove a repository, you can click the little rubbish can next to the project on the web site.

There is no such thing as removing a group – as soon as no repositories exist in the group, it will disappear from the web view.

A Note On Binary Files

The typical solution to storing large binary files in Git is to implement LFS – this feature is not yet supported in Bonobo. As such, avoid storing binary files in Git (media, compiled binaries, compressed data).

Binary files tend to be large. Because of the distributed nature of Git, large files are transmitted and stored a lot of places. Frequently changing binary files bloat the server database too. This isn’t to say you cannot store binary files – just that it is a judgement call. Smaller and more static files, great. Three gig files that get updated daily … find another solution.

Many types of binary files do not compress well – especially already compressed files. You can disable delta compression in .gitattributes (*.mp4 binary -delta) to avoid the I/O of attempting to compress already-compressed data.

When merging binary files, diff just tells you they are different. Not particularly illuminating information if you are manually resolving merge conflicts. For non-text files, there may be a filter that allows changes to be represented in a readable format (e.g. Microsoft Word documents) by setting an appropriate filter in .gitattributes (*.docx diff=word) and pointing the matching diff.word.textconv setting in your Git configuration at a program that converts the document to plain text. The diff would not include format changes (i.e. if I bolded a specific sentence, that would not be apparent in the diff), but it will display text content that has been updated.
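The two .gitattributes rules mentioned above could be set up and checked roughly like this. Treat it as a sketch: it assumes a POSIX shell such as Git Bash, the file names are made up, and pandoc as the Word-to-text converter is purely my assumption (it has to be installed separately, and any program that writes plain text to standard output would serve).

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q attrdemo
cd attrdemo

# Rules from the text: treat mp4 files as binary and skip delta compression,
# and hand docx files to a diff driver named "word"
cat > .gitattributes <<'EOF'
*.mp4  binary -delta
*.docx diff=word
EOF

# The "word" driver needs a converter. pandoc is an assumption, not part of Git.
git config diff.word.textconv "pandoc --from=docx --to=plain"

# check-attr reports which attributes apply to a path (the files need not exist)
mp4_delta=$(git check-attr delta -- training.mp4)
docx_diff=$(git check-attr diff -- manual.docx)
echo "$mp4_delta"
echo "$docx_diff"
```

git check-attr is a convenient way to confirm a pattern matches the files you meant before committing the .gitattributes file.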

Remote Repositories

The whole point of Git is distributing copies of the repository elsewhere. It is possible to use Git locally – this would allow a single developer to track and revert changes – but typical implementations have multiple developers pulling from and pushing to a remote repository.

You can have more than one remote repository.

D:\tempCSG\ljr\Git\PKIWeb>git remote -v

origin https://csggit.windstream.com/CSG/PKIWeb.git (fetch)

origin https://csggit.windstream.com/CSG/PKIWeb.git (push)

You may notice that we have the word origin in some of the commands – this is the default name Git gives to the remote repository we cloned from. You can add additional remote repositories (the example I am using is silly since they are the same location). This could be done to transfer a project to a different repository (moving an out-of-support product to an archive Git server or an acquired company moving repositories into the new company’s repository) or to pull a project from an alternate location (other users who maintain their own project for the same application).

D:\tempCSG\ljr\Git\PKIWeb>git remote add ljr https://csggit.windstream.com/CSG/PKIWeb.git

D:\tempCSG\ljr\Git\PKIWeb>git remote -v

ljr https://csggit.windstream.com/CSG/PKIWeb.git (fetch)

ljr https://csggit.windstream.com/CSG/PKIWeb.git (push)

origin https://csggit.windstream.com/CSG/PKIWeb.git (fetch)

origin https://csggit.windstream.com/CSG/PKIWeb.git (push)

Normally there’s no need for us to do this (i.e. don’t maintain your own copy of a project, create a branch in the existing one!), except if our project is derivative work of an opensource project that we need to publish externally. You could have both the internal Git server and GitHub registered as repositories. Make your changes and do “push origin” as well as “push whateverYouCallGitHub”.

You can also “fetch origin” and “fetch whateverYouCallGitHub”, but to avoid confusion, I would use the internal Git server as the authoritative repository (anyone else in the group may be editing the code) and only push to GitHub.
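As a sketch of the push-to-two-places idea, the following uses two local bare repositories as stand-ins: internal.git for the internal Git server and public.git for a GitHub repository. Both names, the "github" remote label, and the file are made up, and a POSIX shell such as Git Bash is assumed.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q --bare internal.git            # stand-in for the internal Git server
git init -q --bare public.git              # stand-in for a GitHub repository
git init -q PKIWeb
cd PKIWeb
git symbolic-ref HEAD refs/heads/master
git config user.name "Demo User"           # demo-only identity
git config user.email "demo@example.com"

git remote add origin "$work/internal.git" # authoritative copy
git remote add github "$work/public.git"   # "github" is just the label chosen here

echo 'placeholder page' > index.html
git add index.html
git commit -q -m "Created project"

git push -q origin master                  # internal server first
git push -q github master                  # then publish the same commit externally

local_head=$(git rev-parse HEAD)
internal_head=$(git ls-remote origin refs/heads/master | cut -f1)
public_head=$(git ls-remote github refs/heads/master | cut -f1)
git remote -v
```

Both remotes end up pointing at the same commit ID, which is an easy way to confirm the external copy is not lagging behind.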

When you no longer need a remote repository, you can remove it.

D:\tempCSG\ljr\Git\PKIWeb>git remote rm ljr

D:\tempCSG\ljr\Git\PKIWeb>git remote -v

origin https://csggit.windstream.com/CSG/PKIWeb.git (fetch)

origin https://csggit.windstream.com/CSG/PKIWeb.git (push)

README

If you participate in GitHub projects, you will notice a README.md file at the root of projects. This is a standard place to include documentation (hence the name), but it is also rendered out in the Git server web site. For an example, see the AD Password Filter project (https://csggit.windstream.com/CSG/Repository/ADPasswordFilter/master/Tree). If there is not a convenient external reference for the initial commit notes, you may want to consider including program documentation in the README.md file.

What if the changes don’t work?

One nice Git feature is undoing changes. The first thing you need to know is that commits have ID numbers (often called a SHA in documentation). You can find that using “git log” or by looking at the web site.

If the changes are local and haven’t been pushed to the server, just reset your local copy: git reset --hard ID#

If the changes haven’t been merged into the master branch yet (i.e. you clone your dev branch to the script server, test it … then realize that won’t work), use the git revert functions. First find the commit ID. Then use “git revert ID#” and git will create a commit that is the inverse of the commit specified (it undoes whatever the commit does). Don’t forget to push this revert back to the server.

If the problem is just the commit message, you can modify the message (i.e. remove a typo): git commit --amend -m "This is my new commit message"

You can temporarily revert to a specific commit version (say, to see if the problem you are having was introduced in this version) using “git checkout ID#”. If you intend to make changes from the old state, use “git checkout -b previousState ID#” to create a new branch from that point.
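The undo options can be tried safely in a scratch repository. This is a sketch under assumptions: a POSIX shell such as Git Bash, and invented file contents and commit messages. It fixes a commit message with amend, backs a change out with revert, and finally resets to a known-good commit ID.

```shell
set -e
work=$(mktemp -d)                          # scratch directory, hypothetical
cd "$work"
git init -q undodemo
cd undodemo
git config user.name "Demo User"           # demo-only identity
git config user.email "demo@example.com"

echo 'print "Hello";' > helloworld.pl
git add helloworld.pl
git commit -q -m "Created project"
good=$(git rev-parse HEAD)                 # commit ID of the known-good state

echo 'print "Oops";' >> helloworld.pl
git commit -q -a -m "Added borken greeting"
git commit -q --amend -m "Added broken greeting"   # only the message of the latest commit changes
bad=$(git rev-parse HEAD)

git revert --no-edit "$bad"                # new commit that undoes the bad one; history is kept
after_revert=$(cat helloworld.pl)
revert_subject=$(git log -1 --format=%s)

# Reset moves the branch back and discards everything after the given ID.
# That is only reasonable here because nothing was pushed anywhere.
git reset -q --hard "$good"
after_reset=$(git log --format=%s)
echo "$revert_subject"
echo "$after_reset"
```

Revert is the gentler choice once commits have been shared, since it adds to the history instead of rewriting it.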

Ingesting Existing Code

Create the repository. In the directory with your existing code, initialize the directory as a git repository. Add all files to the local repository and commit the initial file load.

D:\Scripts\ljl\wincare-oud>git init

Initialized empty Git repository in D:/Scripts/ljl/wincare-oud/.git/

D:\Scripts\ljl\wincare-oud>git add *

D:\Scripts\ljl\wincare-oud>git commit -m "Uploading existing code to project"

[master (root-commit) 231bafa] Uploading existing code to project

2 files changed, 76 insertions(+)

create mode 100644 _simulateWincare.pl

create mode 100644 res.txt

Add a remote location repository (you can use “git remote -v” to confirm the repository has been added) and push the local repository to the remote

D:\Scripts\ljl\wincare-oud>git remote add origin https://csggit.windstream.com/CSG/WinCareOUDTesting.git

D:\Scripts\ljl\wincare-oud>git push origin master

Counting objects: 4, done.

Delta compression using up to 2 threads.

Compressing objects: 100% (3/3), done.

Writing objects: 100% (4/4), 1.06 KiB | 0 bytes/s, done.

Total 4 (delta 0), reused 0 (delta 0)

To https://csggit.windstream.com/CSG/WinCareOUDTesting.git

* [new branch] master -> master

But wait …

We’ve got a whole bunch of code written and stashed somewhere … but how does that deploy it? It doesn’t. For compiled code, there would be a build process that follows the commits. Someone like a build manager (or an automated process) takes the updated source code, compiles it, hands it off for testing (may be manual testing by QA people or may be an automated test program), then supplies the compiled binaries for release or deployment.

With our interpreted code, using Git is a process change. Instead of going to the task server, copying the script file to something-ljr.xtn, editing my copy, testing, then moving my copy back to something.xtn – we would branch the master for development, clone the development branch to our workstation or elsewhere on the terminal server, make changes, test, commit and push those changes, then merge the development branch back into master.
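The branch, change, push, and merge cycle described above might look roughly like this. It is a sketch under stated assumptions: a bare repository in a temporary directory stands in for the Git server, the merge is done from the command line rather than through the web site, and the branch name development, the file content, and the demo identity are invented for the example.

```shell
#!/bin/sh
# Sketch only: develop on a branch, then merge it back into master.
set -e
work=$(mktemp -d)                              # throwaway directory (hypothetical)
git init -q --bare "$work/server.git"          # stand-in for the Git server
git clone -q "$work/server.git" "$work/dev"    # the developer's working copy
cd "$work/dev"
git symbolic-ref HEAD refs/heads/master        # make sure the first branch is called master
git config user.email "demo@example.com"       # placeholder identity for the demo
git config user.name "Demo User"

# The existing script, as it is on master today.
echo "print 1" > something.xtn
git add something.xtn
git commit -q -m "Existing script"
git push -q origin master

# Branch for development, make the change, test it, then commit and push.
git checkout -q -b development
echo "print 2" > something.xtn
git commit -q -am "Change requested in the ticket"
git push -q origin development

# Merge the development branch back into master and publish the result.
git checkout -q master
git merge -q development                       # a fast-forward here, since master has not moved
git push -q origin master
git log --oneline master                       # both commits are now on master
```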

Once the branch has been merged into master, use git on the task server to integrate changes. (The shortcut below can also be done in two steps: “git fetch origin master” followed by “git merge FETCH_HEAD”.) I am assuming that fast-forward merges can be done.

D:\Scripts\ljl\wincare-oud>git pull origin master

From https://csggit.windstream.com/CSG/WinCareOUDTesting

* branch master -> FETCH_HEAD

Updating 231bafa..202da14

Fast-forward

_simulateWincare.pl | 10 +++++++++-

1 file changed, 9 insertions(+), 1 deletion(-)

On next script execution, the updated code will be used.
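For the two-step alternative to “git pull”, here is a minimal sketch. A bare repository in a temporary directory plays the server and two clones play the developer workstation and the task server; all paths, the file script.txt, and the demo identity are made up. The --ff-only option is an addition of mine: it makes the merge refuse to do anything except a fast-forward, which matches the assumption stated above.

```shell
#!/bin/sh
# Sketch only: update a deployed copy with "git fetch" plus a fast-forward merge.
set -e
work=$(mktemp -d)                                      # throwaway directory (hypothetical)
git init -q --bare "$work/server.git"                  # stand-in for the Git server
git --git-dir="$work/server.git" symbolic-ref HEAD refs/heads/master

# Developer copy: publish version 1.
git clone -q "$work/server.git" "$work/dev"
cd "$work/dev"
git symbolic-ref HEAD refs/heads/master
git config user.email "demo@example.com"               # placeholder identity for the demo
git config user.name "Demo User"
echo "version 1" > script.txt
git add script.txt
git commit -q -m "Version 1"
git push -q origin master

# Task server copy: cloned while version 1 is current.
git clone -q "$work/server.git" "$work/task"

# Developer publishes version 2.
echo "version 2" > script.txt
git commit -q -am "Version 2"
git push -q origin master

# Task server: fetch, then merge only if it is a fast-forward.
cd "$work/task"
git fetch -q origin master                             # download; working files are untouched
git merge -q --ff-only FETCH_HEAD                      # fails instead of creating a merge commit
cat script.txt                                         # the deployed file now has the new content
```

Splitting the pull this way lets you look at what arrived (for example with “git log master..FETCH_HEAD”) before the files on the task server change.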

Etiquette

There are guidelines for contributing to OpenSource projects (https://opensource.guide/how-to-contribute/) – if you will be working on public projects, read the guidelines and engage with the other developers. Individual projects may have their own guidelines – Git itself is an OpenSource project on GitHub, but pull requests against the obvious repository (named Git) are ignored.

Here, we all know each other … if you see a ticket that requests a new column in a report or a different format for an export, make a development branch, sort the issue, test it, and merge the development branch back into master.

There is one part of the OpenSource guidelines that produces more readable code when multiple individuals are contributing: coding standards. Software development teams have formal documents that define all manner of form within their coding. How to name variables. Are spaces or newlines used before braces? Are spaces used before parentheses? How are functions named? What does a program or function comment block look like? How are variable and function names cased? When looking at OpenSource projects – or our internal team code – there isn’t a single coding standard. In the absence of a company-supplied standard, most individuals have one of their own. From a class, from a previous job … something.

Some people prefix variable names with type indicators (in a statically typed language, you’ve got to search up to the variable declaration otherwise). Some people appreciate concise code and write if(x == y){ doWhatever; } all on one line, others would consider that hopelessly unreadable. Some people use switch statements, some hate them and would rather long-form the if/elseif/else version. If you are making a quick change (+2 needs to be +4 or some word was misspelt), you don’t need to review the code to see how it is written. For anything beyond a quick edit, it is polite to look at how the project maintainer (or original author in our case) has written the code and follow their form.