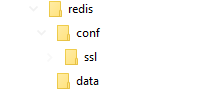

To set up my Redis sandbox in Docker, I created two folders, conf and data. The conf folder holds the SSL files and the configuration file; the data folder stores the Redis data.

First I needed to generate an SSL certificate. The certificate (which carries the public key) is stored in a .pem file and its private key in a .key file. The CA certificate (the public key of the CA that signed the cert) is stored in a "ca" folder.
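The post doesn't show how the certificate was produced. As one possible sketch (not necessarily how it was done here), PHP's openssl extension can create a throwaway CA and a server certificate signed by it. The output directory and the CA's common name are assumptions; the file names mirror the memcache.pem / memcache.key / ca layout that redis.conf points at, and the server CN matches the host the PHP client connects to. It also assumes PHP can find a working OpenSSL configuration, which is usually automatic on Linux.

```php
<?php
// Sketch only: a throwaway CA plus a server certificate signed by it, built
// with PHP's openssl extension. $outDir and the CA common name are assumptions;
// the file names follow the layout redis.conf points at.
$outDir = sys_get_temp_dir() . '/redis-ssl-sketch';
if (!is_dir("$outDir/ca")) {
    mkdir("$outDir/ca", 0700, true);
}

$keyOpts  = ['private_key_bits' => 2048, 'private_key_type' => OPENSSL_KEYTYPE_RSA];
$signOpts = ['digest_alg' => 'sha256'];

// 1. CA key and self-signed CA certificate (a null issuer means self-signed).
$caKey  = openssl_pkey_new($keyOpts);
$caCsr  = openssl_csr_new(['commonName' => 'Redis Sandbox CA'], $caKey, $signOpts);
$caCert = openssl_csr_sign($caCsr, null, $caKey, 365, $signOpts, 1);

// 2. Server key and certificate for the host the PHP client connects to,
//    signed by the CA (serial 2).
$srvKey  = openssl_pkey_new($keyOpts);
$srvCsr  = openssl_csr_new(['commonName' => 'memcached.example.com'], $srvKey, $signOpts);
$srvCert = openssl_csr_sign($srvCsr, $caCert, $caKey, 365, $signOpts, 2);

// 3. Write PEM files: certificate, unencrypted private key, and CA certificate.
openssl_x509_export_to_file($srvCert, "$outDir/memcache.pem");
openssl_pkey_export_to_file($srvKey, "$outDir/memcache.key");
openssl_x509_export_to_file($caCert, "$outDir/ca/ca.pem");

echo "Wrote memcache.pem, memcache.key and ca/ca.pem to $outDir\n";
```

One caveat: Redis hands `tls-ca-cert-dir` to OpenSSL as a CA directory, and OpenSSL looks certificates up there by hash-named files (as created by `openssl rehash`). Pointing `tls-ca-cert-file` at a single CA PEM can be simpler for a sandbox.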

Then I created a Redis configuration file. Note that the paths are relative to the Docker container, not the host:

```conf
################################## MODULES #####################################

################################## NETWORK #####################################

# My web server is on a different host, so I needed to listen on the public
# network interface; with no bind directive, Redis listens on all interfaces.
# I think we'd *want* to bind to localhost in our use case.
# bind 127.0.0.1

# Similarly, I think we'd want 'yes' here. With protected mode on, no bind
# and no password, Redis only accepts connections from loopback addresses.
protected-mode no

# Might want to use 0 to disable listening on the insecure port
port 6379
tcp-backlog 511
timeout 10
tcp-keepalive 300

################################# TLS/SSL #####################################

tls-port 6380
tls-cert-file /opt/redis/ssl/memcache.pem
tls-key-file /opt/redis/ssl/memcache.key
tls-ca-cert-dir /opt/redis/ssl/ca

# I am not auth'ing clients for simplicity
tls-auth-clients no
# 'optional' would accept, but not require, client certificates:
# tls-auth-clients optional

tls-protocols "TLSv1.2 TLSv1.3"
tls-prefer-server-ciphers yes
tls-session-caching no

# These would only be set if we were setting up replication / clustering
# tls-replication yes
# tls-cluster yes

################################# GENERAL #####################################

# Docker expects Redis to stay in the foreground; outside Docker we might use
# something like 'supervised systemd' here instead.
daemonize no
supervised no
#loglevel debug
loglevel notice
# logfile "" would log to stdout, where 'docker logs' can see it
logfile "/var/log/redis.log"
syslog-enabled yes
syslog-ident redis
syslog-facility local0

# 1 might be sufficient; we *could* partition different apps into different
# databases. But if our keys are basically "user:target:service", then
# report_user:RADD:Oracle from any web tool would be the same cred, in which
# case one database suffices.
databases 3

################################ SNAPSHOTTING ################################

# Snapshot if >= 1 write in 900 s, >= 10 writes in 300 s, or >= 10000 in 60 s
save 900 1
save 300 10
save 60 10000
stop-writes-on-bgsave-error yes
rdbcompression yes
rdbchecksum yes
dbfilename dump.rdb
# Relative to the working directory, which is /data in the official redis image
dir ./

################################## SECURITY ###################################

# I didn't set up any authentication; I'm relying on the facts that
# (1) you are on localhost and
# (2) you have the key to decrypt the values we stash
# to mean you are authorized. (As configured above, the sandbox also listens
# on non-local interfaces, so (1) only holds once bind/protected-mode change.)

############################## MEMORY MANAGEMENT ################################

# What to evict when memory is maxed out. volatile-lfu evicts only keys that
# have a TTL, and the policy only applies once maxmemory is set (it isn't here).
maxmemory-policy volatile-lfu

############################# LAZY FREEING ####################################

lazyfree-lazy-eviction no
lazyfree-lazy-expire no
lazyfree-lazy-server-del no
replica-lazy-flush no
lazyfree-lazy-user-del no

############################ KERNEL OOM CONTROL ##############################

oom-score-adj no

############################## APPEND ONLY MODE ###############################

appendonly no
appendfsync everysec
no-appendfsync-on-rewrite no
auto-aof-rewrite-percentage 100
auto-aof-rewrite-min-size 64mb
aof-load-truncated yes
aof-use-rdb-preamble yes

############################### ADVANCED CONFIG ###############################

hash-max-ziplist-entries 512
hash-max-ziplist-value 64
list-max-ziplist-size -2
list-compress-depth 0
set-max-intset-entries 512
zset-max-ziplist-entries 128
zset-max-ziplist-value 64
hll-sparse-max-bytes 3000
stream-node-max-bytes 4096
stream-node-max-entries 100
activerehashing yes
client-output-buffer-limit normal 0 0 0
client-output-buffer-limit replica 256mb 64mb 60
client-output-buffer-limit pubsub 32mb 8mb 60
dynamic-hz yes
aof-rewrite-incremental-fsync yes
rdb-save-incremental-fsync yes

########################### ACTIVE DEFRAGMENTATION #######################

# Active defragmentation (disabled here)
activedefrag no
# Minimum amount of fragmentation waste to start active defrag
active-defrag-ignore-bytes 100mb
# Minimum percentage of fragmentation to start active defrag
active-defrag-threshold-lower 10
```
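While experimenting it is easy to leave a directive in redis.conf twice, for example two `tls-auth-clients` lines. Redis uses the last processed line as the value of a directive, so the earlier line is silently ignored. A small PHP sketch that flags such repeats could look like this; the `$repeatable` allow-list (directives such as `save` that are legitimately given several times) is an assumption and not exhaustive.

```php
<?php
/**
 * Sketch: report redis.conf directives that appear more than once.
 * Returns [directive => [line numbers]] for each repeated directive.
 * $repeatable is an assumed, non-exhaustive list of directives that are
 * legitimately given several times.
 */
function findDuplicateDirectives(string $conf): array
{
    $repeatable = ['save', 'client-output-buffer-limit', 'rename-command',
                   'loadmodule', 'include', 'user'];
    $seen = [];
    foreach (preg_split('/\R/', $conf) as $lineNo => $line) {
        $line = trim($line);
        if ($line === '' || $line[0] === '#') {
            continue; // skip blank lines and comments
        }
        // Directive names are case-insensitive; the first token is the name.
        $name = strtolower(preg_split('/\s+/', $line)[0]);
        if (!in_array($name, $repeatable, true)) {
            $seen[$name][] = $lineNo + 1; // 1-based line numbers
        }
    }
    return array_filter($seen, fn(array $lines) => count($lines) > 1);
}

$sample = <<<CONF
port 6379
tls-port 6380
# I am not auth'ing clients for simplicity
tls-auth-clients no
tls-auth-clients optional
save 900 1
save 300 10
CONF;

print_r(findDuplicateDirectives($sample));
```

Running it against the real file is a matter of passing `file_get_contents()` of redis.conf instead of the inline sample.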

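Once the configuration was in place, I created the container. Port 6380 carries the TLS connection; for the sandbox, I also exposed the cleartext port 6379. I mapped volumes for the Redis data, the SSL files, and the redis.conf file. Note that the trailing `--appendonly yes` overrides the `appendonly no` in redis.conf, since options given after the config file path take precedence.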

```shell
docker run --name redis-srv \
  -p 6380:6380 -p 6379:6379 \
  -v /d/docker/redis/conf/ssl:/opt/redis/ssl \
  -v /d/docker/redis/data:/data \
  -v /d/docker/redis/conf/redis.conf:/usr/local/etc/redis/redis.conf \
  -d redis redis-server /usr/local/etc/redis/redis.conf --appendonly yes
```

Voilà, I have a Redis server ready. Here's some quick PHP (using the phpredis extension and libsodium) to make sure it's functional:

```php
<?php
// Needs the phpredis extension (class Redis) and PHP's sodium extension.

$sodiumKey = random_bytes(SODIUM_CRYPTO_SECRETBOX_KEYBYTES); // 256 bit
// Debug: print sodium_bin2hex($sodiumKey);

// Encrypt with a fresh 24-byte nonce per value (a nonce must never be reused
// with the same key) and store nonce . ciphertext as base64.
function safeEncrypt(string $message, string $key): string
{
    $nonce = random_bytes(SODIUM_CRYPTO_SECRETBOX_NONCEBYTES);
    return base64_encode($nonce . sodium_crypto_secretbox($message, $nonce, $key));
}

// Reverse of safeEncrypt(). $stored is whatever Redis::get() returned
// (false for a missing or expired key); returns null if nothing decrypts.
function safeDecrypt($stored, string $key): ?string
{
    $raw = is_string($stored) ? base64_decode($stored, true) : false;
    if ($raw === false || strlen($raw) < SODIUM_CRYPTO_SECRETBOX_NONCEBYTES + SODIUM_CRYPTO_SECRETBOX_MACBYTES) {
        return null;
    }
    $nonce = substr($raw, 0, SODIUM_CRYPTO_SECRETBOX_NONCEBYTES);
    $plain = sodium_crypto_secretbox_open(substr($raw, SODIUM_CRYPTO_SECRETBOX_NONCEBYTES), $nonce, $key);
    return $plain === false ? null : $plain;
}

// Write every check value to credtest<i> with the given TTL in seconds.
function storeAll(Redis $redis, array $checks, string $suffix, int $ttl, string $key): void
{
    foreach ($checks as $i => $value) {
        $redis->setEx('credtest' . $i, $ttl, safeEncrypt($value . $suffix, $key));
    }
}

function printValues(Redis $redis, int $count, string $key): void
{
    echo "<ul>\n";
    for ($i = 0; $i < $count; $i++) {
        $value = safeDecrypt($redis->get('credtest' . $i), $key);
        echo "<li>The value on key credtest$i is: " . ($value ?? '(missing or expired)') . "\n";
    }
    echo "</ul>\n";
}

function printTtls(Redis $redis): void
{
    echo "<p>\n<ul>\n";
    // KEYS '*' is fine in a sandbox; on a large dataset it blocks the server.
    foreach ($redis->keys('*') as $k) {
        echo "<li>The key $k has a TTL of " . $redis->ttl($k) . "\n";
    }
    echo "</ul>\n";
}

$redis = new Redis();
// The tls:// prefix enables TLS (phpredis 5.3+). If the CA isn't in the system
// trust store, SSL stream-context options can go in connect()'s 7th argument.
$redis->connect('tls://memcached.example.com', 6380);

// Check whether the server is running
echo "<pre>Server is running: " . $redis->ping() . "\n</pre>";

$checks = ["credValueGoesHere", "cred2", "cred3", "cred4", "cred5"];

storeAll($redis, $checks, '', 1800, $sodiumKey);       // 30 minute timeout
printValues($redis, count($checks), $sodiumKey);
printTtls($redis);

storeAll($redis, $checks, '-updated', 60, $sodiumKey); // 1 minute timeout
printValues($redis, count($checks), $sodiumKey);
printTtls($redis);

storeAll($redis, $checks, '-updated', 1, $sodiumKey);  // 1 second timeout
printTtls($redis);

sleep(5); // Sleep so the data ages out of Redis
printValues($redis, count($checks), $sodiumKey);       // all should now be expired
?>
```
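A note on the nonce: libsodium's secretbox needs a unique nonce for every message encrypted under the same key, so sharing one nonce across all the cached creds would leak information. The sketch below (the two sample values are hypothetical) shows why. With the same key and nonce, XSalsa20 produces the same keystream, so XOR-ing two ciphertexts, after skipping the 16-byte authentication tag that `sodium_crypto_secretbox()` places first, cancels the keystream and leaves the XOR of the plaintexts.

```php
<?php
// Sketch: why each secretbox value needs its own nonce.
$key   = sodium_crypto_secretbox_keygen();
$nonce = random_bytes(SODIUM_CRYPTO_SECRETBOX_NONCEBYTES);

$p1 = 'cred2'; // hypothetical sample values of equal length
$p2 = 'cred3';

$c1 = sodium_crypto_secretbox($p1, $nonce, $key); // same nonce...
$c2 = sodium_crypto_secretbox($p2, $nonce, $key); // ...reused: unsafe

// Drop the leading Poly1305 tag; what remains is plaintext XOR keystream.
$body1 = substr($c1, SODIUM_CRYPTO_SECRETBOX_MACBYTES);
$body2 = substr($c2, SODIUM_CRYPTO_SECRETBOX_MACBYTES);
$leak  = $body1 ^ $body2; // equals $p1 ^ $p2, computed without the key

// Knowing (or guessing) one plaintext reveals the other.
$recovered = $leak ^ $p1;
echo "Recovered second value without the key: $recovered\n";

// With a fresh nonce the keystreams differ and the trick no longer works.
$c3     = sodium_crypto_secretbox($p2, random_bytes(SODIUM_CRYPTO_SECRETBOX_NONCEBYTES), $key);
$noLeak = $body1 ^ substr($c3, SODIUM_CRYPTO_SECRETBOX_MACBYTES);
```

Anyone who can read the cache and guess one value (a common password, say) could then recover the others without the key, which is why each stored value should carry its own random nonce alongside the ciphertext.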