Assumptions: This proposal is predicated on having some reasonable way to download the Flow usage report that currently needs to be generated from the Flow admin page. Otherwise usage tracking is a manual process kicked off by logging into Flow admin site and downloading the CSV usage detail report.

This proposal is also predicated on the idea that our group is not-for-profit — we are not seeking to recoup O365 licensing cost through Flow usage billback.

Issue: Flow execution is “throttled” when usage exceeds the tenant limits. Per a response from a “Flow Staff” individual to ‘what actually happens when you exceed your allocated cycle limit’, “To clarify what is meant by throttling, flow run usage exceeding your tenant limits may be suspended at Flow’s discretion until the following billing cycle.”. While “at Flow’s discretion” is still vague, a tenant risks having Flow runs suspended when they exceed their allocated cycle limit.

Additional cycles can be purchased to continue Flow execution. As of 26 April 2019, an additional 50,000 runs costs 40$. We should ask Microsoft to clarify “Flow’s discretion”. Are enterprise customers able to have Flow execution continued and be billed for their overage? Do enterprise customers receive a short grace period during which time they can purchase additional execution cycles for upcoming months?

Farther complicating the issue, it is difficult for a manager to assess how many flow cycles are being executed by their subordinates. While Microsoft has added per-workflow usage statistics, even when workflows are maintained under shared spaces, individually checking each Team flow to determine how many times it has executed is cumbersome.

Solution: If we cannot purchase execution cycles after our limit has been reached, we will need to routinely track the number of cycles executed in our tenant to avoid both execution disruption and purchasing additional runs in months where we do not exceed our “free” cycle quota. To determine an appropriate alerting interval, we need to determine the time between identifying that our tenant is approaching its threshold and the activation of newly purchased cycles.

To create a financial incentive to maintain efficient Flow jobs, cycles should be billed back to the business units that are using Flow. Since each Office 365 license includes 2,000 Flow execution cycles, a BU is not charged when they are under their “free” allocation (i.e. # of O365 licensed users in the BU times 2,000). BUs may be charged at a rate of 0.0008$ per cycle over their “free” allocation (question: does this need to be rounded up to avoid fractional pennies? i.e. one cent per 1,250 cycles). In months were the Flow execution cycle limit in the tenant is exceeded, the BUs are charged. Many individuals do not use Flow so there are unused execution cycles. If we are under our tenant limit but a BU has exceeded their allocation, no additional money has been spent so no billback occurs. The BU owner would still be notified with a message that includes “due to low company-wide Flow usage, your overage does not incur any charge this month. This overage could result in a charge of up to {calculatedOverageCost} charged to your BU.”. The message will include the web link to help them understand who is using Flow in their organization.

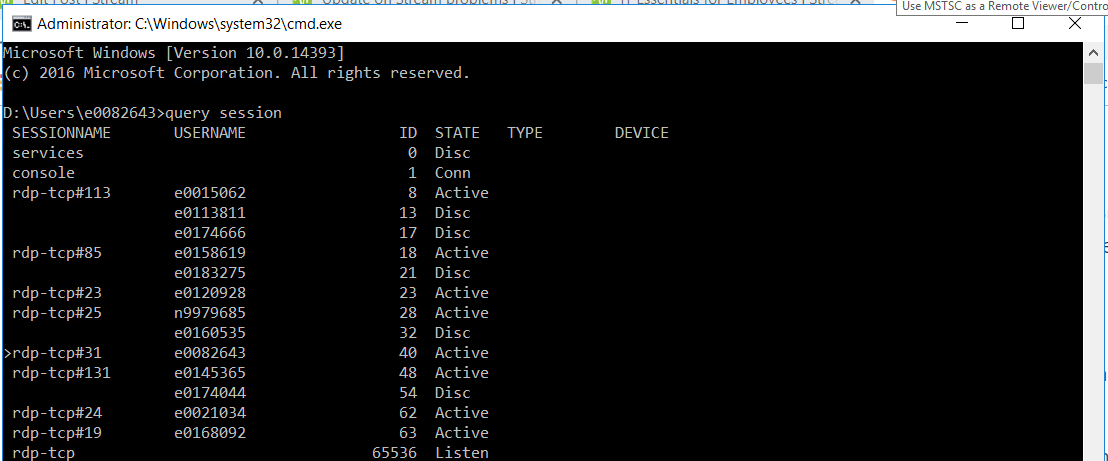

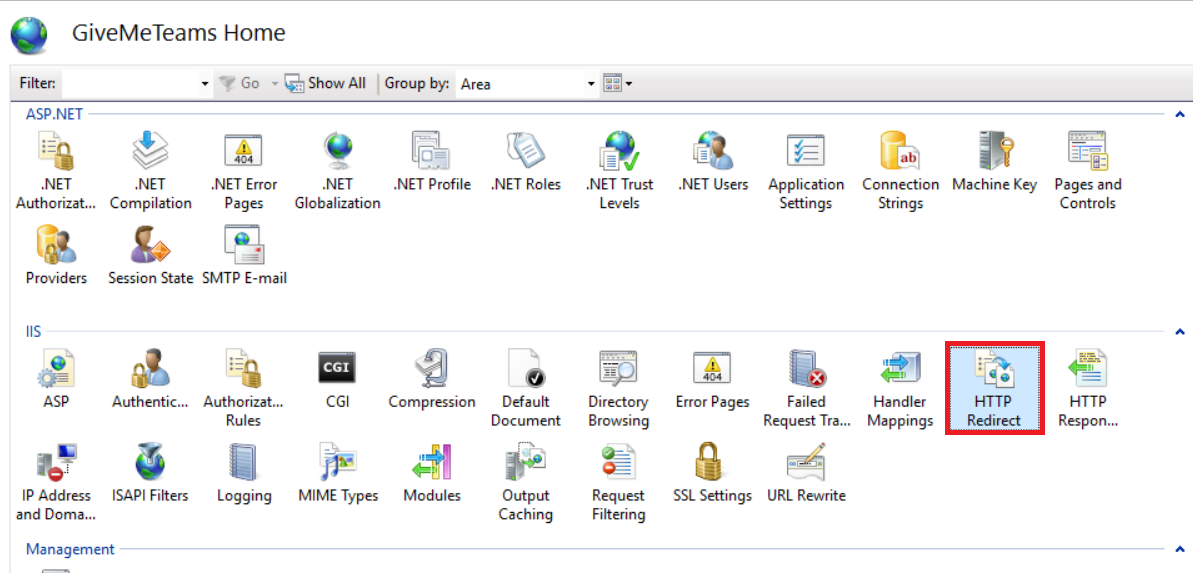

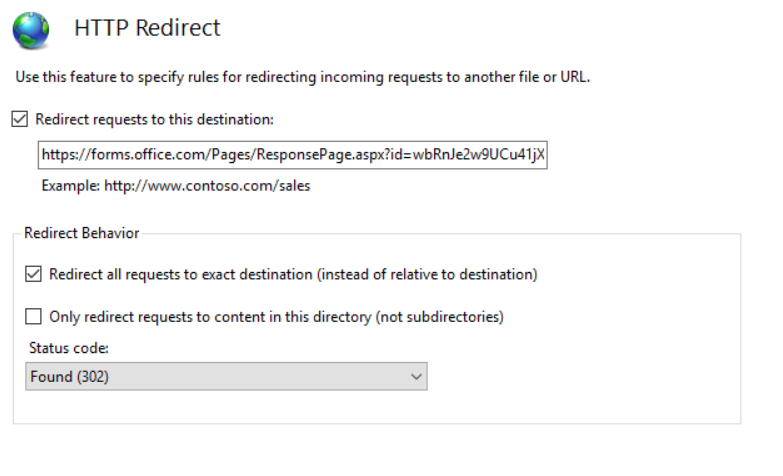

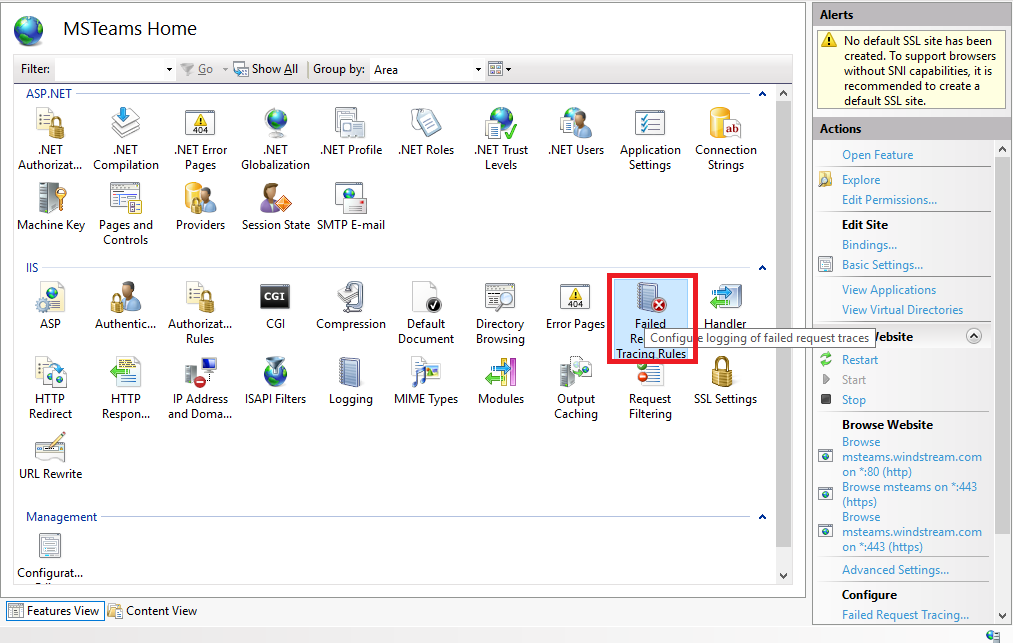

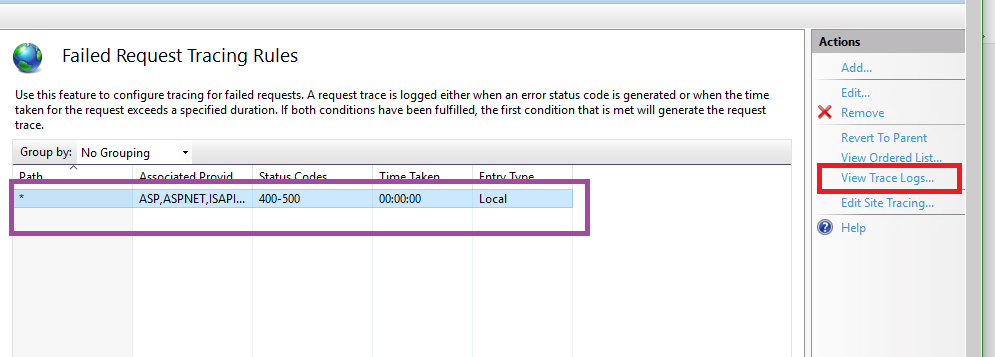

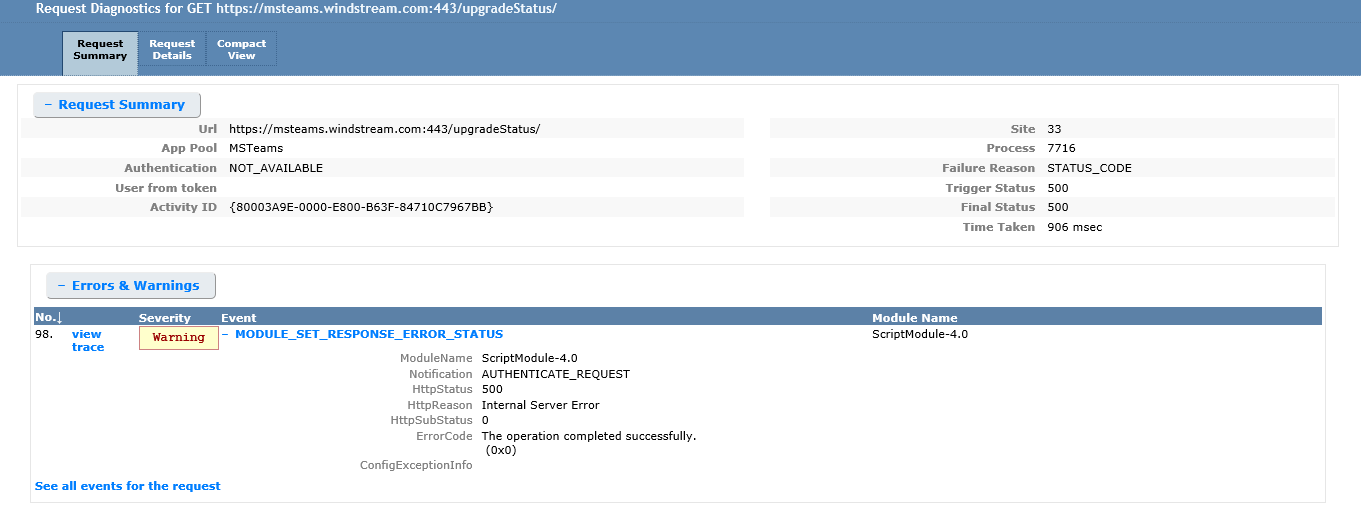

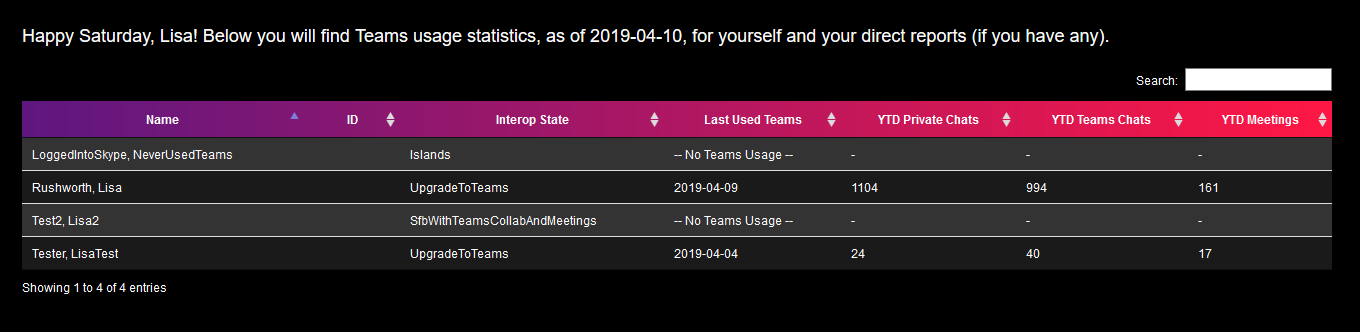

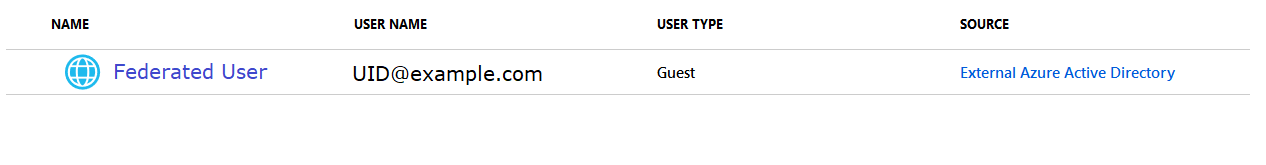

We would develop a script to update a data table. This table will record each Flow user, the number of cycles used month to date, the number of cycles used during the previous month, the number of cycles used year to date, and the number of cycles used during the previous year. A web interface like https://msteams.windstream.com/usageReporting/index.php (which allows managers to view Teams usage of their sub-organization) would be created to provide visibility into the Flow usage data. We would also develop a script, run at the beginning of each month, that determines the BU for each Flow user, aggregates “previous month” Flow usage data per BU, calculates the total number of O365 licensed accounts per BU, calculates the BU’s cycle overage, notifies BU owners per the previous paragraph, and either initiates the billback process or produces the data which is needed to initiate the billback process.

I additionally suggest we create a “best practices” guide for using Flow. As an example, creating a workflow ‘perform some process every time our shared mailbox receives a message from BOB’ incurs a cycle execution every time the shared mailbox receives a message, regardless of the message source. When creating workflows triggered by e-mail messages, using a dedicated mailbox (research: can an O365 group mailbox be used as a trigger??) avoids non-trigger messages executing a Flow.

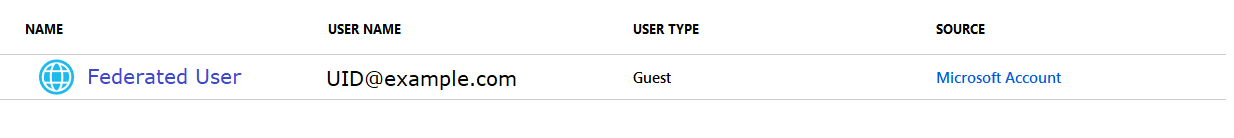

Re-add the new guest account to whatever they were using & their access will be restored.

Re-add the new guest account to whatever they were using & their access will be restored.