We’ve wanted to get our Exchange calendar events into OpenHAB. Instead of trying to create a rule to determine whether preschool is in session, the repeating calendar event will dictate if it is a break or school day. Move the gymnastics session to a new day, and the audio reminder moves itself. Problem is, Microsoft stopped supporting CalDAV.

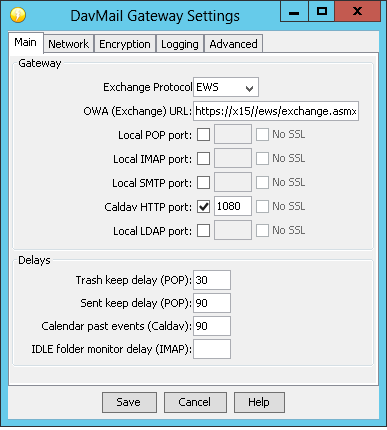

Scott found DAVMail, essentially a proxy that translates between CalDAV clients and Exchange Web Services (EWS). Installation was straightforward (click ‘next’ a few times). For configuration against Exchange 2013, you need to select the “EWS” Exchange protocol and use your server’s EWS endpoint URL, https://yourhost.yourdomain.gTLD/ews/exchange.asmx, then enable a local CalDAV port.

On the ‘network’ tab, check the box to allow remote connections. You *can* put the thumbprint of the IIS web site server certificate for your Exchange server into the “server certificate hash” field or you can leave it blank. On the first connection through DAVMail, there will be a pop-up asking you to verify and accept the certificate.

On the ‘encryption’ tab, you can configure a private keystore to allow the client to communicate over SSL. I used a PKCS12 store (Windows type), but a Java keystore should work too (you may need to add the public key of the signing CA to the CA truststore for your Java instance).

On the ‘advanced’ tab, I did not enable Kerberos because the OpenHAB CalDAV binding passes credentials. I did enable KeepAlive, though I’m not sure it is used since the CalDAV binding seems to poll. Save changes and open up the DAVMail log viewer to verify traffic is coming through.

Then comes Scott’s part: enable the bindings in OpenHAB (there are two of them, CalDAVIO and CalDAVCmd). In caldavio.cfg, the config lines need to be prefixed with ‘caldavio’ even though that’s not how it works in OpenHAB2.

caldavio:CalendarIdentifier:url=https://yourhost.yourdomain.gTLD:1080/users/mailbox@yourdomain.gTLD/calendar

caldavio:CalendarIdentifier:username=mailbox@yourdomain.gTLD

caldavio:CalendarIdentifier:password=PasswordForThatMailbox

caldavio:CalendarIdentifier:reloadInterval=5

caldavio:CalendarIdentifier:disableCertificateVerification=true
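If the binding does not seem to connect, it can help to hit the same calendar URL by hand and watch the DAVMail log. Here is a rough Python sketch of that check, not anything the binding itself does. It assumes DAVMail accepts HTTP basic auth on the CalDAV port; the function names `build_propfind` and `send` are made up for this example, and the URL and credentials are the same placeholders as in the config lines.

```python
import base64
import ssl
import urllib.request


def build_propfind(url, username, password):
    """Build (but do not send) a minimal CalDAV PROPFIND request."""
    # Basic auth is an assumption about how DAVMail is configured.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    body = (
        '<?xml version="1.0" encoding="utf-8"?>'
        '<d:propfind xmlns:d="DAV:"><d:prop><d:displayname/></d:prop></d:propfind>'
    )
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="PROPFIND",
        headers={
            "Depth": "0",  # only ask about the calendar collection itself
            "Content-Type": "application/xml; charset=utf-8",
            "Authorization": f"Basic {token}",
        },
    )


def send(request, verify_certificate=True):
    """Send the request and return the HTTP status code."""
    context = ssl.create_default_context()
    if not verify_certificate:
        # Rough equivalent of disableCertificateVerification=true; test use only.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, context=context) as response:
        return response.status
```

Calling `send(build_propfind(url, username, password), verify_certificate=False)` with the values from caldavio.cfg should produce a matching entry in the DAVMail log viewer; a 207 (Multi-Status) response would suggest the URL and credentials are good.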

Then in the caldavCommand.cfg file, you just need to tell it to load that calendar identifier:

caldavCommand:readCalendars=CalendarIdentifier

We have needed to stop OpenHAB and delete the config file from ./config/org/openhab/ related to this calendar and binding before config changes are ingested.

The last step is making a calendar item that can do stuff. In the big text box where a message body would normally go (no idea what that’s called on a calendar entry), enter:

BEGIN:Item_Name:STATE

END:Item_Name:STATE

The subject can be whatever you want. The start time and end time are the times for the begin and end events. Voila!
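To make the format concrete, here is a small Python sketch of how those body lines could be read. It is only an illustration of the BEGIN/END syntax shown above, not the binding’s actual parser; the `Command` type, the `parse_description` function and the item name `School_Day` are invented for the example.

```python
import re
from typing import List, NamedTuple


class Command(NamedTuple):
    phase: str  # "BEGIN" fires at the event start, "END" at the event end
    item: str   # OpenHAB item name
    state: str  # state or command to send to the item


# PHASE:Item_Name:STATE, one per line
_LINE = re.compile(r"^(BEGIN|END):([^:\s]+):(.+)$")


def parse_description(text: str) -> List[Command]:
    """Pick the BEGIN/END lines out of an event body, skipping anything else."""
    commands = []
    for raw in text.splitlines():
        match = _LINE.match(raw.strip())
        if match:
            phase, item, state = match.groups()
            commands.append(Command(phase, item, state.strip()))
    return commands


if __name__ == "__main__":
    body = "BEGIN:School_Day:ON\n\nEND:School_Day:OFF\nBring snacks"
    for command in parse_description(body):
        print(command.phase, command.item, command.state)
```

Lines that do not match the pattern, like the free-text note in the sample body, are simply ignored in this sketch.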