Obtain SSL Certificates for Each Server

The following process was used to enable SSL communication with the Kafka servers. First, generate a certificate for each server in the environment. I am using a third-party certificate provider, Venafi. When you download the certificates, make sure to select the “PEM (OpenSSL)” format and check the box to “Extract PEM content into separate files (.crt, .key)”.

Upload each zip file to the appropriate server as /tmp/$(hostname).zip. The following series of commands creates the files needed in the Kafka server configuration. You will be asked to set passwords for the keystore and truststore JKS files. Don’t forget the passwords you choose; we’ll need them later.

# Assumes Venafi certificates downloaded as OpenSSL zip files with separate public/private keys are present in /tmp/$(hostname).zip

mkdir -p /kafka/config/ssl/$(date +%Y)

cd /kafka/config/ssl/$(date +%Y)

mv /tmp/$(hostname).zip ./

unzip $(hostname).zip

# Create keystore for Kafka

openssl pkcs12 -export -in $(hostname).crt -inkey $(hostname).key -out $(hostname).p12 -name $(hostname) -CAfile ./ca.crt -caname root

keytool -importkeystore -destkeystore $(hostname).keystore.jks -srckeystore $(hostname).p12 -srcstoretype pkcs12 -alias $(hostname)

# Create truststore from CA certs

keytool -keystore kafka.server.truststore.jks -alias SectigoRoot -import -file "Sectigo RSA Organization Validation Secure Server CA.crt"

keytool -keystore kafka.server.truststore.jks -alias UserTrustRoot -import -file "USERTrust RSA Certification Authority.crt"

# Fix permissions

chown -R kafkauser:kafkagroup /kafka/config/ssl

# Create symlinks for current-year certs

cd ..

ln -s /kafka/config/ssl/$(date +%Y)/$(hostname).keystore.jks /kafka/config/ssl/kafka.keystore.jks

ln -s /kafka/config/ssl/$(date +%Y)/kafka.server.truststore.jks /kafka/config/ssl/kafka.truststore.jks

Because the configuration points at symlinks to the active certs, you can renew the certificates by creating a new /kafka/config/ssl/$(date +%Y) folder and updating the two symlinks. No change to the configuration files is needed.
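
At renewal time, the symlink update described above could look something like the sketch below. The function name activate_cert_year and its arguments are hypothetical, not part of the original procedure. It assumes the new year's folder has already been populated with the same file names used above.

```shell
# Hypothetical helper: repoint the two "active" symlinks at a given year's files.
# Arguments: SSL base directory, year folder name, server hostname.
activate_cert_year() {
    local ssl_dir="$1" year="$2" host="$3"
    local keystore="${ssl_dir}/${year}/${host}.keystore.jks"
    local truststore="${ssl_dir}/${year}/kafka.server.truststore.jks"

    # Refuse to repoint the links at files that do not exist yet.
    [ -f "$keystore" ] && [ -f "$truststore" ] || {
        echo "missing keystore or truststore under ${ssl_dir}/${year}" >&2
        return 1
    }

    # -f replaces an existing link; -n treats the existing link itself as the target
    # instead of following it.
    ln -sfn "$keystore" "${ssl_dir}/kafka.keystore.jks"
    ln -sfn "$truststore" "${ssl_dir}/kafka.truststore.jks"
}
```

It would be called as: activate_cert_year /kafka/config/ssl "$(date +%Y)" "$(hostname)". I would expect the broker to need a restart afterwards before it presents the new certificate.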

Update Kafka server.properties to Use SSL

Append a listener prefixed with SSL:// to the existing listeners and advertised.listeners values. For example:

#2024-03-27 LJR Adding SSL port on 9095

#listeners=PLAINTEXT://kafka1587.example.net:9092

#advertised.listeners=PLAINTEXT://kafka1587.example.net:9092

listeners=PLAINTEXT://kafka1587.example.net:9092,SSL://kafka1587.example.net:9095

advertised.listeners=PLAINTEXT://kafka1587.example.net:9092,SSL://kafka1587.example.net:9095
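
If you would rather script this edit than make it by hand, a minimal sketch follows. The helper name add_ssl_listener is hypothetical. It assumes listeners and advertised.listeners each sit on a single uncommented line, and it keeps a .bak copy of the file before changing it.

```shell
# Hypothetical helper: append an SSL listener to the listeners and
# advertised.listeners lines of a server.properties file.
# Arguments: properties file, hostname, SSL port.
add_ssl_listener() {
    local props_file="$1" host="$2" port="$3"
    local entry="SSL://${host}:${port}"

    # Do nothing if the SSL entry is already present, so reruns are safe.
    if grep -qF -- "$entry" "$props_file"; then
        return 0
    fi

    # Keep a backup, then rewrite the file from it. Lines starting with '#'
    # do not match the pattern and are copied through unchanged.
    cp "$props_file" "${props_file}.bak"
    sed -E "s|^((advertised\.)?listeners=.+)$|\1,${entry}|" \
        "${props_file}.bak" > "$props_file"
}
```

For the example above, the call would be something like: add_ssl_listener /kafka/config/server.properties kafka1587.example.net 9095. The server.properties path is an assumption; use wherever your broker's file actually lives.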

Then add configuration values to use the keystore and truststore, specify which SSL protocols will be permitted, and set whatever client auth requirements you want:

ssl.keystore.location=/kafka/config/ssl/kafka.keystore.jks

ssl.keystore.password=<WhateverYouSetEarlier>

ssl.truststore.location=/kafka/config/ssl/kafka.truststore.jks

ssl.truststore.password=<WhateverYouSetForThisOne>

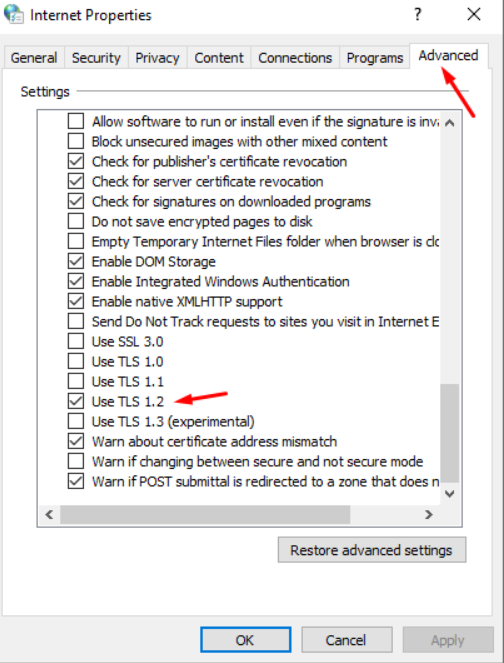

ssl.enabled.protocols=TLSv1.2,TLSv1.3

# Or whatever auth setting you require

ssl.client.auth=none

Save the server.properties file and use “systemctl restart kafka” to restart the Kafka service.
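
After the restart, it is worth confirming that the new listener actually answers with the expected certificate. The sketch below is one way to do that. The function names and the client-ssl.properties file name are my own placeholders, and it assumes openssl is installed on the machine you test from.

```shell
# Print subject, issuer, and validity dates of the certificate the listener presents.
# Arguments: broker hostname, SSL port.
show_listener_cert() {
    local host="$1" port="$2"
    # Reading stdin from /dev/null makes s_client exit once the handshake is done.
    openssl s_client -connect "${host}:${port}" -servername "$host" </dev/null 2>/dev/null |
        openssl x509 -noout -subject -issuer -dates
}

# Write a minimal client config for the Kafka command-line tools.
# This covers ssl.client.auth=none only, so the client needs just a truststore.
# Arguments: output file, truststore path, truststore password.
write_client_ssl_properties() {
    local out_file="$1" truststore="$2" truststore_password="$3"
    # umask 077 keeps a newly created file private, since it contains a password.
    (
        umask 077
        printf '%s\n' \
            "security.protocol=SSL" \
            "ssl.truststore.location=${truststore}" \
            "ssl.truststore.password=${truststore_password}" > "$out_file"
    )
}
```

For example, show_listener_cert kafka1587.example.net 9095 should print the certificate details for that server. The generated properties file can then be handed to the Kafka tools, for instance: kafka-topics.sh --bootstrap-server kafka1587.example.net:9095 --command-config client-ssl.properties --list.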

Update Firewall Rules to Permit Traffic on New Port

firewall-cmd --add-port=9095/tcp

firewall-cmd --add-port=9095/tcp --permanent