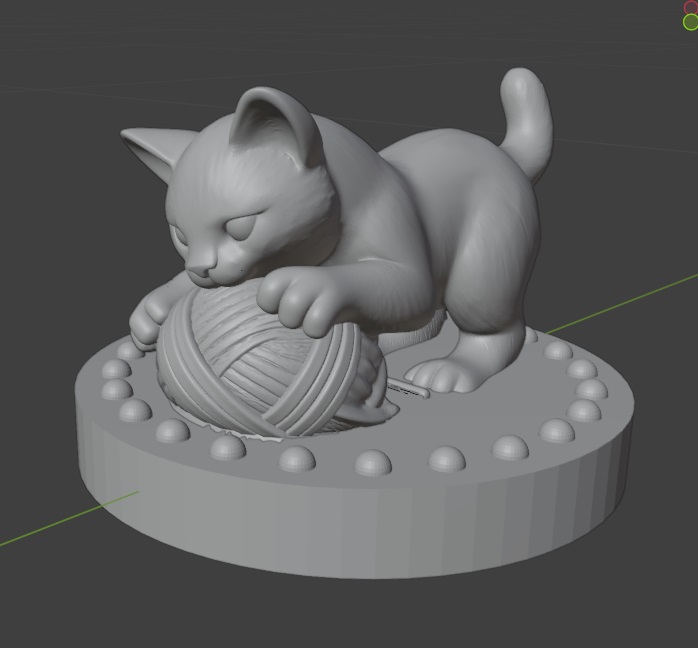

I’ve got a king! I intended to start with the king, but then realized it made more sense to make the pawns (kittens) first and then increase the size and add decorations for the other pieces. So instead of being first, the kitten king was the last one made. Blender has some cool “brushes” for creating folds and gathers in fabric.

Author: Lisa

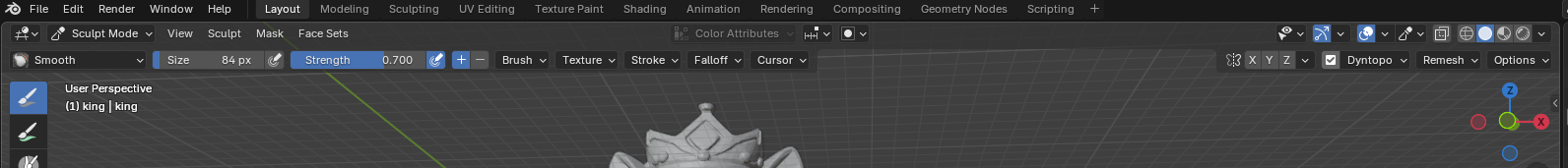

Blender – Box Trim Not Supported in Dynamic Topology Mode

I hand-sculpted bases for my chess set before realizing that I wanted them to be identical. So I wanted to “cut” the hand-sculpted base off of each figure and replace it with a programmatically generated one. Except I kept getting this error when using box trim: “Not supported in dynamic topology mode.”

Switch to a drawing brush and uncheck the Dynamic Topology box.

Then switch back to box trim, and it actually trims.

And then, of course, you need to remember to turn dynamic topology back on for the drawing tools to draw.

Cat Chess – Knight

Turkey Salad

I made a turkey salad with ~2 cups of diced roast turkey, 1/2 cup yogurt, 1/3 cup chopped pecans, 1/4 cup chopped green olives (stuffed with garlic and jalapeno peppers), a little diced onion, ~1 tsp turmeric, 1/2 tsp salt, 1/2 tsp garlic powder, and 1/4 tsp black pepper.

Mixed all together, it looks just like chicken salad 🙂

Served with gluten-free crackers and guacamole for a quick lunch.

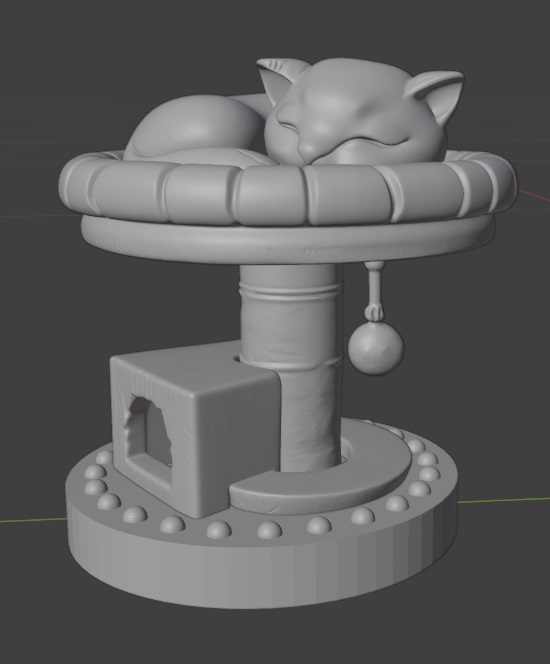

Cat Chess – Rook

Other “Cheese” Mac and Cheese

DNF5 History *UNDO*?!?

This is cool, although I’ve only tested it with a package I didn’t need, where it didn’t matter if it got mucked up. No idea if there’s an undo for, say, kernels, or if undo on an update would roll back to the previous version. That’s the sort of testing to do on a sandbox that you don’t need to still be running 30 minutes from now!

[root@fedora log]# dnf5 install bvi

Updating and loading repositories:

Repositories loaded.

Package Arch Version Repository Size

Installing:

bvi x86_64 1.5.0-1.fc43 fedora 157.1 KiB

Transaction Summary:

Installing: 1 package

Total size of inbound packages is 83 KiB. Need to download 83 KiB.

After this operation, 157 KiB extra will be used (install 157 KiB, remove 0 B).

Is this ok [y/N]: y

[1/1] bvi-0:1.5.0-1.fc43.x86_64 100% | 351.6 KiB/s | 83.0 KiB | 00m00s

———————————————————————————————————————————————————————————————————————————————

[1/1] Total 100% | 164.9 KiB/s | 83.0 KiB | 00m01s

Running transaction

[1/3] Verify package files 100% | 142.0 B/s | 1.0 B | 00m00s

[2/3] Prepare transaction 100% | 2.0 B/s | 1.0 B | 00m00s

[3/3] Installing bvi-0:1.5.0-1.fc43.x86_64 100% | 243.9 KiB/s | 160.0 KiB | 00m01s

Complete!

[root@fedora log]# dnf5 history list

ID Command line Date and time Action(s) Altered

29 dnf install bvi 2026-02-06 04:50:43 1

28 dnf remove kernel-core-6.18.3-200.fc43.x86_64 kernel-modules-6.18.3-200.fc43.x86_64 kernel-modules-extra-6.18.3-200.fc43.x86_64 2026-01-14 05:07:22 4

27 dnf update 2026-01-14 05:01:58 70

26 dnf remove kernel-core-6.17.9-300.fc43.x86_64 kernel-modules-6.17.9-300.fc43.x86_64 kernel-modules-extra-6.17.9-300.fc43.x86_64 2026-01-14 05:01:28 4

25 dnf update 2026-01-09 19:19:48 42

24 dnf update 2026-01-08 19:57:05 618

23 dnf5 remove kernel-core-6.11.10-300.fc41.x86_64 kernel-modules-6.11.10-300.fc41.x86_64 kernel-modules-core-6.11.10-300.fc41.x86_64 2026-01-08 19:53:42 3

22 dnf remove kernel-6.11.10-300.fc41 2025-12-26 02:06:47 1

21 dnf install speedtest-cli 2025-12-19 23:06:12 1

20 yum install chromedriver 2025-12-08 00:20:24 3

19 dnf system-upgrade download --releasever=43 2025-12-06 18:40:14 3792

18 dnf upgrade --refresh 2025-12-06 08:22:19 1422

17 dnf install -y cloud-utils-growpart 2025-12-06 08:16:00 1

16 dnf install xmlsec1 xmlsec1-openssl 2025-10-27 20:44:39 1

15 yum install xmlsec1 2025-10-27 20:42:29 1

14 dnf install mod_md 2025-07-03 19:22:36 1

13 yum install npm 2025-06-23 20:05:13 5

12 dnf update 2025-02-23 19:56:36 482

11 dnf update 2025-01-31 16:01:33 626

10 dnf5 install dnf5-plugin-automatic 2025-01-31 15:59:29 9

9 yum install xxd 2025-01-15 21:38:38 1

8 dnf install mosquitto 2025-01-04 23:40:52 3

7 dnf update 2025-01-03 04:51:41 14

6 dnf update 2025-01-01 00:19:22 358

5 dnf update 2024-12-06 04:30:32 18

4 dnf update 2024-12-06 04:14:41 404

3 dnf update 2024-11-22 17:21:43 65

2 dnf update 2024-11-18 18:33:18 116

1 dnf update 2024-11-14 18:58:09 54

[root@fedora log]# dnf5 history undo 29

Updating and loading repositories:

Repositories loaded.

Package Arch Version Repository Size

Removing:

bvi x86_64 1.5.0-1.fc43 fedora 157.1 KiB

Transaction Summary:

Removing: 1 package

After this operation, 157 KiB will be freed (install 0 B, remove 157 KiB).

Is this ok [y/N]: y

Running transaction

[1/2] Prepare transaction 100% | 4.0 B/s | 1.0 B | 00m00s

[2/2] Removing bvi-0:1.5.0-1.fc43.x86_64 100% | 35.0 B/s | 20.0 B | 00m01s

Complete!

Kitten Chess – Bishop

DOS Command – Bulk Change File Extension

There is a one-line DOS command that finds all files with a specific extension and renames them to a different extension. In my case, I had a bunch of p12 files that I wanted to be pfx so they’d open magically without creating a new file association.

for %f in (*.p12) do ren "%f" "%~nf.pfx"
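For anyone who would rather preview the renames first, or who is not at a Windows prompt, here is a rough Python sketch of the same idea. The function name `change_extension` and its `dry_run` flag are made up for this example, and skipping files whose new name already exists is my own assumption about what is safest, not something the one-liner does.

```python
from pathlib import Path


def change_extension(folder, old_ext, new_ext, dry_run=False):
    """Rename files in `folder` that end in `old_ext` so they end in `new_ext`.

    Returns a list of (old_name, new_name) pairs. With dry_run=True nothing
    is renamed; the list just shows what would happen.
    """
    renamed = []
    # sorted() only keeps the report in a predictable order
    for path in sorted(Path(folder).glob(f"*{old_ext}")):
        if not path.is_file():
            continue  # ignore directories that happen to match
        target = path.with_suffix(new_ext)  # swaps only the last extension
        if target.exists():
            continue  # assumption: never overwrite an existing file
        if not dry_run:
            path.rename(target)
        renamed.append((path.name, target.name))
    return renamed
```

Calling `change_extension(".", ".p12", ".pfx", dry_run=True)` lists the planned renames for the current folder, and dropping `dry_run` performs them. If the original one-liner goes into a .bat file instead of being typed at the prompt, the loop variable has to be written as `%%f` rather than `%f`.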