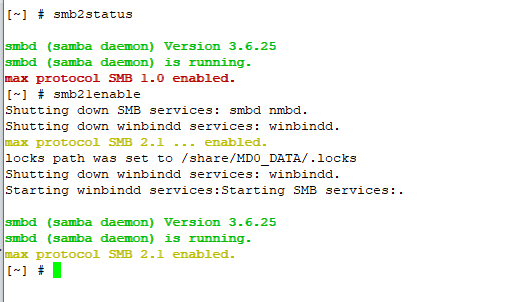

After upgrading to Fedora 39, we started having problems with Samba falling over on startup. The server has IPv6 disabled, and (evidently) smbd is not happy about that: the log below shows it failing to open a socket with “Address family not supported by protocol”. I guess we could enable IPv6, but we don’t really need it.

Adding the following two lines to the [global] section of the smb.conf file and restarting Samba sorted it:

bind interfaces only = yes

interfaces = lo eth0
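For context, here is a minimal sketch of how the two lines might sit inside the [global] section. It assumes the stock Fedora location of /etc/samba/smb.conf and that the server's network interface really is called eth0; on many machines it has a different name (`ip addr` will list them), so adjust to suit. The comment lines are only there for illustration.

```ini
[global]
    # ... whatever settings you already have stay as they are ...

    # Only listen on the interfaces listed below, rather than on everything
    bind interfaces only = yes

    # Loopback plus the LAN interface (eth0 is an assumption, check yours)
    interfaces = lo eth0
```

Keeping lo in the list matters: the Samba documentation warns that some local tools connect to smbd over 127.0.0.1 and can break when bind interfaces only is set without it.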

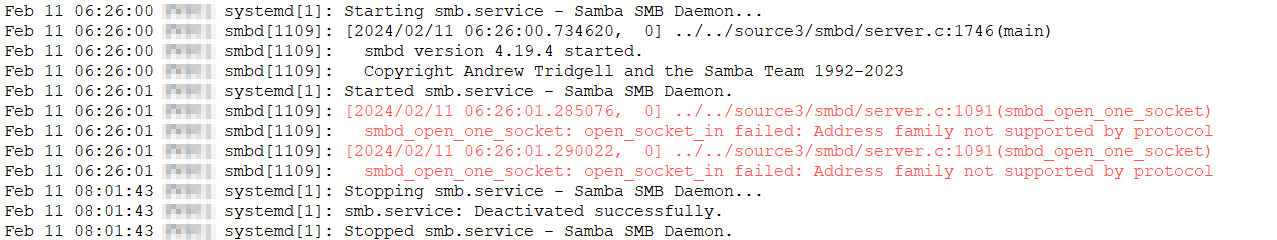

Feb 11 06:26:01 systemd[1]: Started smb.service - Samba SMB Daemon.

Feb 11 06:26:01 smbd[1109]: [2024/02/11 06:26:01.285076, 0] ../../source3/smbd/server.c:1091(smbd_open_one_socket)

Feb 11 06:26:01 smbd[1109]: smbd_open_one_socket: open_socket_in failed: Address family not supported by protocol

Feb 11 06:26:01 smbd[1109]: [2024/02/11 06:26:01.290022, 0] ../../source3/smbd/server.c:1091(smbd_open_one_socket)

Feb 11 06:26:01 smbd[1109]: smbd_open_one_socket: open_socket_in failed: Address family not supported by protocol

Feb 11 08:01:43 systemd[1]: Stopping smb.service - Samba SMB Daemon...

Feb 11 08:01:43 systemd[1]: smb.service: Deactivated successfully.

Feb 11 08:01:43 systemd[1]: Stopped smb.service - Samba SMB Daemon.