The default size limit for CSV reports in Kibana is 10 MB. Since that’s not enough for some of our users, I’ve been testing increases to the xpack.reporting.csv.maxSizeBytes value.

We’re still limited by the ES http.max_content_length value (100mb by default), which the documentation seems pretty confident shouldn’t be increased because the system can become unstable. Increasing the max Kibana report size to 100 MB just yields a different error because ES doesn’t like it. 75 MB exhausted the JavaScript heap (?), which I could get around by setting NODE_OPTIONS=--max_old_space_size=4096 … but that just led to the server abending whenever a report was run (in fact, I had to remove the reports I tried to run from the server to get everything back into a working state). Increasing the limit to 50 MB, though, didn’t do anything unreasonable in dev. So somewhere between 50 and 75 MB is our upper limit, and 50 seemed like a nice round number to me.
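To make those numbers concrete, here is a small sketch that converts the sizes I tried into the byte values xpack.reporting.csv.maxSizeBytes expects and compares each against the Elasticsearch http.max_content_length default of 100mb. The helper names are made up for illustration, and I'm assuming "meg" means 1024 * 1024 bytes.

```python
MIB = 1024 * 1024  # assumption: one "meg" is 1024 * 1024 bytes

# Elasticsearch's documented default for http.max_content_length is 100mb.
ES_MAX_CONTENT_LENGTH = 100 * MIB


def csv_max_size_bytes(megabytes: int) -> int:
    """Convert a size in MB to the byte count used in kibana.yml."""
    return megabytes * MIB


def kibana_setting_line(megabytes: int) -> str:
    """Render the kibana.yml line for a given CSV report size limit."""
    return f"xpack.reporting.csv.maxSizeBytes: {csv_max_size_bytes(megabytes)}"


if __name__ == "__main__":
    # The sizes discussed above: the default, the one that worked, and the two that failed.
    for mb in (10, 50, 75, 100):
        size = csv_max_size_bytes(mb)
        headroom = ES_MAX_CONTENT_LENGTH - size
        print(f"{mb:>3} MB -> {size:>9} bytes, {headroom:>9} bytes under the ES limit")
    print(kibana_setting_line(50))
```

A 100 MB report leaves no room under the ES limit for anything else in the request, which is presumably why that setting fails on the Elasticsearch side. The 75 MB failure was a Node heap problem rather than an ES one, so this comparison doesn't explain it.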

Notes on resource usage: data is held in memory while a report is created, so we’d see an increase in memory/CPU usage while the report is being generated. More accurately, we’d see a longer period of increased usage: if a 10 MB report takes 30 seconds to run, then a 50 MB report is going to take about 2.5 minutes, and the memory/CPU usage is pretty much the same throughout the “a report is running” period.
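The run-time estimate is just linear scaling from the one data point I have. A minimal sketch, assuming generation time grows in proportion to report size (which I haven't measured beyond the 10 MB case); the function name and defaults are mine:

```python
def estimated_runtime_seconds(
    report_mb: float,
    baseline_mb: float = 10.0,
    baseline_seconds: float = 30.0,
) -> float:
    """Scale the observed baseline run time linearly with report size."""
    return baseline_seconds * (report_mb / baseline_mb)


if __name__ == "__main__":
    seconds = estimated_runtime_seconds(50)
    print(f"50 MB report: about {seconds:.0f} seconds ({seconds / 60:.1f} minutes)")
```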

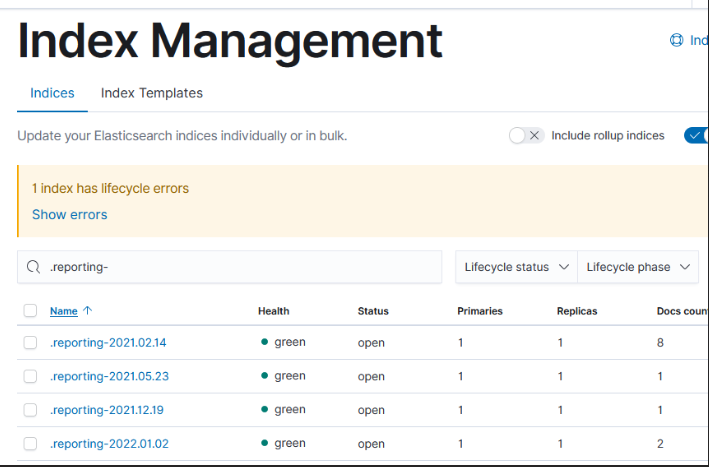

Then, though, the report is stashed in Elasticsearch, within the .reporting* indices, for users to retrieve. And that’s where things get a little silly: architecturally, this is just another index; it ages off with a lifecycle policy if one exists. But it looks like they never created a lifecycle management policy. So you can still retrieve reports run a little over two years ago! We will certainly want to set up a policy to clean up old reports … just have to decide how long is reasonable.
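For when we do pick a number, here is a rough sketch of what the cleanup could look like: an ILM policy with only a delete phase, plus the index setting that attaches it to the existing .reporting* indices. The policy name and the 30-day retention are placeholders I made up, not decisions. The script only prints the request paths and bodies; it doesn't talk to the cluster.

```python
import json

POLICY_NAME = "reporting-cleanup"  # hypothetical policy name
RETENTION = "30d"  # placeholder: the actual retention period is still undecided


def build_delete_policy(min_age: str) -> dict:
    """Body for PUT _ilm/policy/<name>: delete an index once it is min_age old."""
    return {
        "policy": {
            "phases": {
                "delete": {
                    "min_age": min_age,
                    "actions": {"delete": {}},
                }
            }
        }
    }


def build_index_settings(policy_name: str) -> dict:
    """Body for PUT <index>/_settings: attach an ILM policy to existing indices."""
    return {"index": {"lifecycle": {"name": policy_name}}}


if __name__ == "__main__":
    print(f"PUT _ilm/policy/{POLICY_NAME}")
    print(json.dumps(build_delete_policy(RETENTION), indent=2))
    print("PUT .reporting*/_settings")
    print(json.dumps(build_index_settings(POLICY_NAME), indent=2))
```

Two caveats: ILM removes whole indices based on index age, not individual reports, so the effective retention depends on how often a new .reporting index gets created. And the settings call only covers indices that already exist; newly created ones would need the policy applied some other way, such as an index template.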